Toward Wave-based Sound Synthesis for Computer Animation

ACM SIGGRAPH 2018

Abstract

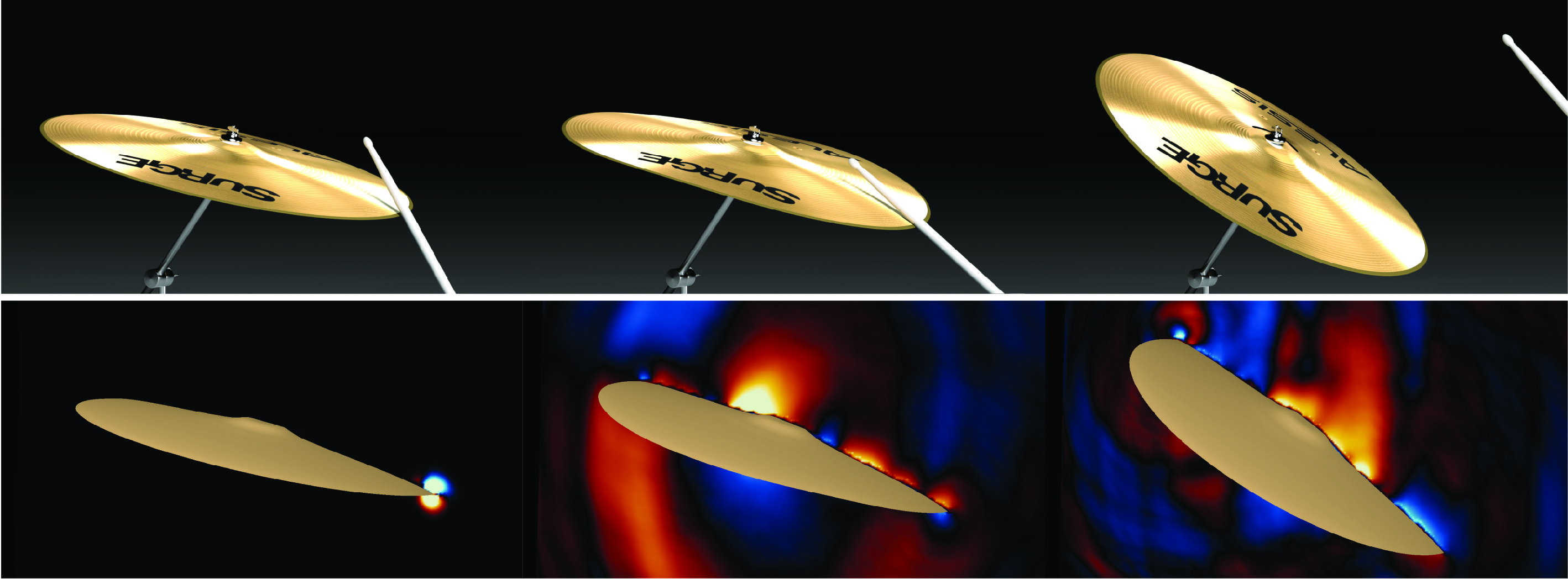

We explore an integrated approach to sound generation that supports a wide variety of physics-based simulation models and computer-animated phenomena. Targeting high-quality offline sound synthesis, we seek to resolve animation-driven sound radiation with near-field scattering and diffraction effects. The core of our approach is a sharp-interface finite-difference time-domain (FDTD) wavesolver, with a series of supporting algorithms to handle rapidly deforming and vibrating embedded interfaces arising in physics-based animation sound. Once the solver rasterizes these interfaces, it must evaluate acceleration boundary conditions (BCs) that involve model- and phenomenon-specific computations. We introduce acoustic shaders as a mechanism to abstract away these complexities, and describe a variety of implementations for computer animation: near-rigid objects with ringing and acceleration noise, deformable (finite element) models such as thin shells, bubble-based water, and virtual characters. Since time-domain wave synthesis is expensive, we only simulate pressure waves in a small region about each sound source, then estimate a far-field pressure signal. To further improve scalability beyond multi-threading, we propose a fully time-parallel sound synthesis method that is demonstrated on commodity cloud computing resources. In addition to presenting results for multiple animation phenomena (water, rigid, shells, kinematic deformers, etc.), we also propose 3D automatic dialogue replacement (3DADR) for virtual characters so that pre-recorded dialogue can include character movement, as well as near-field shadowing and scattering sound effects.
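To make the idea of an FDTD wavesolver driven by an acceleration boundary condition concrete, here is a minimal one-dimensional sketch. It is not the paper's sharp-interface 3D solver: it assumes a textbook leapfrog scheme for the scalar wave equation, a fixed (non-rasterized) vibrating wall at x = 0 enforced through a ghost node so that ∂p/∂x = -ρ a(t), and a simple zero-pressure far end that the wave never reaches during the run. The function name `simulate_piston_1d` and all parameter values are hypothetical, chosen only for illustration.

```python
import math


def simulate_piston_1d(freq_hz=1000.0, v0=1e-3, duration_s=8e-3,
                       length_m=4.0, dx=0.01, listener_m=1.0,
                       c=343.0, rho=1.2, courant=0.5):
    """Leapfrog FDTD for p_tt = c^2 p_xx on [0, length_m] (illustrative only).

    The wall at x = 0 vibrates with velocity v0*sin(omega*t), so its
    acceleration is a(t) = omega*v0*cos(omega*t). The acceleration BC
    dp/dx = -rho*a(t) is imposed with a ghost node left of the wall.
    Returns the pressure samples at the listener node and the time step.
    """
    n = int(round(length_m / dx)) + 1      # number of grid nodes
    dt = courant * dx / c                  # time step (stable for courant <= 1)
    c2 = courant ** 2
    omega = 2.0 * math.pi * freq_hz
    a0 = omega * v0                        # acceleration amplitude
    listener = int(round(listener_m / dx))

    p_old = [0.0] * n                      # pressure at step k-1
    p = [0.0] * n                          # pressure at step k
    signal = []

    for step in range(int(duration_s / dt)):
        accel = a0 * math.cos(omega * step * dt)
        p_new = [0.0] * n
        # Wall node: ghost value p[-1] = p[1] + 2*dx*rho*accel encodes the BC.
        p_new[0] = (2.0 * p[0] - p_old[0]
                    + c2 * (2.0 * p[1] - 2.0 * p[0] + 2.0 * dx * rho * accel))
        # Interior nodes: standard second-order stencil.
        for i in range(1, n - 1):
            p_new[i] = (2.0 * p[i] - p_old[i]
                        + c2 * (p[i + 1] - 2.0 * p[i] + p[i - 1]))
        # Far end stays at zero pressure; the run ends before any
        # reflection from it can return to the listener.
        p_old, p = p, p_new
        signal.append(p[listener])
    return signal, dt


if __name__ == "__main__":
    signal, dt = simulate_piston_1d()
    peak = max(abs(s) for s in signal)
    # For a 1D piston, plane-wave theory predicts a peak near rho*c*v0.
    print(f"peak listener pressure: {peak:.4f} Pa "
          f"(plane-wave estimate: {1.2 * 343.0 * 1e-3:.4f} Pa)")
```

In this toy setting the radiated pressure should approach the plane-wave value ρ c v, which gives a quick sanity check on the sign and scaling of the boundary term. A production solver of the kind described above would additionally need 3D grids, moving and deforming interfaces, and absorbing outer boundaries, none of which are attempted here.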

Links

- Paper [high quality (98M)] [compressed (5.6M)]

- Supplemental Video [high quality (174M)]

- Presentation [Keynote (507M)]

Citation

Video

Acknowledgements

We thank the anonymous reviewers for their constructive feedback. We acknowledge assistance from Jeffrey N. Chadwick with the thin-shell software, Davis Rempe for video rendering, Yixin Wang and Kevin Li for early discussions, and Maxwell Renderer for academic licenses. We acknowledge support from the National Science Foundation (DGE-1656518), and Google Cloud Platform compute resources; Jui-Hsien Wang’s research was supported in part by an internship and donations from Adobe Research. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.