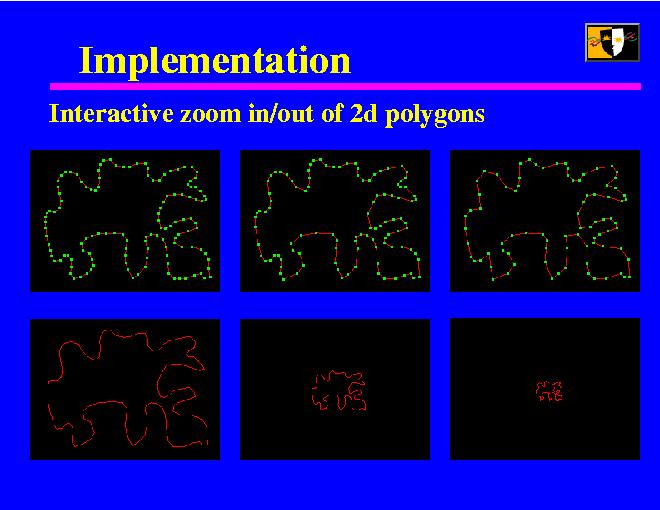

I implemented a small program that lets the user interactively zoom in and out of a 2D polygon. The top row of images shows the geometry that is actually drawn, and the bottom row shows what the user sees on screen.

The user specifies two distances, a close distance and a far distance. If the polygon is closer than the close distance, the full polygon is drawn; if it is farther than the far distance, only the base version is drawn. In between, the number of wavelets used is interpolated logarithmically.
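The distance rule can be sketched as below. The helper `wavelet_count` and its parameters are hypothetical names, not taken from the program. It also assumes one reading of "interpolated logarithmically": the interpolation parameter is taken on a log scale of the distance, so every doubling of the distance removes the same number of wavelets. The result is a float so that the caller can round it or keep the fractional part for blending.

```python
import math


def wavelet_count(distance: float, close: float, far: float, total: int) -> float:
    """Hypothetical helper: how many of `total` wavelets to use at `distance`.

    Closer than `close` -> all wavelets (full polygon).
    Farther than `far`  -> no wavelets (base polygon only).
    In between, interpolate on a logarithmic distance scale (an assumption).
    """
    if not 0.0 < close < far:
        raise ValueError("expected 0 < close < far")
    if distance <= close:
        return float(total)
    if distance >= far:
        return 0.0
    # t runs from 0 at the close distance to 1 at the far distance,
    # measured in log(distance) rather than in distance itself.
    t = math.log(distance / close) / math.log(far / close)
    return total * (1.0 - t)
```

With `close = 1`, `far = 16` and 64 wavelets, a distance of 4 lies halfway on the log scale and yields about 32 wavelets.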

A better, though more complicated, approach would be to add just enough wavelets that the approximation differs from the real polygon by at most a given threshold, say, one pixel.
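A minimal sketch of this threshold idea follows. It relies on assumptions that go beyond the post. Each wavelet `i` is assumed to have a known maximum displacement `max_disp[i]` in world units, and wavelets are assumed to be added in a fixed order (for example, coarse to fine). By the triangle inequality, the omitted wavelets together can move any point by at most the sum of their maximum displacements. Scaling that sum by the current zoom (`pixels_per_unit`, a hypothetical parameter) gives a conservative bound on the error in pixels.

```python
from typing import Sequence


def wavelets_needed(max_disp: Sequence[float], pixels_per_unit: float,
                    threshold_px: float = 1.0) -> int:
    """Smallest n such that omitting wavelets n, n+1, ... keeps the error bound
    (in pixels) at or below `threshold_px`.

    Assumes max_disp[i] >= 0 is the largest displacement wavelet i can cause,
    so the bound for omitting a suffix is the sum of that suffix (a
    conservative, triangle-inequality estimate).
    """
    tail = 0.0          # summed max displacement of the wavelets dropped so far
    n = len(max_disp)   # start with all wavelets included
    # Drop wavelets from the finest end while the bound stays within threshold.
    while n > 0 and (tail + max_disp[n - 1]) * pixels_per_unit <= threshold_px:
        tail += max_disp[n - 1]
        n -= 1
    return n
```

Zooming in raises `pixels_per_unit`, which raises the bound for every omitted suffix. As a result, more wavelets are kept automatically. No hand-tuned close and far distances are needed.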

We don't switch wavelets on or off all at once, since that would cause visible popping while zooming. Instead, each wavelet is linearly interpolated to its full value over several steps.
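The gradual blending can be sketched as follows. This is not the program's actual code. It assumes each wavelet carries a blend weight in [0, 1] that moves linearly toward its target by `1/steps` per update (for example, once per frame). It also assumes, as a hypothetical simplification, that each wavelet has been expanded to a per-vertex offset on the full-resolution polygon and that the base polygon has been upsampled to the same vertex count.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def step_weights(weights: List[float], target_count: int, steps: int) -> List[float]:
    """Move every wavelet's blend weight one linear step toward its target.

    Wavelets with index < target_count head toward 1.0, the rest toward 0.0.
    Each call changes a weight by at most 1/steps, so a wavelet fades in or
    out over `steps` calls instead of popping.
    """
    delta = 1.0 / steps
    new = []
    for i, w in enumerate(weights):
        target = 1.0 if i < target_count else 0.0
        if w < target:
            new.append(min(target, w + delta))
        else:
            new.append(max(target, w - delta))
    return new


def reconstruct(base: List[Point], details: List[List[Point]],
                weights: List[float]) -> List[Point]:
    """Base polygon plus each wavelet's per-vertex offset scaled by its weight."""
    out = []
    for v, (x, y) in enumerate(base):
        dx = sum(w * d[v][0] for w, d in zip(weights, details))
        dy = sum(w * d[v][1] for w, d in zip(weights, details))
        out.append((x + dx, y + dy))
    return out
```

Because the weights change by a bounded amount per update, the drawn polygon changes continuously even when the target wavelet count jumps.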