(The image is by courtesy of Andrei Khodakovsky, Peter Schröder and Wim Sweldens, CalTech)

Geometry Compression

Sam Liang

In addition, with the rapid development of the Internet, more and more graphics applications run across the network, such as distributed collaborative modeling and multi-user games. Such distributed applications must be able to transmit geometry efficiently, which in turn requires good geometry compression schemes.

Furthermore, the speed gap between CPU and memory keeps growing. Interactive animation of geometric models requires that large numbers of triangles be loaded efficiently across the bus into the on-chip cache. The current bottleneck is memory bandwidth, which also calls for good geometry compression algorithms to reduce traffic to the slow memory.

This web page collects some information on Geometry Compression, including some important papers, well-known researchers, useful web links, and other relevant information.

Geometry: Vertex positions.

Connectivity: Specifies the triangles.

Properties: Colors, normals, etc.

Compressed form of the three components in the input.
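As a point of reference for the three components listed above, here is a minimal sketch of an uncompressed triangle mesh and its raw storage cost. The class name `TriangleMesh`, the function `raw_size_bits`, and the 32-bit defaults are assumptions made for illustration only; they do not come from any of the papers collected here.

```python
from dataclasses import dataclass, field


@dataclass
class TriangleMesh:
    """Hypothetical container for the three input components."""
    positions: list                               # geometry: one (x, y, z) per vertex
    triangles: list                               # connectivity: one (i, j, k) per triangle
    normals: list = field(default_factory=list)   # properties (optional)


def raw_size_bits(mesh, float_bits=32, index_bits=32):
    """Storage cost of the uncompressed mesh, split by component.

    Assumes every coordinate is a float of `float_bits` bits and every
    vertex index takes `index_bits` bits.
    """
    return {
        "geometry": len(mesh.positions) * 3 * float_bits,
        "connectivity": len(mesh.triangles) * 3 * index_bits,
        "properties": len(mesh.normals) * 3 * float_bits,
    }


# A tetrahedron: 4 vertices, 4 triangles, no per-vertex properties.
tetra = TriangleMesh(
    positions=[(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)],
    triangles=[(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)],
)
sizes = raw_size_bits(tetra)
```

In this naive layout each triangle costs 96 bits of connectivity, which is the kind of baseline that the bits-per-triangle and bits-per-vertex figures quoted on this page improve upon.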

Multiresolution Progressive Compression: Hoppe; Taubin et al.; Khodakovsky et al. Vertex positions are predicted in a hierarchical fashion. Connectivity costs around 4-10 bits per vertex.

Lossless vs. Lossy Compression.

Arbitrary Meshes vs. Canonical Meshes.

Static Meshes vs. Dynamic Meshes.

2. Mike Chow, "Optimized Geometry Compression for Real-time Rendering", Proceedings of IEEE Visualization '97.

Mike Chow presents an algorithm that efficiently produces generalized triangle meshes. The meshifying algorithms and the variable compression method achieve compression ratios of 30 to 1 and 37 to 1 over ASCII-encoded formats, and 10 to 1 and 15 to 1 over binary-encoded triangle strips. The experimental results show that the memory bandwidth required for real-time visualization of complex datasets is dramatically lowered.
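To make the triangle-strip baseline mentioned above concrete, the sketch below expands a plain triangle strip into individual triangles. This is the ordinary strip, not Chow's generalized triangle mesh (which additionally reuses vertices across strips); the function name `strip_to_triangles` is an assumption for illustration.

```python
def strip_to_triangles(strip):
    """Expand a plain triangle strip into a list of (a, b, c) index triples.

    Each new index forms a triangle with the two indices before it, so a
    strip of n + 2 indices encodes n triangles.
    """
    triangles = []
    for i in range(len(strip) - 2):
        a, b, c = strip[i], strip[i + 1], strip[i + 2]
        if i % 2 == 1:
            a, b = b, a  # flip every other triangle to keep a consistent winding
        triangles.append((a, b, c))
    return triangles


strip = [0, 1, 2, 3, 4, 5]
tris = strip_to_triangles(strip)
```

Here six indices describe four triangles, where an independent triangle list would need twelve.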

3. Gabriel Taubin and Jarek Rossignac, "Geometric Compression Through Topological Surgery", ACM Transactions on Graphics, Vol. 17, No. 2, April 1998, pp. 84-115.

In their algorithm, vertex positions are quantized to the desired accuracy, a vertex spanning tree is used to predict the position of each vertex from its ancestors in the tree, and the correction vectors are entropy encoded. Properties, such as normals, colors, and texture coordinates, are compressed in a similar manner. The connectivity is encoded with no loss of information, at an average of less than two bits per triangle.

Look at Jarek Rossignac's publication page for more papers by him.
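The quantize-then-predict idea described for this paper can be sketched as follows. This is a simplified illustration, not the authors' implementation: it predicts each vertex from its immediate parent only (the paper predicts from several ancestors), it omits the entropy coder, and the names `quantize`, `tree_residuals`, `reconstruct` and the `parent` array layout are assumptions.

```python
def quantize(positions, bits=10):
    """Map float positions to integers in [0, 2**bits - 1] (uniform grid)."""
    lo = [min(p[a] for p in positions) for a in range(3)]
    hi = [max(p[a] for p in positions) for a in range(3)]
    extent = max(h - l for l, h in zip(lo, hi)) or 1.0
    scale = (2 ** bits - 1) / extent
    return [tuple(round((p[a] - lo[a]) * scale) for a in range(3))
            for p in positions]


def tree_residuals(qpos, parent):
    """Correction vectors: quantized position minus the parent's position.

    parent[v] is the index of v's parent in the spanning tree, or -1 for
    the root (predicted as the origin).
    """
    residuals = []
    for v, q in enumerate(qpos):
        pred = qpos[parent[v]] if parent[v] >= 0 else (0, 0, 0)
        residuals.append(tuple(q[a] - pred[a] for a in range(3)))
    return residuals


def reconstruct(residuals, parent):
    """Invert tree_residuals; assumes each parent index precedes its children."""
    qpos = []
    for v, r in enumerate(residuals):
        pred = qpos[parent[v]] if parent[v] >= 0 else (0, 0, 0)
        qpos.append(tuple(r[a] + pred[a] for a in range(3)))
    return qpos


positions = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.0), (1.0, 1.0, 1.0)]
parent = [-1, 0, 1, 2]          # a simple path used as the spanning tree
q = quantize(positions, bits=10)
res = tree_residuals(q, parent)
```

Neighbouring vertices tend to have small correction vectors, and it is this skewed distribution of small values that an entropy coder can exploit. The only loss is in the quantization step; the prediction itself is exactly invertible.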

4. Gabriel Taubin, Andre Gueziec, William Horn, Francis Lazarus. "Progressive Forest Split Compression". Proc. Siggraph 1998, pp. 123-132.

This paper introduces a new adaptive refinement scheme for storing and transmitting manifold triangular meshes in progressive and highly compressed form. It achieves a high compression rate: a forest split operation doubling the number n of triangles of a mesh requires a maximum of approximately 3.5n bits to represent the connectivity changes. The paper also shows how any surface simplification algorithm based on edge collapses can be modified to convert single-resolution triangular meshes to the PFS format.

Click here to see a more detailed critique by Sam Liang.

5. Touma and Gotsman, "Triangle Mesh Compression", Graphics Interface '98, Vancouver, Canada.

Mesh connectivity is encoded in a lossless manner, based on properties of planar graphs. Vertex coordinate data is quantized and then losslessly encoded using elaborate prediction schemes and entropy coding. Vertex normal data is also quantized and then losslessly encoded using prediction methods.
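One predictor commonly associated with this line of work is the parallelogram rule: a new vertex across an edge is guessed to complete a parallelogram with the already-decoded triangle on the other side. The sketch below illustrates that rule on quantized integer coordinates; the summary above only speaks of "elaborate prediction schemes", so treating this as the exact predictor used is an assumption, and the function names are hypothetical.

```python
def parallelogram_predict(a, b, c):
    """Predict the vertex opposite c across edge (a, b): d is about a + b - c."""
    return tuple(a[i] + b[i] - c[i] for i in range(3))


def residual(actual, predicted):
    """Correction vector that would be passed on to the entropy coder."""
    return tuple(x - y for x, y in zip(actual, predicted))


# Quantized coordinates of an already-decoded triangle (a, b, c).
a, b, c = (10, 0, 0), (0, 10, 0), (0, 0, 0)
d_actual = (11, 9, 1)            # true position of the next vertex

pred = parallelogram_predict(a, b, c)
res = residual(d_actual, pred)
```

The decoder forms the same prediction from vertices it already has and adds the residual back, so the quantized coordinates are recovered exactly.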

6. Stefan Gumhold. "Real Time Compression of Triangle Mesh Connectivity". Siggraph '98.

Their algorithm has some nice properties: i) simple implementation, potentially in hardware; ii) very fast compression: 750,000 triangles per second on a 300 MHz Pentium; iii) even faster decompression: 1,500,000 triangles per second; iv) connectivity compression to about 1.6 bits/triangle (Huffman encoding) or 1.0 bits/triangle (adaptive arithmetic coding).
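The gap between the Huffman and arithmetic-coding figures is typical when one symbol dominates: a Huffman code spends at least one whole bit per symbol, while an arithmetic coder can approach the entropy. The sketch below compares the two measures on a made-up operation stream; the symbols "C", "S", "E", their frequencies, and the one-symbol-per-triangle assumption are purely illustrative and are not taken from the paper.

```python
import heapq
import math
from collections import Counter


def entropy_bits_per_symbol(symbols):
    """Shannon entropy of the empirical symbol distribution."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): 1}
    # Heap entries: (weight, unique tie-breaker, {symbol: depth so far}).
    heap = [(w, i, {s: 0}) for i, (s, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        w1, _, d1 = heapq.heappop(heap)
        w2, _, d2 = heapq.heappop(heap)
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]


def huffman_bits_per_symbol(symbols):
    """Average Huffman code length over the stream."""
    counts = Counter(symbols)
    lengths = huffman_code_lengths(symbols)
    return sum(counts[s] * lengths[s] for s in counts) / len(symbols)


# Hypothetical stream: one symbol per triangle, heavily skewed towards "C".
ops = ["C"] * 90 + ["S"] * 5 + ["E"] * 5
h = entropy_bits_per_symbol(ops)
huff = huffman_bits_per_symbol(ops)
```

For this stream the Huffman average is above one bit per symbol while the entropy is well below it, which is the room an arithmetic coder can use.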

7. S. Gumhold and R. Klein, "Compression of Discrete Multiresolution Models", Technical Report WSI-98-1, Wilhelm-Schickard-Institut für Informatik, University of Tübingen, Germany, January 1998.

8. J. Snoeyink and M. van Kreveld. "Linear-Time Reconstruction of Delaunay Triangulations with Applications". Proc. European Symposium on Algorithms, 1997.

Their method can be seen as a form of geometry compression and progressive expansion. Using their method, the structure of a terrain model can be encoded in a permutation of the points, and can be reconstructed incrementally in linear time.

9. J. Lengyel. "Compression of Time-Dependent Geometry", ACM 1999 Symposium on Interactive 3D Graphics.

This paper presents a new view of time-dependent geometry as a streaming media type, along with new techniques that take advantage of the large amount of coherence in time.

10. Hugues Hoppe. "Progressive Meshes". Proc. Siggraph 1996, pp. 99-108.

This paper presents the progressive mesh (PM) representation, a new scheme for storing and transmitting arbitrary triangle meshes. This is a lossless, continuous-resolution representation, and it addresses several practical problems in graphics: smooth geomorphing of level-of-detail approximations, progressive transmission, mesh compression, and selective refinement.
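The progressive mesh idea rests on an invertible pair of operations: an edge collapse that simplifies the mesh, and a vertex split that undoes it. The sketch below shows that round trip on a toy mesh. It is an illustration under stated assumptions, not Hoppe's encoding: the record here stores the affected triangles verbatim rather than a compact vertex-split description, it assumes the rewritten triangles do not coincide with triangles already in the mesh, and all names are hypothetical.

```python
def edge_collapse(positions, triangles, u, v):
    """Collapse vertex v into u.

    positions: {vertex id: (x, y, z)}; triangles: set of (i, j, k) tuples.
    Returns the coarser mesh and a record sufficient to undo the collapse.
    """
    incident = {t for t in triangles if v in t}
    # Triangles containing both u and v degenerate and vanish; the rest
    # are re-pointed from v to u.
    rewritten = {tuple(u if w == v else w for w in t)
                 for t in incident if u not in t}
    coarse_tris = (triangles - incident) | rewritten
    coarse_pos = {w: p for w, p in positions.items() if w != v}
    record = {"v": v, "v_pos": positions[v],
              "incident": incident, "rewritten": rewritten}
    return coarse_pos, coarse_tris, record


def vertex_split(positions, triangles, record):
    """Undo an edge collapse using its record."""
    fine_tris = (triangles - record["rewritten"]) | record["incident"]
    fine_pos = dict(positions)
    fine_pos[record["v"]] = record["v_pos"]
    return fine_pos, fine_tris


# A square (vertices 0..3) fanned around a centre vertex 4.
positions = {0: (0, 0, 0), 1: (1, 0, 0), 2: (1, 1, 0), 3: (0, 1, 0),
             4: (0.5, 0.5, 0)}
triangles = {(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)}

coarse_pos, coarse_tris, rec = edge_collapse(positions, triangles, u=0, v=4)
fine_pos, fine_tris = vertex_split(coarse_pos, coarse_tris, rec)
```

Storing a coarse base mesh plus the sequence of such records, replayed in reverse order, gives a stream that can be cut off at any point to obtain an intermediate level of detail, and that reproduces the original mesh exactly when played to the end.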

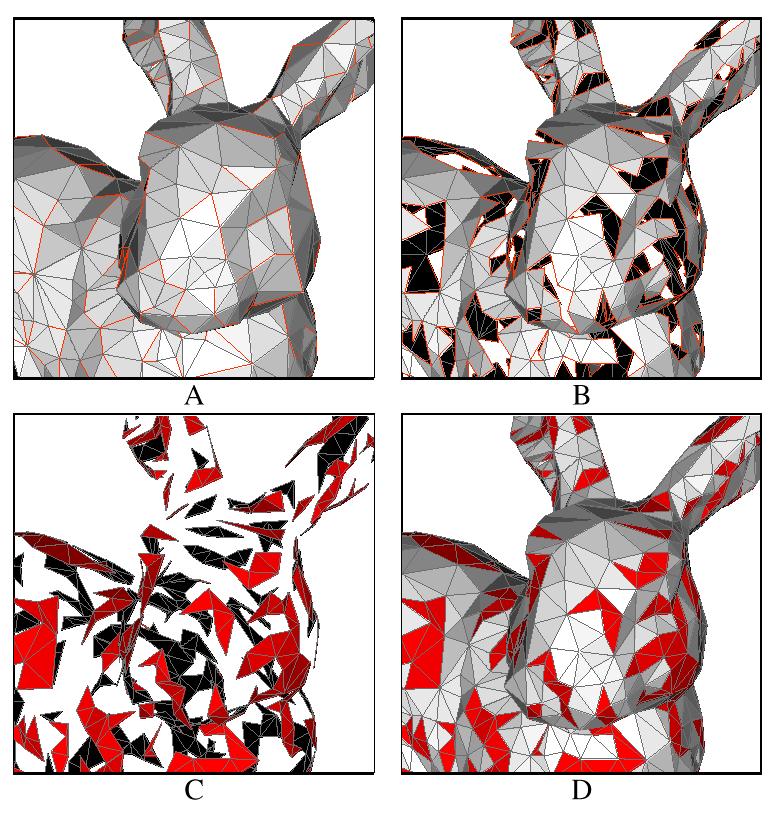

11. Andrei Khodakovsky, Peter Schröder, Wim Sweldens. "Progressive Geometry Compression". Accepted by Siggraph 2000.

They propose a new progressive compression scheme for arbitrary-topology, highly detailed and densely sampled meshes arising from geometry scanning. They observe that meshes consist of three distinct components: geometry, parameter, and connectivity information. The latter two do not contribute to the reduction of error in a compression setting. Using semi-regular meshes, parameter and connectivity information can be virtually eliminated. Coupled with semi-regular wavelet transforms, zerotree coding, and subdivision-based reconstruction, they see improvements in error by a factor of four (12 dB) compared to other progressive coding schemes.
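The "factor of four (12 dB)" figure is consistent with the usual decibel convention for amplitude-like quantities, 20 log10 of the ratio. The short check below assumes that convention applies to the error measure here; the function name is hypothetical.

```python
import math


def error_ratio_to_db(ratio):
    """Convert an amplitude-type error ratio to decibels (20 * log10)."""
    return 20.0 * math.log10(ratio)


gain_db = error_ratio_to_db(4)   # roughly 12.04
```

Under a power-ratio convention (10 log10) the same factor would come out near 6 dB, so the quoted 12 dB suggests the amplitude form.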