(DRAFT) CS 348B - Computer Graphics: Image Synthesis Techniques

Homework 3 - Camera Simulation

Assigned Thursday, April 20. Due Sunday, May 2.

Updated 4/25

- New versions of existing scene files, the Linux Makefile, and additional scene files and textures are available in the class directory. In addition, binaries of a reference implementation are available for Linux RedHat 9.0 (realistic.so) and Windows (realistic.dll); a Mac binary will be available shortly as well. Please update your working version by copying /usr/class/cs348b/files/hw3 (ftp.stanford.edu/class/cs348b). Make sure to back up your work first!

- Step 3 directions have been added below.

- The FAQ has been updated.

Description

Many rendering systems approximate the light arriving on the film plane by assuming a pinhole camera, which produces images where everything that is visible is sharp. In contrast, real cameras contain multi-lens assemblies with different imaging characteristics such as limited depth of field, field distortion, vignetting and spatially varying exposure. In this assignment, you'll extend pbrt with support for a more realistic camera model that accurately simulates these effects.

Specifically, we will provide you with data about real wide-angle, normal and telephoto lenses, each composed of multiple lens elements. You will build a camera plugin for pbrt that simulates the traversal of light through these lens assemblies.

With this camera simulator, you'll explore the effects of focus, aperture and exposure. You will empirically characterize the critical points of the telephoto and normal lenses. Using these data, you can optimize the performance of your simulator considerably.

Step 0

You must have pbrt installed correctly, as in homework 1.

In addition, you should add '.' (the local directory) to your PBRT_SEARCHPATH environment variable.

Step 1

Re-read "A Realistic Camera Model for Computer Graphics" by Kolb, Mitchell, and Hanrahan.

Step 2: Compound Lens Simulator

- Copy /usr/class/cs348b/files/hw3 to a directory at the same level as your local pbrtsrc directory. Alternatively, you can use anonymous ftp to get the files (ftp.stanford.edu/class/cs348b). This directory consists of:

- A Makefile for Linux, and a Visual Studio 2003 project for Windows.

- A stub C++ file, realistic.cpp, for the code you will write.

- Seven scene files, which end in .pbrt (the same format as the .lrt files used in previous assignments).

- Four lens files, which end in .dat.

- Binaries for a reference implementation of realistic.cpp (Linux RedHat 9.0: realistic.so; Windows: realistic.dll; an OSX implementation is coming soon).

- Various textures used by the scene files.

- Modify the stub file, realistic.cpp, to trace rays from the film plane through the lens system supplied in the .dat files. The following is a suggested course of action, but feel free to proceed in the way that seems most logical to you:

- Build an appropriate data structure to store the lens parameters supplied in the tab-delimited input .dat files. The format of the tables in these files is given in Figure 1 of the Kolb paper.

- Develop code to trace rays through this stack of lenses. Updated (4/22): Please use a full lens simulation rather than the thick lens approximation in the paper. It's easier (you don't have to calculate the thick lens parameters) and sufficiently efficient for this assignment.

- Write the RealisticCamera::GenerateRay function to trace randomly sampled rays through the lens system. For this part of the assignment, it will be easiest to just fire rays at the back element of the lens. Some of these rays will hit the aperture stop and terminate before they exit the front of the lens.

- Render images using commands such as 'pbrt dof-dragon.dgauss.pbrt'. Decrease the noise (and increase the rendering time) by increasing the "integer pixelsamples" parameter in the scene files.

- Updated (4/25): You may compare your output against the reference implementation (realistic.so on Linux RedHat 9.0 and realistic.dll on Windows; a Mac implementation will be available shortly).
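For the data-structure step above, here is a minimal C++ sketch of a reader for the .dat lens tables. It assumes each non-comment row holds four whitespace-separated numbers in the order radius, thickness, index of refraction, aperture diameter (check this against Figure 1 of the Kolb paper and the header lines of the provided files), that lines starting with '#' are comments, and that the aperture stop row may list an index of 0, which the sketch treats as air. LensInterface and ParseLensTable are hypothetical names, not part of the stub.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One row of a lens table; all lengths in millimeters.
// ASSUMED column order: radius, thickness, index of refraction, aperture.
struct LensInterface {
    double radius;     // signed radius of curvature; 0 marks the aperture stop
    double thickness;  // axial distance from this interface to the next one (toward the film)
    double n;          // index of the medium after this interface (toward the film)
    double aperture;   // clear diameter of this interface
    bool IsStop() const { return radius == 0.0; }
};

// Reads rows in file order (assumed: world-side element first, film-side last).
std::vector<LensInterface> ParseLensTable(std::istream& in) {
    std::vector<LensInterface> rows;
    std::string line;
    while (std::getline(in, line)) {
        // Skip blank lines and '#' comment lines.
        std::string::size_type first = line.find_first_not_of(" \t\r");
        if (first == std::string::npos || line[first] == '#') continue;

        std::istringstream fields(line);  // >> splits on tabs and spaces alike
        LensInterface row;
        if (!(fields >> row.radius >> row.thickness >> row.n >> row.aperture))
            continue;  // ignore malformed rows (you may prefer to report an error)
        if (row.n == 0.0) row.n = 1.0;  // assumption: an index of 0 means air
        assert(row.aperture > 0.0 && "aperture diameters should be positive");
        rows.push_back(row);
    }
    return rows;
}
```

To read one of the provided files, pass it a std::ifstream (which needs <fstream>). Keeping the rows in file order means a ray leaving the film visits them from the back of the vector to the front.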

Sample Images:

- From left to right: telephoto, normal, wide angle and fisheye.

- Note that these were produced with 512 pixel samples rather than the default 32.

- Notice that the wide angle image is especially noisy. Why is that? Hint: look at the ray traces at the top of this web page.

Some conventions:

- Assume that the origin of the camera system is at the left-most element of the stack (the point closest to the world).

- Assume that the 'filmdistance' parameter passed to the RealisticCamera constructor is measured from the right-most element of the stack (the point closest to the film).

- There is exactly one aperture stop per lens data file. This is the entry with a radius of 0. Note that the diameter of this entry is the maximum aperture of the lens. The actual aperture diameter to use is passed in the scene file, and appears as a parameter to the RealisticCamera constructor.

- In this assignment, everything is measured in millimeters.
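Under these conventions, tracing a film ray through the stack comes down to a ray-sphere test per lens interface, a disk test at the aperture stop, and Snell's law in vector form. The following is a minimal, self-contained C++ sketch of that loop, not the reference implementation. It assumes rows run from the world-side element to the film-side element; the camera looks down +z with the front element's vertex at z = 0 (so the film sits at the rear vertex's z minus filmdistance); a positive radius puts the center of curvature on the film side; and each row's index describes the medium on its film side. Vec3, Refract, Interface, and TraceLensStack are hypothetical helpers; inside pbrt you would use its own vector and ray types.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };
inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
inline double Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 Normalize(Vec3 v) { return (1.0 / std::sqrt(Dot(v, v))) * v; }

// Snell's law in vector form. d: unit incident direction; nrm: unit surface
// normal (either orientation); n1 -> n2: indices on the incident and
// transmitted sides. Returns false on total internal reflection.
bool Refract(Vec3 d, Vec3 nrm, double n1, double n2, Vec3* out) {
    if (Dot(d, nrm) > 0.0) nrm = -1.0 * nrm;  // make the normal face the incoming ray
    double eta = n1 / n2;
    double cosI = -Dot(nrm, d);
    double sin2T = eta * eta * (1.0 - cosI * cosI);
    if (sin2T > 1.0) return false;  // total internal reflection
    double cosT = std::sqrt(1.0 - sin2T);
    *out = Normalize(eta * d + (eta * cosI - cosT) * nrm);
    return true;
}

// Minimal lens row (mm): signed radius (0 = aperture stop), thickness to the
// next row, index of the medium after this row (toward the film), diameter.
struct Interface { double radius, thickness, n, aperture; };

// Traces a ray from the film out through `lens`. stopDiameter is the aperture
// given in the scene file. Returns false if the ray is blocked; otherwise
// *o and *d describe the ray leaving the front element.
bool TraceLensStack(const std::vector<Interface>& lens, double stopDiameter,
                    Vec3* o, Vec3* d) {
    assert(stopDiameter > 0.0);
    std::vector<double> vertexZ(lens.size());
    double z = 0.0;
    for (size_t i = 0; i < lens.size(); ++i) { vertexZ[i] = z; z -= lens[i].thickness; }
    // Index of the medium after row k; outside the stack (k < 0) is air.
    auto indexAfter = [&](int k) { return (k < 0 || lens[k].n == 0.0) ? 1.0 : lens[k].n; };

    for (int i = static_cast<int>(lens.size()) - 1; i >= 0; --i) {
        const Interface& s = lens[i];
        bool isStop = (s.radius == 0.0);
        Vec3 center = {0.0, 0.0, vertexZ[i] - s.radius};  // positive radius: center toward the film
        double t;
        if (isStop) {
            if (d->z <= 0.0) return false;  // ray is not heading toward the world
            t = (vertexZ[i] - o->z) / d->z;
        } else {
            Vec3 oc = *o - center;
            double b = Dot(oc, *d);  // d is unit length, so the quadratic's a = 1
            double disc = b * b - (Dot(oc, oc) - s.radius * s.radius);
            if (disc < 0.0) return false;  // misses the sphere entirely
            double root = std::sqrt(disc);
            // Keep the cap that contains the vertex: the far hit when the center
            // is behind the vertex (radius > 0), the near hit otherwise.
            t = (s.radius > 0.0) ? -b + root : -b - root;
        }
        if (t <= 0.0) return false;
        Vec3 p = *o + t * (*d);
        double halfAperture = 0.5 * (isStop ? stopDiameter : s.aperture);
        if (p.x * p.x + p.y * p.y > halfAperture * halfAperture) return false;  // clipped
        if (!isStop) {
            Vec3 nrm = Normalize(p - center);
            if (!Refract(*d, nrm, indexAfter(i), indexAfter(i - 1), d)) return false;
        }
        *o = p;
    }
    return true;
}
```

A false return is the case for which GenerateRay should hand back a dead ray. The +z viewing direction was chosen to match pbrt's camera space; if your own frame differs, flip the signs accordingly.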

Hints:

- ConcentricSampleDisk() is a useful function for converting two 1D uniform random samples into a uniform random sample on a disk. See p. 270 of the PBRT book.

- It may be helpful to decompose the lens stack into individual lens interfaces and the aperture stop. For the lens interfaces, you'll need to decide how to test whether rays intersect them, and how rays refract according to the change of index of refraction on either side (review Snell's law).

- For rays that terminate at the aperture stop (or are otherwise blocked inside the lens), return a dead ray (one where mint > maxt, for instance) from the GenerateRay function.

- Be careful to weight the rays appropriately (this is represented by the value returned from GenerateRay). You should derive the weight from the integral equation governing irradiance incident on the film plane (hint: in the simplest form, this equation contains a cosine term raised to the fourth power). The exact weight will depend on the sampling scheme that you use to estimate this integral. Make sure that your estimator is unbiased if you use importance sampling! The paper also contains details on this radiometric calculation.

- As is often the case in rendering, your code won't produce correct images until everything is working just right. Try to think of ways that you can modularize your work and test as much of it as possible incrementally as you go. Use assertions liberally to verify that your code is doing what you think it should at each step. Another suggestion is to produce a visualization of the rays refracting through your lens system as a debugging aid (compare to those at the top of this web page).
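The sampling and weighting hints above can be combined into one small routine. In the simplest setting the film irradiance is E(x') = ∫ L(x'', x') cos θ' cos θ'' / |x'' - x'|^2 dA''. If the sampled disk is parallel to the film at axial distance Z, both cosines equal cos θ and |x'' - x'| = Z / cos θ, so E(x') = (1/Z^2) ∫ L cos^4 θ dA''. Drawing x'' uniformly over a disk of area A (pdf 1/A) gives the unbiased estimate L · A cos^4 θ / Z^2, so each surviving ray carries weight A cos^4 θ / Z^2. The C++ sketch below assumes the rear element is replaced by a flat disk of its clear radius at its vertex, which is a simplification chosen here. SampleUnitDisk restates the Shirley-Chiu concentric mapping that pbrt's ConcentricSampleDisk() implements, only so the block compiles on its own. FilmRay and SampleRearDisk are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

const double kPi = 3.14159265358979323846;

// Shirley-Chiu concentric mapping from [0,1)^2 to the unit disk.
void SampleUnitDisk(double u1, double u2, double* x, double* y) {
    double sx = 2.0 * u1 - 1.0, sy = 2.0 * u2 - 1.0;  // map to [-1,1]^2
    if (sx == 0.0 && sy == 0.0) { *x = 0.0; *y = 0.0; return; }
    double r, theta;
    if (std::fabs(sx) > std::fabs(sy)) {
        r = sx;
        theta = (kPi / 4.0) * (sy / sx);
    } else {
        r = sy;
        theta = kPi / 2.0 - (kPi / 4.0) * (sx / sy);
    }
    *x = r * std::cos(theta);
    *y = r * std::sin(theta);
}

// A film-to-lens ray plus its Monte Carlo weight.
struct FilmRay {
    double ox, oy, oz;  // origin on the film (mm)
    double dx, dy, dz;  // unit direction toward the rear element
    double weight;      // A * cos^4(theta) / Z^2
};

// Film point (fx, fy) lies on the plane z = filmZ, with the film normal along +z.
// The rear element is approximated by a flat disk of radius rearRadius centered
// on the axis at z = rearZ > filmZ, sampled uniformly by area (pdf = 1 / A).
FilmRay SampleRearDisk(double fx, double fy, double filmZ,
                       double rearZ, double rearRadius, double u1, double u2) {
    assert(rearZ > filmZ && rearRadius > 0.0);
    double px, py;
    SampleUnitDisk(u1, u2, &px, &py);
    px *= rearRadius;
    py *= rearRadius;
    double vx = px - fx, vy = py - fy, vz = rearZ - filmZ;
    double len = std::sqrt(vx * vx + vy * vy + vz * vz);
    FilmRay ray = {fx, fy, filmZ, vx / len, vy / len, vz / len, 0.0};
    double cosTheta = ray.dz;  // angle to the film normal (equal to the angle to the disk normal)
    double Z = rearZ - filmZ;  // axial film-to-disk distance
    double area = kPi * rearRadius * rearRadius;
    ray.weight = area * std::pow(cosTheta, 4) / (Z * Z);
    return ray;
}
```

Rays that are then blocked inside the lens contribute nothing, which is what returning a dead ray achieves. Keeping the constant A / Z^2 factor, rather than normalizing it away, matters once you start comparing exposures between apertures.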

Step 3: Exploring the way a camera works with your simulator

In this section you'll explore how various choices affect focus, depth of field and exposure in your camera.

- The double gauss and telephoto lenses can be well approximated as thick lenses. Your first task is to determine the critical points of these lenses to characterize their thick lens approximations. Rather than tracing single rays, as described in the paper, however, you will take certain special pictures to do so. This will let you develop a better intuition for the way focus works in a camera. It's also the way you would characterize a camera lens in the real world! The procedure is as follows:

- Take a picture that tells you the film-side focal point. The basic idea is to take a picture of an object at infinity and find the film plane distance that makes it sharp. The depth of that film plane is the depth of the film-side focal point. (Why is this?) We have provided you with a scene file, infinity-test.pbrt, that will get you started. This file renders an image of the focus target below at a distance of 10 m. You just have to find the film plane distance that creates a sharp picture and set it in the scene file. Remember that the "filmdistance" parameter specifies the depth of the film from the back (not the front!) of the lens (see the conventions described in Step 2).

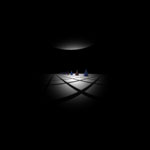

- Take a second picture with a film depth that gives roughly unit magnification, and that shows you the depth of the conjugate world focus plane. A very tedious way of doing this would be to modify infinity-test.pbrt to put the target closer to the lens and find the film plane that makes it sharp, then iteratively adjust the depths of the target and film planes until you find unit magnification. A better way is to use a depth of field target, as you would in the real world. The idea here is to fix a film plane and take a picture of a ruler on a 45 degree angle. If the ruler intersects the plane of focus, then the sharpest point on the image of the ruler lets you deduce the depth of the conjugate plane. We have created a file, dof_target.pbrt, containing this object, and a test scene, dof-test.pbrt, that includes it. The scene is set up so that when you take a picture of the ruler, the point of sharpest focus lets you read off the distance of the plane of focus from the front of the lens. For example, the following image shows that the plane of focus is between 70 and 80 mm from the front of the ruler. The texture for the ruler is shown on the right.

Note that you may still have to move the target and/or the camera so that a sharp point is within the camera's field of view. You will also have to find the film depth that gives approximately unit magnification.

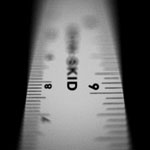

- From the procedures above you have measured, or can calculate, values for all the variables in the following diagram of the thick lens configuration:

D_X is the depth coordinate (measured) of the focal plane in the depth of field test. D_F is the coordinate of the world-space focal point. D_P and D_P' are the coordinates of the principal planes. Note that depending on the lens, D_P' may actually be to the left of D_P, contrary to what's shown in this diagram. D_F' is the location of the image-space focal point, and D_X' is the depth coordinate of the film. By convention these coordinates are all relative to the front element of the lens, which is not shown. Note that the focal depth, F, is the same on both sides. Hint: W and W' are simple functions of the focal depth, F, and the magnification, M. You measure M from the image you generate in the depth of field test. This fact will be needed when you solve for the values of the unknown variables.

- Compute the thick lens parameters for the normal and telephoto lenses. How do they differ? We will ask you to report values for these in your write-up. You may discuss strategies for finding the correct film plane quickly, etc., but please don't discuss the actual values you compute with other students.

- Investigate depth of field. Use the telephoto lens for this test.

- Set up the camera so that it frames the depth of field target and is focused at 1 meter. Now take two pictures, one with the aperture set at the maximum radius, and another with it stopped down to 1/2 its radius. How does the depth of field change?

- Now take two pictures with the aperture wide open. One should be focused at 1 meter and the other at 2 meters. How does the depth of field change?

- Investigate exposure. Take two pictures, one with the aperture fully open and one with it half open. How does the exposure change? Does your ray tracer output the correct exposure? Why or why not?
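Once the thick lens measurements are in hand, the remaining algebra can be written down after fixing a sign convention. Because the diagram is not reproduced here, the C++ sketch below assumes that W is the distance from the in-focus world plane D_X to the world-side focal point D_F, and that W' is the distance from the film-side focal point D_F' to the film D_X'. Under that assumption, Newton's form of the lens equation gives W = F / M and W' = F · M (so W · W' = F^2). It also assumes coordinates increase toward the film with the front element at 0, so world-side coordinates are negative. MeasureMagnification assumes square pixels and a ruler measured along the image's horizontal axis. Both function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Thick-lens critical points as axial coordinates (mm). Assumed convention:
// z = 0 at the front element and z increases toward the film, so world-side
// coordinates are negative.
struct ThickLens {
    double F;        // focal length (the same on both sides)
    double DF;       // world-side focal point
    double DP;       // world-side principal plane
    double DPprime;  // film-side principal plane
};

// Magnification M from a rendered depth-of-field picture: the ruler, of true
// width rulerWidthMm, spans rulerPixels pixels horizontally in an xRes-by-yRes
// image whose film diagonal ("filmdiag") is filmDiagMm. Assumes square pixels.
double MeasureMagnification(double rulerPixels, int xRes, int yRes,
                            double filmDiagMm, double rulerWidthMm) {
    assert(xRes > 0 && yRes > 0 && rulerWidthMm > 0.0);
    double filmWidthMm = filmDiagMm * xRes / std::hypot(double(xRes), double(yRes));
    double imageWidthMm = rulerPixels * filmWidthMm / xRes;
    return imageWidthMm / rulerWidthMm;
}

// DFprime: film-side focal point (from the infinity test).
// DXprime: film plane used for the depth-of-field picture.
// DX:      in-focus world plane read off the ruler (negative in this convention).
// M:       magnification measured in that same picture (> 0).
// Assumes W = DF - DX = F / M and W' = DXprime - DFprime = F * M.
ThickLens SolveThickLens(double DFprime, double DXprime, double DX, double M) {
    assert(M > 0.0);
    ThickLens t;
    t.F = (DXprime - DFprime) / M;  // from W' = F * M
    t.DPprime = DFprime - t.F;      // D_F' lies F beyond D_P'
    t.DF = DX + t.F / M;            // from W = F / M
    t.DP = t.DF + t.F;              // D_P lies F beyond D_F
    return t;
}
```

For the roughly unit-magnification picture, M is close to 1 and the first line reduces to F = D_X' - D_F'. With this convention, negate the distance you read off the ruler to get D_X, and remember that filmdistance is measured from the back of the lens, not the front.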

Step 4: Web page submission

- As before, you will report your findings on a web page. To make the write-up easier and the formatting more consistent, we have created a web page template where you can just fill in the blanks.

- Copy the /usr/class/cs348b/files/hw3/submission directory to your web-visible directory, and edit index.html. The items that you have to replace are marked in green.

- Note: please do not include your source code on the web page or link to it this time. You will email it to us (see below).

- Though it is not a requirement, feel free to append a discussion of anything extra or novel that you did at the end of your web page.

- Please send an email to cs348b-spr0304-staff@lists.stanford.edu:

- Make the subject 'HW3 submission'. (Please don't send this email until your web page is completely done, and please don't send any other emails with that subject line if you can help it. This helps us keep everything in order.)

- In the body of your email, please include the URL for your web page as the first line.

- Please attach your source code, realistic.cpp, and your final scene files for Step 3 to your email.

That's it!

FAQ

Please check this FAQ before sending questions to the staff email list. We will update this list as common issues arise.

- Q: PBRT complains that it can't find realistic.dll or realistic.so. What should I do?

A: Make sure that '.' (the local directory) is in your PBRT_SEARCHPATH environment variable. In this assignment, you will compile a camera plugin (realistic.so/dll) in your working directory. Note that the path is ':' delimited on Linux and ';' delimited on Windows.

- Q: Should we be implementing the thick lens approximation described in the paper or a full lens simulation?

A: Please implement the full lens simulation, which is less work for you and only slightly less efficient computationally. Implementing the thick lens approximation is a superset of the work, since you need the full lens simulation in order to calculate the thick lens parameters.

- Q: Why are my images so noisy?

A: Integrating over the surface of the lens, especially for large apertures, requires many samples for accuracy. Using too few samples increases the variance of the integral estimate, resulting in noisy images. The problem is exacerbated in Step 2 by the fact that you will fire many rays at the lens system that will not make it into the world because they hit the aperture stop. To drive down the noise, you can increase the number of samples (the "integer pixelsamples" parameter in the scene file). Using the lens data you gather in Step 3, you can also optimize the sampling of the lens system by trying to fire only rays that will actually make it through the lens. This will reduce the noise of your estimate and help it converge more quickly. If you formulate this as a form of importance sampling, it will reduce noise in your estimate without introducing bias.

- Q: It takes a long time to render just one picture. It's going to take forever to find the focal point using infinity-test.pbrt!

A: First, a hint: the focal point is between 60 and 70 mm for the double gauss lens, and between 200 and 220 mm for the telephoto lens.

Next, speed up your individual renders as much as possible. Try rendering just the center portion of the film (the bullseye target) and reducing the number of pixel samples. Finally, try batch-rendering a series of images at different film depths within the suggested range, and iterate around the closest pair. These skills in creating useful quick renders and batch jobs will stand you in good stead on the final project.

If you want a more satisfying solution, you could create a modified version of your camera plugin that simulates a tilted film plane. This is by no means required for the assignment. By rendering a single image with such a camera, you can read off the focal depth by correlating the position of sharpest focus in the image with the corresponding film depth. Such a tilted film plane is available in large-format view cameras, such as the one used by Ansel Adams. It is practically useful in that you can create pictures where arbitrary planes are in sharp focus, not just planes that are perpendicular to the axis of the lens as in traditional 35 mm cameras. For instance, you can take a picture facing the horizon where everything on the ground plane is in focus.

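- Q: How can the value that you read off the depth of field target ruler be equal to the depth? It's on a 45 degree angle!

A: Take a look at dof_target.pbrt. The ruler is scaled by sqrt(2) to allow you to read off the depth in this convenient way: on a 45 degree angle, moving a distance s along the ruler changes the depth by s/sqrt(2), so stretching the ruler by sqrt(2) makes each marking's value equal to its depth offset.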

- Q: What does it mean to "take a picture with a film depth that gives roughly unit magnification"?

A: Magnification varies with the film depth, as shown in the diagram above. A magnification of M means that lines 1 mm apart on the world-space plane of focus appear M mm apart on the film plane. You should measure the magnification on the images that you render. To do so, you need to know the size of the film in mm (defined by the "filmdiag" parameter in the scene file), and it is useful to know that the width of the ruler is 31.617 mm (see dof_target.pbrt).
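For the optional tilted-film experiment mentioned in this FAQ, the only new geometry is where each film sample sits. The minimal C++ sketch below assumes the film is rotated by tiltRadians about its vertical center line, and that s is a sample's signed position along the tilted film (mm from its center). The sample's lateral offset from the axis is then s · cos(tilt), and its distance behind the rear element is filmdistance + s · sin(tilt). FilmColumnToTilted maps a pixel column, such as the column where the target looks sharpest, to that depth. All names here are hypothetical; nothing in this block is part of the stub.

```cpp
#include <cassert>
#include <cmath>

// Position of a film sample on a tilted film plane (all values in mm).
struct TiltedFilmPoint {
    double lateral;  // offset from the optical axis, perpendicular to it
    double depth;    // distance behind the rear lens element
};

// s: signed position along the tilted film, measured from its center.
// centerDistance: the usual "filmdistance" at the film's center.
TiltedFilmPoint OnTiltedFilm(double s, double centerDistance, double tiltRadians) {
    return {s * std::cos(tiltRadians), centerDistance + s * std::sin(tiltRadians)};
}

// Maps pixel column `column` (0 .. xRes-1) of an image whose film is
// filmWidthMm wide (measured along the film) to its point on the tilted film.
TiltedFilmPoint FilmColumnToTilted(int column, int xRes, double filmWidthMm,
                                   double centerDistance, double tiltRadians) {
    assert(xRes > 0 && column >= 0 && column < xRes);
    double s = ((column + 0.5) / xRes - 0.5) * filmWidthMm;  // pixel center
    return OnTiltedFilm(s, centerDistance, tiltRadians);
}
```

The depth returned for the sharpest column is then the film depth that brings the target into focus. A zero tilt reduces to an ordinary flat film at centerDistance.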

Miscellaneous Data

You can replace the cones in the rendered scenes with the dragons shown in Figures 6.6-6.9 in the PBRT book. Just download the dragon.pbrt scene from /usr/class/cs348b/files/geometry, and then comment out the appropriate lines in the hw3 directory scenes. Be warned that this requires a fair amount of memory to render.

Grading

** The assignment passes all or almost all our tests.

* Substantial work was put in, but the assignment didn't pass all our tests.

0 Little or no work was done on the assignment.

*** Extra credit may be given on a case-by-case basis for well-done extensions (for any part of the project) that produce superior results.

Copyright © 2004 Pat Hanrahan