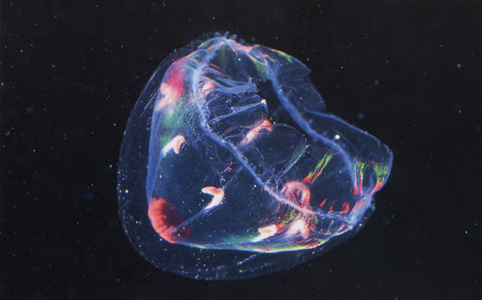

Bioluminescing Jellyfish, taken from Jellies: Living Art.

CS 348B Final Project: Jellyfish

By Brendan Dixon and Walter Shen

Our goal in this project was to render a bioluminescent jellyfish, similar to the one depicted above.


Our original proposal was to render a glow-in-the-dark bunny. The proposal can be found at http://www.stanford.edu/~potato/348Project/Glow.html.

Because our original proposal was turned down, we got a somewhat late start: we had to reformulate both a goal and an implementation plan to reach it. Since we liked glowing animals, it was suggested that we attempt to render a bioluminescent jellyfish. This project would involve implementing volume rendering, which would let us scatter light through both the glowing and the non-glowing parts of the jellyfish.

It took us approximately a week to gather papers, books, and other resources in an attempt to understand volume rendering and to find the best way to implement it. Some resources we consulted were Henrik Wann Jensen's Realistic Image Synthesis Using Photon Mapping and Nelson Max's Optical Models for Direct Volume Rendering. We also spent a significant amount of time looking through LRT's existing volume rendering classes.

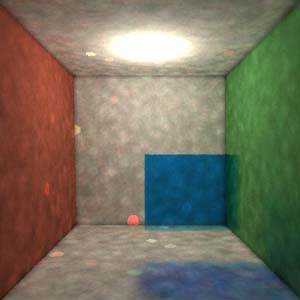

We used the Cornell box with LRT's built-in homogeneous region class to take our first look at volume rendering. The box on the left absorbs red and green light. The box on the right emits blue light.
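These two boxes isolate absorption and emission. In a homogeneous region the coefficients are constant, so along a path of length d the optical depth is tau = sigma_t * d and the transmittance per color channel is exp(-tau) (the Beer-Lambert law). Below is a minimal Python sketch of that calculation, not LRT's code. It assumes RGB tuples, emission given as radiance per unit length, and no in-scattering. `HomogeneousMedium` and the coefficient values are hypothetical.

```python
import math

# Hypothetical stand-in for a homogeneous region: the same coefficients
# everywhere inside its bounds. Each value is an (R, G, B) tuple per unit length.
class HomogeneousMedium:
    def __init__(self, sigma_a, sigma_s, Le):
        self.sigma_a = sigma_a  # absorption coefficient
        self.sigma_s = sigma_s  # scattering coefficient
        self.Le = Le            # emission per unit length (assumed convention)

    def sigma_t(self):
        # Extinction coefficient: sigma_t = sigma_a + sigma_s
        return tuple(a + s for a, s in zip(self.sigma_a, self.sigma_s))

    def attenuate(self, L_in, d):
        """Radiance leaving a segment of length d, given radiance L_in entering it.

        Models absorption/out-scattering and emission only; in-scattering is ignored.
        """
        out = []
        for L, st, le in zip(L_in, self.sigma_t(), self.Le):
            T = math.exp(-st * d)  # transmittance, with optical depth tau = st * d
            # Emission gathered along the segment: integral of le * exp(-st * s), s in [0, d]
            emitted = le * (1.0 - T) / st if st > 0.0 else le * d
            out.append(L * T + emitted)
        return tuple(out)


white = (1.0, 1.0, 1.0)
# Left box: absorbs red and green, so blue passes through unchanged.
absorber = HomogeneousMedium((2.0, 2.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0))
# Right box: absorbs nothing but adds blue emission.
emitter = HomogeneousMedium((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.5))

print(absorber.attenuate(white, 1.0))  # red and green drop to exp(-2), about 0.135
print(emitter.attenuate(white, 1.0))   # (1.0, 1.0, 1.5)
```

Giving each channel its own coefficient is all it takes to get the colored looks of the two boxes: a channel with zero extinction passes through untouched, while a channel with nonzero emission gains radiance in proportion to the distance traveled.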

When we actually started coding, we decided to take LRT's current volume rendering classes and extend them. This involved building a new volume rendering class that would support absorption, emission, single scattering, and multiple scattering. (LRT's most complex volume rendering class currently supports only a scaled-down version of the first three.) More complex volume shapes would also have to be implemented; our main one would be a volume defined by a NURBS surface. After some progress in this direction, we abandoned it in favor of a different model inspired by Jensen's book, because we realized that it would be more efficient.
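For reference, the four effects listed above all appear in the integral form of the volume rendering equation along a single camera ray. The notation here is our own sketch of the general form treated in texts such as Max's and Jensen's, not a formula taken from LRT. Let $\mathbf{x}_s$ be the point at distance $s$ from the camera at $\mathbf{x}_0$, and let $d$ be the distance to the surface behind the volume. $L_e$ is taken as emitted radiance per unit length (some texts write the emission term as $\sigma_a L_e$ instead), and directions are suppressed in $L_e$ and $L_i$:

$$
L(\mathbf{x}_0) = T(0,d)\,L_{\text{surf}}(\mathbf{x}_d) + \int_0^d T(0,s)\,\big[L_e(\mathbf{x}_s) + \sigma_s(\mathbf{x}_s)\,L_i(\mathbf{x}_s)\big]\,ds
$$

$$
T(0,s) = e^{-\tau(0,s)}, \qquad \tau(0,s) = \int_0^s \sigma_t(\mathbf{x}_u)\,du, \qquad \sigma_t = \sigma_a + \sigma_s
$$

$$
L_i(\mathbf{x}_s) = \int_{S^2} p(\omega',\omega)\,L(\mathbf{x}_s,\omega')\,d\omega'
$$

Single scattering restricts the incoming radiance in $L_i$ to direct light from the light sources. Multiple scattering uses the full radiance field, which is the quantity a volume photon map estimates. The phase function $p$ is typically a Henyey-Greenstein function whose asymmetry parameter is $g$.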

As a result, our goals became (1) to extend shapes in LRT so that they could act as volumes while keeping their existing ability to handle surface interactions, and (2) to integrate volume rendering into the photon map. Brendan worked on part (1) and Walter worked on part (2), but we both also spent a lot of time debugging the project as a whole after we put it together.

To extend the NURBS shape in LRT to support volumes, volume-related values such as sigma_a, sigma_s, g, and Le are stored as fields in the class. Accessor functions for those values, as well as for derived values such as tau and sigma_t, are added to the NURBS class. A mesh of triangles (produced by the NURBS refine function) is also stored and used to compute intersections and to determine whether a point is inside the volume. An array of bounding boxes (one for each group of about 30 triangles) is also stored to speed up the intersection tests, which made rendering about 4x faster.
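The write-up does not say exactly how the triangle mesh answers "is this point inside the volume?". One common approach, assumed here, is to cast a ray from the point and count how many triangles it crosses: for a closed mesh an odd count means inside. The sketch below, in Python rather than LRT's C++, also groups triangles into chunks with one bounding box each, so a single slab test can reject a whole chunk at once. `TriangleVolume`, `chunk_size`, and the default probe direction are hypothetical names and choices, and the unit cube stands in for a refined NURBS mesh.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(o, d, tri, eps=1e-12):
    """Moller-Trumbore test: return the hit distance t > eps, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(o, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None

def ray_hits_box(o, d, lo, hi):
    """Slab test: does the ray o + t*d (t >= 0) pass through the box [lo, hi]?"""
    t0, t1 = 0.0, math.inf
    for i in range(3):
        if d[i] == 0.0:
            if o[i] < lo[i] or o[i] > hi[i]:
                return False
            continue
        ta, tb = (lo[i] - o[i]) / d[i], (hi[i] - o[i]) / d[i]
        t0, t1 = max(t0, min(ta, tb)), min(t1, max(ta, tb))
        if t0 > t1:
            return False
    return True

class TriangleVolume:
    """Hypothetical mesh-backed volume with one bounding box per chunk of triangles."""

    def __init__(self, triangles, chunk_size=30):
        self.chunks = []
        for i in range(0, len(triangles), chunk_size):
            group = triangles[i:i + chunk_size]
            pts = [v for tri in group for v in tri]
            lo = tuple(min(p[k] for p in pts) for k in range(3))
            hi = tuple(max(p[k] for p in pts) for k in range(3))
            self.chunks.append((lo, hi, group))

    def crossings(self, o, d):
        """Count triangle hits along the ray, skipping chunks whose box it misses."""
        n = 0
        for lo, hi, group in self.chunks:
            if ray_hits_box(o, d, lo, hi):
                n += sum(1 for tri in group if ray_triangle(o, d, tri) is not None)
        return n

    def inside(self, point, direction=(0.31, 0.47, 0.83)):
        # Odd crossing count means inside a closed mesh. The skewed direction
        # makes it unlikely that the ray grazes an edge or vertex exactly.
        return self.crossings(point, direction) % 2 == 1

def unit_cube():
    """12 triangles covering the surface of [0, 1]^3."""
    c = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    quads = [(0, 1, 3, 2), (4, 6, 7, 5), (0, 4, 5, 1),
             (2, 3, 7, 6), (0, 2, 6, 4), (1, 5, 7, 3)]
    return [tri for a, b, e, f in quads
            for tri in ((c[a], c[b], c[e]), (c[a], c[e], c[f]))]

vol = TriangleVolume(unit_cube(), chunk_size=2)
print(vol.inside((0.5, 0.5, 0.5)))   # True: one crossing
print(vol.inside((0.5, 0.5, -0.5)))  # False: the ray enters and exits
```

The parity test is only as reliable as the mesh is watertight. A crack in the refined surface, or a ray that passes exactly through a shared edge, can flip the answer, which is why the probe direction is deliberately skewed.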

To integrate volume rendering into the photon map classes, we added two main pieces. The first was a volume scattering map, which stored the interactions that resulted from multiple scattering of light. Along with this map, we made light traveling through volumes lose energy to absorption and pick up particle emission. The second piece was a ray marching algorithm used while rendering the scene. Each camera ray was first traced to the back of the scene (to the first surface it hit, or to its last intersection with a volume object if there was no surface). The ray was then followed from that surface or last intersection back to the camera, using ray marching as it passed through the volumes.
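The back-to-front march just described can be sketched for one color channel as follows. This is a minimal Python illustration under stated assumptions, not the project's LRT code. The medium is described by hypothetical callables of distance along the ray. `in_scatter` is a hypothetical hook standing in for sigma_s times the in-scattered radiance, for example an estimate from a volume photon map. Coefficients are treated as constant within each step.

```python
import math

def ray_march(L_surface, t_far, sigma_t, emission, in_scatter, step=0.01):
    """Back-to-front ray march along one camera ray, for one color channel.

    Starts with the radiance L_surface found at distance t_far (the first surface,
    or the last volume intersection when there is no surface) and walks back to
    the camera at t = 0. Each callable takes a distance t along the ray:
      sigma_t(t)    extinction coefficient (sigma_a + sigma_s)
      emission(t)   emitted radiance per unit length
      in_scatter(t) in-scattered radiance per unit length (hypothetical hook,
                    e.g. sigma_s times a volume photon map estimate)
    """
    L = L_surface
    n = max(1, math.ceil(t_far / step))
    dt = t_far / n
    for i in range(n - 1, -1, -1):   # farthest segment first
        t = (i + 0.5) * dt           # midpoint of segment i
        st = sigma_t(t)
        T = math.exp(-st * dt)       # transmittance across this segment
        source = emission(t) + in_scatter(t)
        # Attenuate what is behind, then add this segment's own contribution
        # (exact for a source and extinction that are constant over the segment).
        L = L * T + (source * (1.0 - T) / st if st > 0.0 else source * dt)
    return L

# A glowing slab of depth 2 in front of a surface with radiance 1.0:
print(ray_march(1.0, 2.0, lambda t: 1.0, lambda t: 0.25, lambda t: 0.0))  # about 0.3515
```

Marching back to front means each step only has to attenuate a running radiance value and add the local contribution. A front-to-back march would instead carry the accumulated transmittance toward the camera, which has the advantage of allowing the loop to stop early once that transmittance becomes negligible.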

We encountered several other challenges that were not mentioned above. One was understanding jellyfish anatomy. There are many different kinds of jellyfish with hard-to-distinguish body parts, and it was difficult to figure out whether the shining parts of their bodies were due to outside light (flash photography) or to bioluminescence. Another challenge was that it was very difficult to keep our versions of LRT in sync. On several occasions, even though we believed we had exactly the same versions of the files, one person's LRT would render fine and the other's would not.

Some difficult bugs, some of which we didn't have time to resolve, came up in the interaction between the volume photon mapper and the NURBS-as-volume intersection method. Because the NURBS shape builds a triangle mesh to compute intersections, the hits returned by scene->Intersect could often be traced back to these triangles, which were not recognized as volumes. This often caused volumes either to go unrecognized or to be temporarily treated as surfaces (making them completely black).

Renderings from various stages of our project are shown below. An interesting thing to note about a few of the images is that disabling the “final gather” option in the photon map actually produced effects that resembled the diffusion of light in water.

Here are our source files and .rib. Because we had bugs up to the very end and were constantly modifying the same .rib file, the code is not guaranteed to work quite right or to reproduce the intermediate results shown below, but it should give a flavor of what we were going for.

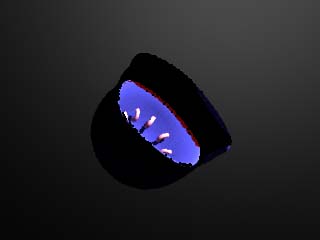

Here's a look at a solid version of our model and a cross section of it showing some of the stuff we tried to put inside. The picture on the left was one of our attempts at making the outer NURBS surface into a volume and rendering it with our volume-enabled photon map, but bugs in the ray tracing and intersection detection made it come out strangely.

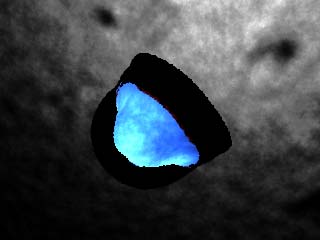

Here are some images from after we got the outer volume to let light through. There was still a bug, however, that caused the volumes to look black when they weren't in front of anything.

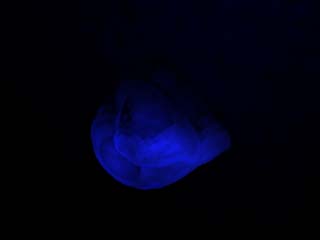

These, probably our best-looking pictures, are the result of our project when it was half done: only the NURBS-as-volume part was included, and we weren't yet using our own photon map to do the volume rendering. Only absorption is happening here, so the things in the middle aren't visible: the outer part absorbs red light, and the middle parts are red.