Assignment 3: Camera Simulation

Due: Friday May 8th, 11:59PM

Please add a link to your final writeup on Assignment3Writeups.

Description

Most rendering systems generate images where the entire scene is in sharp focus, thus mimicking the effect of a pinhole camera. In contrast, real cameras contain multi-lens assemblies with different imaging characteristics such as limited depth of field, field distortion, vignetting and spatially varying exposure. In this assignment, you'll extend pbrt with support for a more realistic camera model that accurately simulates these effects. Specifically, we will provide you with descriptions of real wide-angle, normal and telephoto lenses, each composed of multiple lens elements. You will build a camera plugin for pbrt that simulates the traversal of light through these lens assemblies onto the film plane of a virtual camera. With this camera simulator, you'll explore the effects of focus, aperture and exposure. Once you have a working camera simulator, you will add simple auto-focus capabilities to your camera.

Step 1: Background Reading

Before beginning this assignment you should read the paper "A Realistic Camera Model for Computer Graphics" by Kolb, Mitchell, and Hanrahan. This paper is one of the assigned course readings. You should also review parts of Chapter 6 in pbrt.

Step 2: Getting Up and Running

Starter code and data files for Assignment 3 are located at http://graphics.stanford.edu/courses/cs348b-07/assignment3/assignment3.zip.

Step 3: Set Up the Camera

Notice that the pbrt scenes in this assignment specify that rendering should use the "realistic" camera plugin that you are implementing. The realistic camera accepts a number of parameters defined in the pbrt scene file. The most important of these are: the name of a lens data file, the distance between the film plane and the location of the back lens element (the one closest to the film), the diameter of the aperture stop, and the length of the film diagonal (the distance from the top left corner to the bottom right corner of the film). The values of these parameters are passed to the constructor of the RealisticCamera class. All values in both the pbrt file and the lens data file are in units of millimeters.

Camera "realistic"

"string specfile" "dgauss.50mm.dat"

"float filmdistance" 36.77

"float aperture_diameter" 17.1

"float filmdiag" 70

The .dat files included with the starter code describe camera lenses using the format described in Figure 1 of the Kolb paper. Your RealisticCamera constructor should read the specified lens data file. In pbrt, the viewing direction is the positive z-direction in camera space. Therefore, your camera should be looking directly down the z-axis. The first lens element listed in the file (the lens element closest to the world, and farthest from the film plane) should be located at the origin in camera space with the rest of the lens system and film plane extending in the negative-z direction.

Each line in the file contains the following information about one spherical lens interface.

lens_radius z_axis_intercept index_of_refraction aperture

More precisely:

lens_radius: the spherical radius of the element.

z_axis_intercept: the thickness of the element. That is, the distance along the z-axis (in the negative direction) that separates this element from the next.

index_of_refraction: the index of refraction on the camera side of the interface.

aperture: the aperture of the interface. Rays that hit the interface farther than this distance from the optical axis (the z-axis) do not make it through the lens element.

Note that exactly one of the lines in the data file will have lens_radius = 0. This is the aperture stop of the camera. Its maximum size is given by the aperture value on this line. Its actual size is specified as a parameter to the realistic camera via the pbrt scene file. Also note that the index of refraction on the world side of the first lens element is 1 (it's air).
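As a rough illustration of reading this format, the sketch below parses each line into a record and accumulates the z position of each interface, placing the first one at the camera-space origin and stepping in the negative-z direction. The names LensInterface, zVertex and ParseLensData are hypothetical and not part of the starter code. Skipping any line that does not begin with four numbers (for example a comment line) is an assumption about the data files, not something specified above.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical record for one line of the lens data file (all lengths in mm).
struct LensInterface {
    float radius;     // lens_radius; 0 marks the aperture stop
    float thickness;  // z_axis_intercept: distance along -z to the next interface
    float ior;        // index of refraction on the camera (film) side
    float aperture;   // aperture value exactly as given in the file
    float zVertex;    // camera-space z where this interface crosses the z-axis
};

// Reads interfaces in file order (world side first, film side last).
std::vector<LensInterface> ParseLensData(std::istream& in) {
    std::vector<LensInterface> lenses;
    std::string line;
    float z = 0.f;  // the first interface sits at the camera-space origin
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        LensInterface li{};
        // Assumption: lines that do not start with four numbers are skipped.
        if (!(fields >> li.radius >> li.thickness >> li.ior >> li.aperture))
            continue;
        li.zVertex = z;
        z -= li.thickness;  // the next interface lies further along -z
        lenses.push_back(li);
    }
    return lenses;
}
```

In a real constructor you would pass a std::ifstream opened on the specfile parameter. After parsing, the film plane would sit filmdistance behind the zVertex of the last interface.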

Step 4: Generate Camera Rays

You now need to implement the RealisticCamera::GenerateRay function. GenerateRay takes a sample position in image space (given by sample.imageX and sample.imageY) as an argument and should return a random ray from the camera out into the scene. To the rest of pbrt, your camera appears as any other camera. Given a sample position it returns a ray from the camera out into the world.

- Compute the position on the film plane that the ray intersects from the values of sample.imageX and sample.imageY.

- The color of a pixel in the image produced by pbrt is proportional to the irradiance incident on a film pixel (think of the film as a sensor in a digital camera). This value is an estimate of all light reaching this pixel from the world and through all paths through the lens. As stated in the paper, computing this estimate involves sampling this set of paths. The easiest way to sample all paths is to fire rays at the back element of the lens and trace them out of the camera. Note that some of these rays will hit the aperture stop and terminate before exiting the front of the lens.

- GenerateRay returns a weight for the generated ray. The radiance incident along the ray from the scene is modulated by this weight before adding the contribution to the Film. You will need to compute the correct weight to ensure that the irradiance estimate produced by pbrt is unbiased. That is, the expected value of the estimate is the actual value of the irradiance integral. Note that the weight depends upon the sampling scheme used.
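The sketch below illustrates one possible version of the first and last steps; it is not the required solution. RasterToFilm maps a raster sample to a film point in millimeters, assuming square pixels and a film centered on the z-axis. BackDiskWeight gives the weight for one particular scheme: choosing a point uniformly on a disk of area A that is parallel to the film at axial distance Z, for which the estimator weight is A cos^4(theta) / Z^2. The types and function names are hypothetical, and a different sampling scheme needs a different weight.

```cpp
#include <cmath>

struct Point3 { float x, y, z; };  // hypothetical stand-in for pbrt's Point

// Maps a raster position to camera space on the film plane (units: mm).
// Assumes square pixels and a film centered on the z-axis. Because a lens
// inverts the image, one or both axes may need to be negated to match
// pbrt's image orientation; check this against a rendered image.
Point3 RasterToFilm(float imageX, float imageY, int xRes, int yRes,
                    float filmDiag, float filmZ) {
    float diagPixels = std::sqrt(float(xRes) * xRes + float(yRes) * yRes);
    float mmPerPixel = filmDiag / diagPixels;
    float x = (imageX - 0.5f * xRes) * mmPerPixel;
    float y = (imageY - 0.5f * yRes) * mmPerPixel;
    return Point3{x, y, filmZ};
}

// Weight for a point chosen uniformly (pdf = 1/A) on a disk of radius
// diskRadius that is parallel to the film and contains lensPt.
float BackDiskWeight(const Point3& film, const Point3& lensPt,
                     float diskRadius) {
    const float kPi = 3.14159265358979f;
    float dx = lensPt.x - film.x;
    float dy = lensPt.y - film.y;
    float dz = lensPt.z - film.z;  // axial distance Z from film to disk
    if (dz <= 0.f) return 0.f;     // the disk must lie in front of the film
    float cosTheta = dz / std::sqrt(dx * dx + dy * dy + dz * dz);
    float area = kPi * diskRadius * diskRadius;
    float cos2 = cosTheta * cosTheta;
    return area * cos2 * cos2 / (dz * dz);
}
```

The lens point itself could come from ConcentricSampleDisk() scaled by the disk radius. Rays that are later blocked inside the lens would still count as samples, with a weight of 0.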

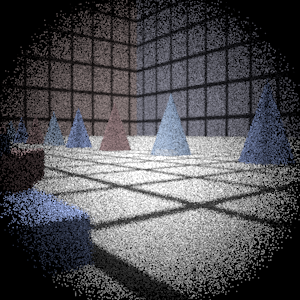

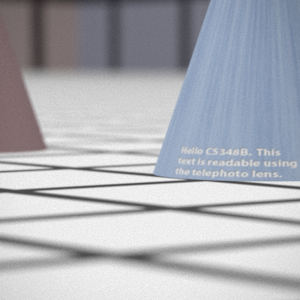

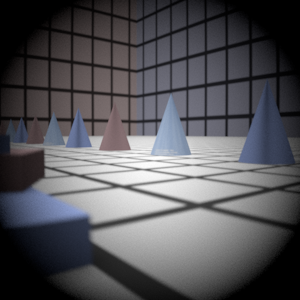

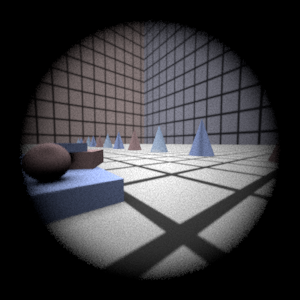

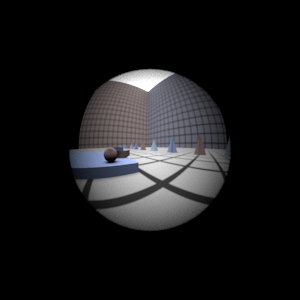

Render each of the four scenes (hw3_dgauss.pbrt, hw3_wide.pbrt, hw3_fisheye.pbrt, hw3_telephoto.pbrt) using your realistic camera simulator. Example images rendered with 512 samples per pixel are given below. From left to right: telephoto, normal, wide angle and fisheye. Notice that the wide-angle image is especially noisy. Why is that? Hint: look at the ray traces at the top of this web page.

4 samples per pixel

512 samples per pixel

Hints

- ConcentricSampleDisk() is a useful function for converting two 1D uniform random samples into a uniform random sample on a disk. See p. 270 of the pbrt book.

- You'll need some data structure to store the information about each lens interface as well as the aperture stop. For each lens interface, you'll need to decide how to test for intersection and how rays refract according to the change of index of refraction on either side (review Snell's law).

- For rays that terminate at the aperture stop, return a ray with a weight of 0 -- pbrt tests for such a case and will terminate the ray instead of sending it out into the scene.

- Pay attention to the coordinate system used to represent rays. Confusion between world space and camera space can be a major source of bugs.

- As is often the case in rendering, your code won't produce correct images until everything is working just right. Try to think of ways that you can modularize your work and test as much of it as possible incrementally as you go. Use assertions liberally to try to verify that your code is doing what you think it should at each step. It may be worth your time to produce a visualization of the rays refracting through your lens system as a debugging aid (compare to those at the top of this web page).
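The building blocks mentioned in these hints can be sketched in isolation, which also makes them easy to test on their own. The code below is a minimal sketch, not pbrt code: Vec3 and all function names are hypothetical. It assumes ray directions and normals are unit length and that the normal passed to Refract points against the incoming ray. Choosing which of the two sphere hits is the real lens surface depends on the sign of the radius and the direction of travel, and is left to you.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };  // hypothetical minimal vector type

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Ray/sphere intersection for a unit-length dir. Returns both ray
// parameters (tNear <= tFar); the caller picks the one on the lens surface.
bool IntersectSphere(Vec3 origin, Vec3 dir, Vec3 center, float radius,
                     float* tNear, float* tFar) {
    Vec3 oc = origin - center;
    float b = Dot(oc, dir);
    float c = Dot(oc, oc) - radius * radius;
    float disc = b * b - c;
    if (disc < 0.f) return false;  // the ray misses the sphere
    float s = std::sqrt(disc);
    *tNear = -b - s;
    *tFar = -b + s;
    return true;
}

// Snell's law in vector form. eta = n_incident / n_transmitted.
// Returns false on total internal reflection.
bool Refract(Vec3 dir, Vec3 normal, float eta, Vec3* refracted) {
    float cosI = -Dot(normal, dir);
    float sin2T = eta * eta * (1.f - cosI * cosI);
    if (sin2T > 1.f) return false;
    float cosT = std::sqrt(1.f - sin2T);
    *refracted = eta * dir + (eta * cosI - cosT) * normal;
    return true;
}

// Aperture stop test: intersect the plane z = zStop and compare the hit
// point's distance from the z-axis with half the aperture diameter.
bool PassesApertureStop(Vec3 origin, Vec3 dir, float zStop,
                        float apertureDiameter) {
    if (dir.z == 0.f) return false;  // parallel to the stop plane
    float t = (zStop - origin.z) / dir.z;
    Vec3 p = origin + t * dir;
    float r = 0.5f * apertureDiameter;
    return p.x * p.x + p.y * p.y <= r * r;
}
```

A ray for which Refract or PassesApertureStop returns false is one that terminates inside the lens, which is the case where GenerateRay would return a weight of 0.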

Step N: Submission

We've created wiki pages (FirstnameLastname/Assignment3) for all students in the class. Access to these pages is set up so that only you can view your page. Please compose your writeup on this page and link to it from the Assignment3Writeups page.

Note that you can link to the images to display them on your wiki page using attachment:filename.


Grading

This assignment will be graded on a 4 point scale:

- 1 point:

- 2 points:

- 3 points:

- 4 points: