BundleFusion: Real-time Globally Consistent 3D Reconstruction using Online Surface Re-integration

Stanford University

2Max Planck Institute for Informatics

3Microsoft Research

Abstract

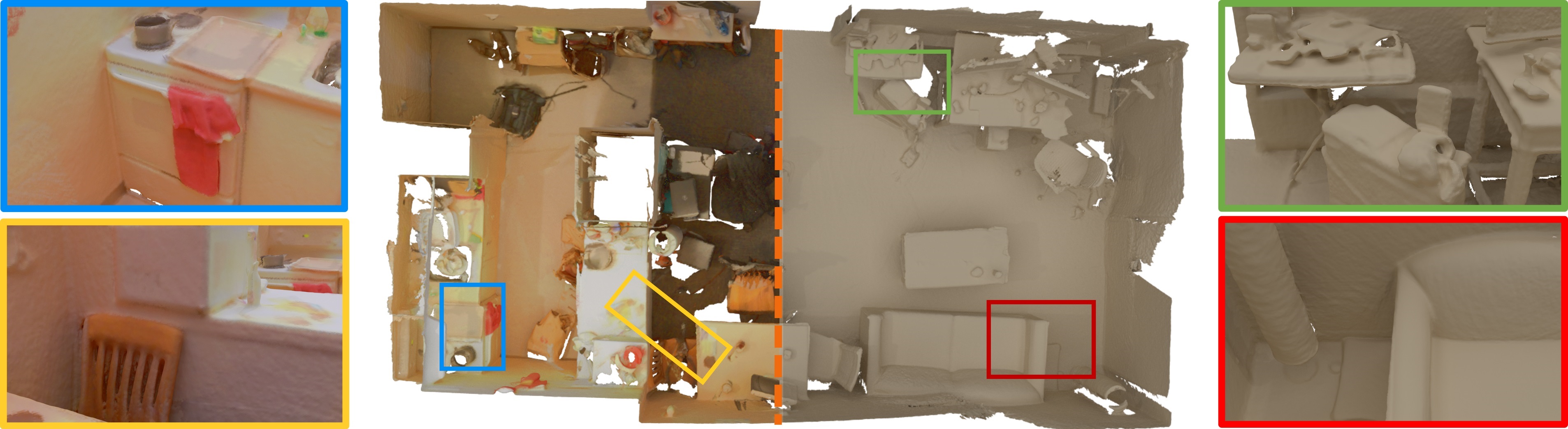

Real-time, high-quality, 3D scanning of large-scale scenes is key to mixed reality and robotic applications. However, scalability brings challenges of drift in pose estimation, introducing significant errors in the accumulated model. Approaches often require hours of offline processing to globally correct model errors. Recent online methods demonstrate compelling results, but suffer from: (1) needing minutes to perform online correction preventing true real-time use; (2) brittle frame-to-frame (or frame-to-model) pose estimation resulting in many tracking failures; or (3) supporting only unstructured point-based representations, which limit scan quality and applicability. We systematically address these issues with a novel, real-time, end-to-end reconstruction framework. At its core is a robust pose estimation strategy, optimizing per frame for a global set of camera poses by considering the complete history of RGB-D input with an efficient hierarchical approach. We remove the heavy reliance on temporal tracking, and continually localize to the globally optimized frames instead. We contribute a parallelizable optimization framework, which employs correspondences based on sparse features and dense geometric and photometric matching. Our approach estimates globally optimized (i.e., bundle adjusted poses) in real-time, supports robust tracking with recovery from gross tracking failures (i.e., relocalization), and re-estimates the 3D model in real-time to ensure global consistency; all within a single framework. We outperform state-of-the-art online systems with quality on par to offline methods, but with unprecedented speed and scan completeness. Our framework leads to as-simple-as-possible scanning, enabling ease of use and high-quality results.

Paper | Dataset | BibTeX citation | Slides | Source Code

Acknowledgements

We would like to thank Thomas Whelan for his help with ElasticFusion, and Sungjoon Choi for his advice on the Redwood system.We provide a dataset containing RGB-D data of 7 large scenes (60m average trajectory length, 5833 average number of frames). The RGB-D data was captured using a Structure.io depth sensor coupled with an iPad color camera. Please refer to the respective publication when using this data.

Format

Each sequence contains:- Color frames (frame-XXXXXX.color.jpg): RGB, 24-bit, JPG

- Depth frames (frame-XXXXXX.depth.png): depth (mm), 16-bit, PNG (invalid depth is set to 0)

- Camera poses (frame-XXXXXX.pose.txt): camera-to-world (invalid transforms -INF)

- Camera calibration (info.txt): color and depth camera intrinsics and extrinsics.

License

The data has been released under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License.

apt0 |

640x480 color640x480 depth |

apt0.zip (1.1GB)apt0.plyapt0.sens |

|

apt1 |

640x480 color640x480 depth |

apt1.zip (1.1GB)apt1.plyapt1.sens |

|

apt2 |

640x480 color640x480 depth |

apt2.zip (1.1GB)apt2.plyapt2.sens |

|

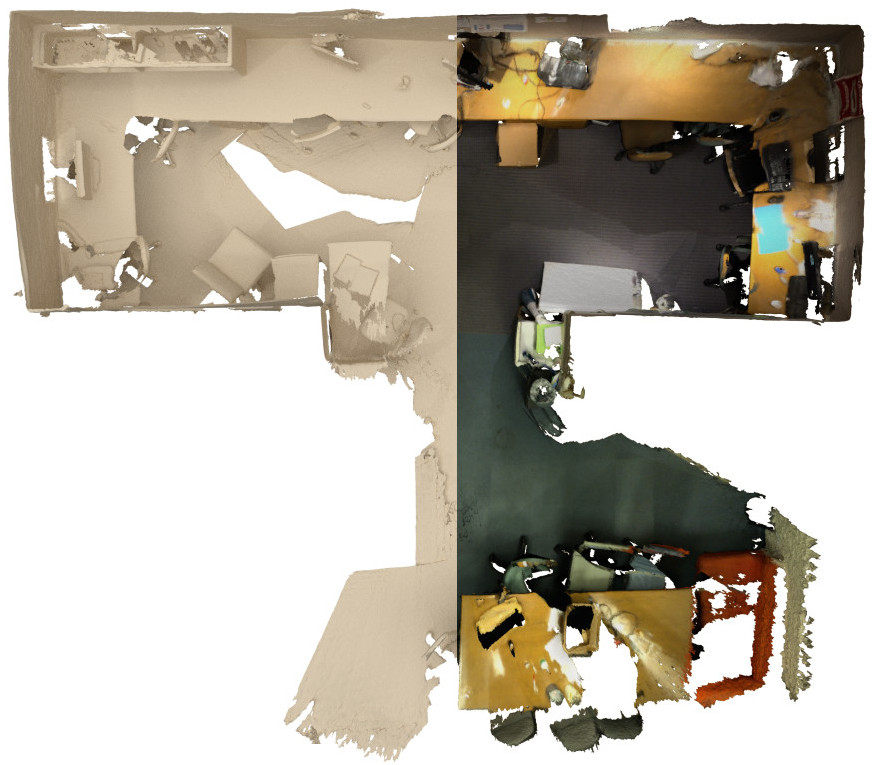

copyroom |

640x480 color640x480 depth |

copyroom.zip (520MB)copyroom.plycopyroom.sens |

|

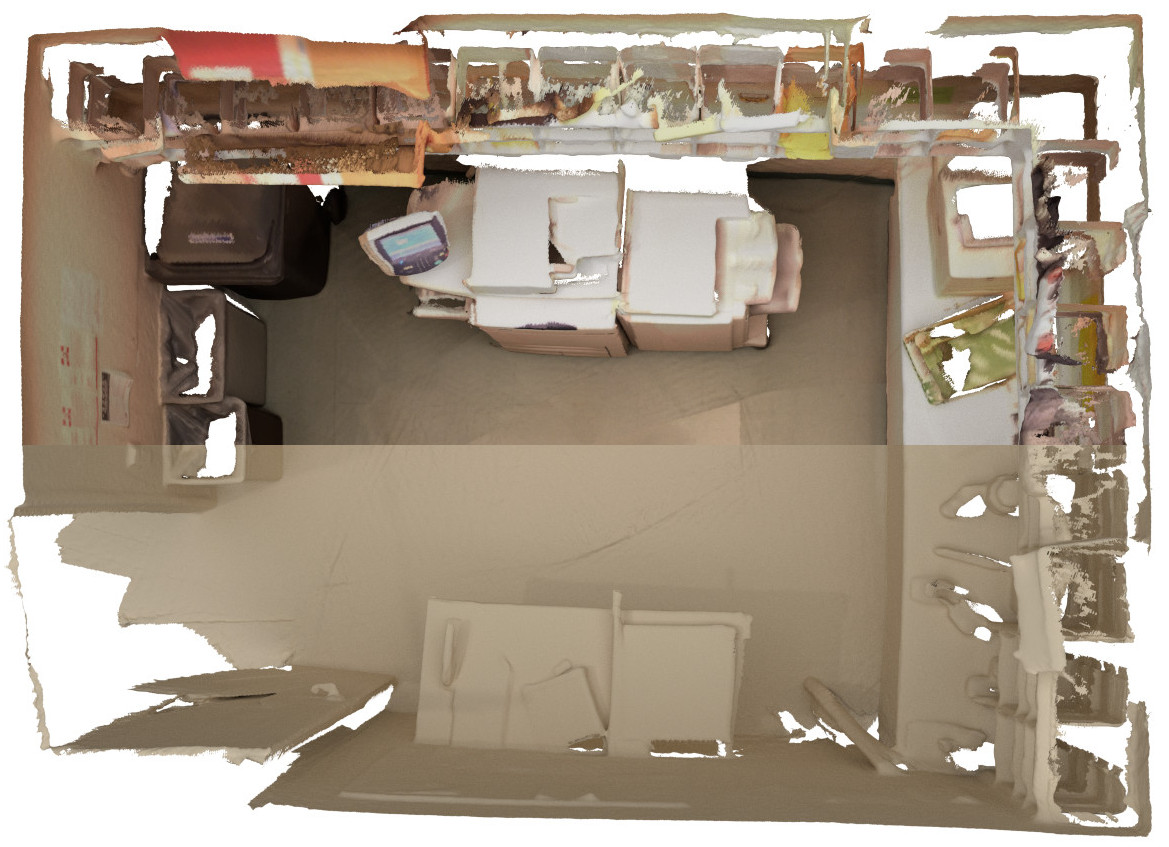

office0 |

640x480 color640x480 depth |

office0.zip (800MB)office0.plyoffice0.sens |

|

office1 |

640x480 color640x480 depth |

office1.zip (900MB)office1.plyoffice1.sens |

|

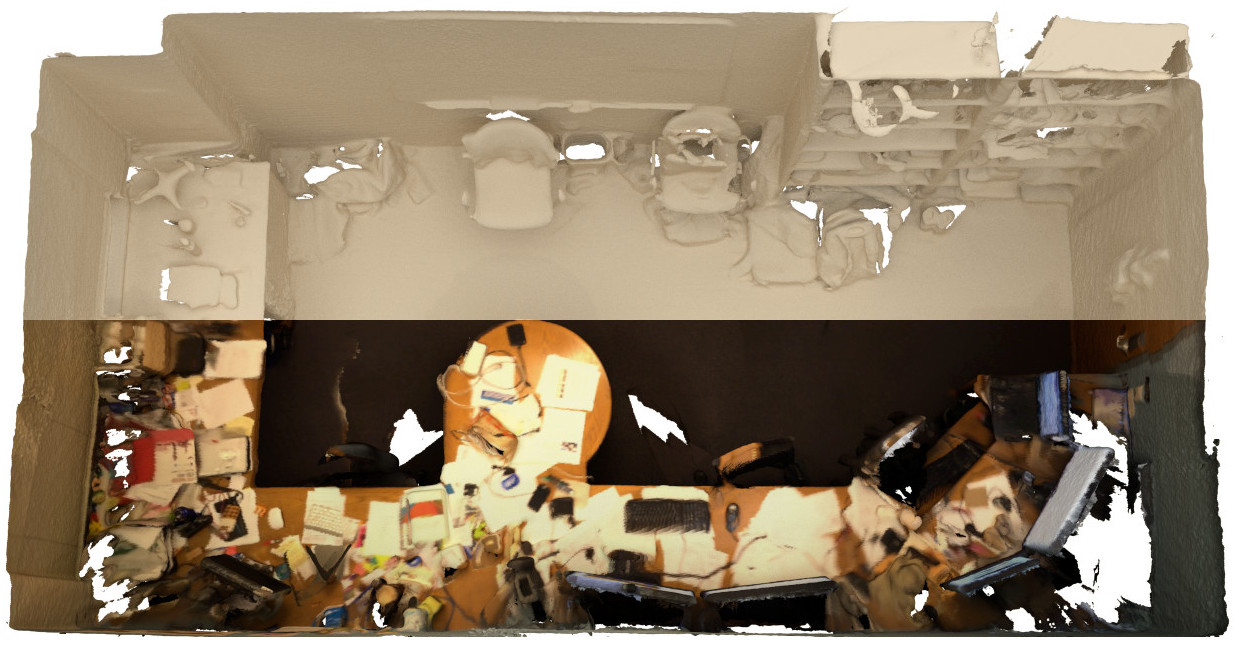

office2 |

640x480 color640x480 depth |

office2.zip (550MB)office2.plyoffice2.sens |

|

office3 |

640x480 color640x480 depth |

office3.zip (460MB)office3.plyoffice3.sens |

|