Assignment 5 - High Dynamic Range Photography

Due Date: Thursday February 22nd, 11:59PM

Questions? Check out the Assignment 5 FAQ and discussion page.

The Assignment 5 Camera Signup Page

Look near the bottom of the page for sample results of the church photo stack.

In this assignment you'll explore high dynamic range photography. You'll take your own photos, generate a high dynamic range image from them, and explore a couple of ways of displaying HDR images on low dynamic range displays. There are many steps to this assignment, but they are essentially independent. We list the steps in the logical order, but you may want to do later, simpler steps first to help your understanding. Don't feel overwhelmed by the number of steps: each one is relatively short, and the entire assignment can be done in about 100 lines of code.

Steps

Download and build the starter code

Begin by downloading the Assignment 5 starter code and the latest version of the libST source here. Note that you must use the new version of libST; it has been updated since the previous assignment.

- Build libST. Building libST should require the same steps on your development system as it did in previous assignments. A number of changes have been made to the code which are significant for this assignment. See the descriptions below for the full details of the changes, which you should look over and understand at a high level.

- Build the assignment 5 starter code. The subdirectory /assignment5 contains the starting code for your project. This directory should contain the C++ source file hdr.cpp. You will provide us your modified version of hdr.cpp as part of this assignment's handin. The directory also contains response.h, response.cpp, and some support code in the directory /tnt. You should understand the interface provided in response.h and response.cpp since you will use it in your code. You are not responsible for any of the /tnt code. Finally, we've provided d40.cr, a response curve file for the Nikon D40. If you use our cameras this file will work, but if you have your own camera you must generate your own camera response curve. See the instructions below for how to do that.

If you want to start working on the assignment before you get to take your own photos, download the sample photos and hdr images from here (small version). Another HDR stack can be found here (small version), but note that the images aren't stable. However, you should still be able to generate HDR images from them, albeit a bit blurry. Note that part of the assignment, and your grade, is taking your own photos. We are only providing these so you can get started sooner.

Understanding Changes to libST

There have been a few changes to libST, but you are already familiar with much of the code.

- STColor - This class has been updated with many overloaded operators and a couple of helper functions. Be familiar with what's available here. This is the representation we use for pixels in an HDR image, so most of your code will deal with this class.

- STHDRImage - This class is analogous to the STImage class and has almost equivalent functionality. The main difference is that each pixel is an STColor. STColors are floating point and can take on any valid float value. However, when displayed, they will be clamped to [0, 1]. We've added an operator()(int x, int y) so accessing pixels uses the simple notation hdrimage(x,y) (which returns an STColor&). Note the same operator has been implemented for STImage.

Review of Camera Imaging and HDR

The input to a camera is the radiance of the scene. Light enters the camera and passes through the lens system and aperture, producing some irradiance Ei at each pixel i. The camera can control how much energy actually hits the sensors by increasing/decreasing the shutter speed. By integrating the irradiance Ei over this shutter time dt we get the sensor exposure, Xi. The many steps from this point to producing an actual image (film exposure, development, digital conversion, remapping) act as a non-linear function which maps these input exposures in the range [0, infinity) to image values Zi in the range [0,255]. This function f(Xi) = Zi is called the camera response curve.

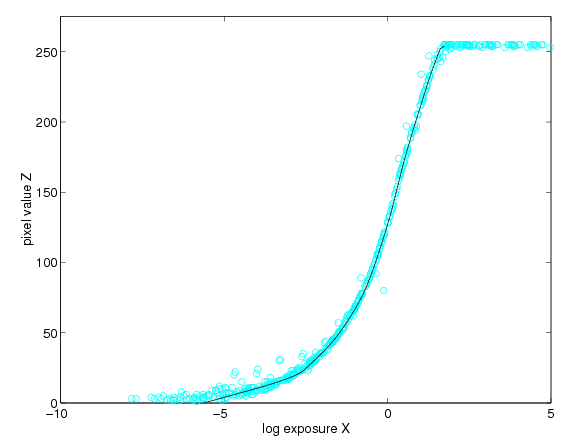

Our goal is to use some output images to get the input Xi values (and the Ei values). Therefore, we actually want to consider the inverse function f^-1(Zi) = Xi. Since the exposure is Xi = Ei*dt, substitution gives f^-1(Zi) = Ei*dt. Taking the natural logarithm we have ln(f^-1(Zi)) = ln(Ei) + ln(dt). We'll rename this function g(Zi) = ln(Ei) + ln(dt). This is the representation we'll use for the response curve (given a pixel value, what is the ln(exposure)?). Note that this represents exactly the same information; we've just adjusted the representation a bit. This is convenient for our purposes because different shutter speeds are equivalent to translations of this function.
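To make the translation property concrete, here is a minimal sketch. The function names are made up for this example and are not part of the starter code. It shows that moving between two shutter times changes ln(exposure) by the same amount for every pixel, whatever its irradiance.

```cpp
#include <cassert>
#include <cmath>

// ln(exposure) for irradiance E held over shutter time dt: ln(E) + ln(dt).
double log_exposure(double irradiance, double dt) {
    assert(irradiance > 0.0 && dt > 0.0);
    return std::log(irradiance) + std::log(dt);
}

// Shift in ln(exposure) when moving from shutter time dt_a to dt_b.
// The irradiance does not appear here, which is why changing the
// shutter speed translates the whole curve by a constant.
double log_exposure_shift(double dt_a, double dt_b) {
    assert(dt_a > 0.0 && dt_b > 0.0);
    return std::log(dt_b) - std::log(dt_a);
}
```

Doubling the shutter time (one stop) shifts every pixel's ln(exposure) by ln(2), regardless of how bright that pixel is.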

Here we show a sample response curve.

Answer These Questions

(Please include answers to the following with your handin)

Answer these questions before doing the assignment:

- What response do we get for exposure values beyond the ends of the response curve? What problem does this cause if we want to use the inverse?

- Why do we have to tone map HDR images before displaying them? What would they look like if we didn't?

Answer this question after doing the assignment:

- How well does the tone mapping work? Can you see everything you think you should be able to? Do you notice anything this tone mapping algorithm doesn't handle well?

Taking Photographs

We've purchased 2 Nikon D40 digital SLR cameras for use in this assignment. These cameras have manual control settings so you can fix the aperture and control the shutter speed. You'll want to work in pairs or groups of 3. Everybody must take their own photos, but working in groups makes it easier to figure out how to adjust the settings if you haven't used this type of camera before and work through any unexpected problems together. You can check out the cameras for 2 hours at a time. Think about where you're going to take your photos before picking up the camera.

If you have your own camera which provides sufficient control (i.e. you can fix the aperture, control shutter speed, and turn off any extra processing such as white balance), then you can use your own camera. In that case you'll need to recover the response curve for your camera. This can be done with the same set of images you take to create the HDR image; just follow the instructions listed below. Note that you'll probably also need a tripod to keep your shot steady. I've successfully created an HDR image without using a tripod, but the results are noticeably blurry.

Remember that your scene must be still while taking the pictures. Some small movements (trees swaying a bit) can be tolerated and generally shouldn't cause a problem. But trying to take pictures in the quad during midday is going to be difficult.

Remember that the entire point of HDR images is that the scene you're photographing has high dynamic range, so make sure you have both relatively dark and bright regions. Pictures of sunset work very well, especially with some clouds. Be creative, just be sure your scene actually requires multiple exposures to recover the HDR image.

Once you've found your scene, experiment with a couple of sample photos with various aperture and shutter speed settings. Once you settle on an aperture and focus, do not change them while taking your sequence of photos. The number of photographs you need and the shutter speeds to use will vary depending on the dynamic range of your scene. A good way to ensure good results is to make sure every pixel has at least one photo in which its response is in the range [64,192]. This means you want at least one with shutter speed fast enough that the scene is almost invisible - only the brightest objects are at half the brightest response. And you want one with shutter speed long enough that the image looks almost entirely washed out - only the darkest areas aren't white, but they are not black either. The number of photos in between is harder to determine, but increasing by 1 stop for each photo seems to work well.

You should keep track of the shutter speeds while taking the photos, but you can also retrieve the information from the JPEG's EXIF data. Once you have all your photos you can construct a photo stack list file using the format described below.

A note on the shutter speeds: it seems the reported speeds aren't always accurate. Specifically, if the shutter speed looks like it could be a power of 2, it probably is. For example, the 30 second exposure setting is actually 32 seconds long, and the 15 second exposure is actually 16 seconds.
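If you want to apply this rule of thumb consistently, a small helper like the following can do the rounding. This is only a sketch with made-up function names, and it assumes the setting really is a full-stop value (1/60, 1/125, 15, 30, ...). Settings in 1/3-stop increments are not powers of 2 and should not be snapped this way.

```cpp
#include <cassert>
#include <cmath>

// Snap a nominal full-stop shutter time (in seconds) to the nearest
// power of two, where "nearest" is measured in stops (log base 2).
// Assumption: the camera was set to a full-stop value.
double snap_to_power_of_two(double nominal_seconds) {
    assert(nominal_seconds > 0.0);
    const int exponent =
        static_cast<int>(std::lround(std::log2(nominal_seconds)));
    return std::ldexp(1.0, exponent);  // exactly 2^exponent
}

// Number of stops separating a fast and a slow shutter time.
// With one photo per stop, the stack needs about this many photos plus one.
double stops_between(double fast_seconds, double slow_seconds) {
    assert(fast_seconds > 0.0 && slow_seconds > 0.0);
    return std::log2(slow_seconds / fast_seconds);
}
```

For instance, a nominal 30 second exposure snaps to 32 seconds and a nominal 1/60 snaps to 1/64. A stack running from 1/64 s to 32 s spans 11 stops.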

File Formats Used In This Assignment

You should only need to edit the photo stack list files, but here's the information about all the formats used:

Photo Stack Lists are stored in .list files. Each line of this file is of the form <numerator> <denominator> <filename>. The first two parameters give the shutter time in seconds as a fraction. The last parameter is the name of the image file. Anything STImage supports will work here, but presumably you'll be using JPEGs.
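The starter code already reads these files for you, so the following is only an illustration of the format. It is a minimal standalone sketch whose names (StackEntry, parse_stack_list) are invented for this example. It assumes filenames contain no spaces and silently skips lines that don't match the pattern.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One line of a .list file: shutter time in seconds plus the image name.
struct StackEntry {
    double shutter_seconds;
    std::string filename;
};

// Parse lines of the form "<numerator> <denominator> <filename>".
// For example, "1 64 dark.jpg" means a 1/64 second exposure and
// "32 1 bright.jpg" means a 32 second exposure (filenames hypothetical).
std::vector<StackEntry> parse_stack_list(std::istream& in) {
    std::vector<StackEntry> entries;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        double numerator = 0.0;
        double denominator = 0.0;
        std::string name;
        // Skip blank or malformed lines and zero denominators.
        if (!(fields >> numerator >> denominator >> name) || denominator == 0.0) {
            continue;
        }
        entries.push_back({numerator / denominator, name});
    }
    return entries;
}
```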

Camera response curves are stored in .cr files. The file format is just a text dump of the 256 curve values, one for each color. See the CameraResponse class for details.

HDR Images use the Portable FloatMap (PFM) file format. This is an extremely simple format and doesn't support compression, so resulting files can be very large.
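You should not need to write PFM files yourself, but seeing how little there is to the format may help when debugging. The sketch below follows the format as it is commonly described: a short text header ("PF", then width and height, then a scale factor whose sign gives the byte order) followed by raw 32-bit floats, three per pixel, with rows stored bottom to top. The function names are made up for this example, and the reader and writer in the provided code remain the authority on what the assignment actually expects.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <ostream>
#include <sstream>
#include <vector>

static_assert(sizeof(float) == 4, "PFM stores 32-bit floats");

// True when the machine stores the low-order byte first.
bool host_is_little_endian() {
    const std::uint16_t probe = 1;
    unsigned char first_byte = 0;
    std::memcpy(&first_byte, &probe, 1);
    return first_byte == 1;
}

// Write a color PFM. Assumption: rgb already holds width*height*3 floats
// in PFM order (bottom row first, left to right, R then G then B).
void write_pfm(std::ostream& out, int width, int height,
               const std::vector<float>& rgb) {
    assert(width > 0 && height > 0);
    assert(rgb.size() == static_cast<std::size_t>(width) * height * 3);
    // A negative scale marks little-endian data, a positive one big-endian.
    out << "PF\n" << width << ' ' << height << '\n'
        << (host_is_little_endian() ? "-1.0" : "1.0") << '\n';
    out.write(reinterpret_cast<const char*>(rgb.data()),
              static_cast<std::streamsize>(rgb.size() * sizeof(float)));
}
```

When writing to a real file, open the stream with std::ios::binary. The payload is 12 bytes per pixel, so a 3000 x 2000 image takes 72,000,000 bytes before the header.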

Optional: Recovering the Camera Response Curve

If you're using your own camera you'll need to recover the camera response curve. If you're using the D40s, you do not need to perform this step, we've already provided d40.cr with this curve.

We've provided the code for this step. Once you've compiled the skeleton code and have your photo stack list, you can find your camera response curve using the following command:

hdr -response photos.list cameraresponse.cr

which takes the list of photos in photos.list and generates the cameraresponse.cr file. This is accomplished by solving a linear least squares problem. It's a good idea to open and plot the response curve in Excel to make sure your curve looks right and is smooth enough.

Creating an HDR Image

An HDR image is just the irradiance values Ei at each pixel due to the scene. In other words, an HDR image represents the actual power incident on each sensor, before any of the camera's effects after the lens and aperture have taken place. Assume we have the g(Zi) representation of the response curve and just a single input image with known shutter speed dt. Then we could simply rearrange the response curve formula to find Ei:

g(Zi) = ln(Ei) + ln(dt)

ln(Ei) = g(Zi) - ln(dt)

Ei = exp( g(Zi) - ln(dt) )

So why can't we just use a single photograph? The response curve can't give pixel values less than 0 or greater than 255, so all exposures above a certain level will result in a response of 255 and similarly for exposures below a certain level. This means that multiple exposure values map to the same response pixel value, so the function cannot actually be inverted. Therefore the above equations cannot determine the actual exposure value if the input pixel value is too small or too large.
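The following sketch illustrates the problem with a toy camera. Everything in it is an assumption made for the example: the response is linear in exposure and saturates at 255, which is not how the D40 behaves, and none of these functions exist in the starter code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Toy forward response (an assumption for illustration only):
// linear in exposure, saturating at pixel value 255.
int toy_response(double exposure) {
    assert(exposure >= 0.0);
    const double z = std::round(255.0 * exposure);
    return static_cast<int>(std::min(z, 255.0));
}

// The toy camera's curve in g(Z) = ln(exposure) form.
// z = 0 is excluded because ln(0) is undefined.
double toy_g(int z) {
    assert(z >= 1 && z <= 255);
    return std::log(z / 255.0);
}

// Ei = exp( g(Zi) - ln(dt) ), exactly as in the equations above.
double recover_irradiance(int z, double dt) {
    assert(dt > 0.0);
    return std::exp(toy_g(z) - std::log(dt));
}
```

With a 1 second exposure, irradiances of 4 and 40 both saturate to 255, and both are recovered as 1. With a 1/64 second exposure the same two pixels land at different values inside the curve, and the recovered irradiances come out close to 4 and 40 (up to quantization).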

So we need enough photographs such that every pixel is within a usable range in at least one photo (best if it's, e.g., in [64,192]). Using this method, each photo gives one estimate of the irradiance at each pixel (that is, we have Eij = exp( g(Zij) - ln(dt_j) ) for each pixel i in each image j). Then we need to combine them somehow. To do this we'll weight them so that Eij has high weight if the pixel value Zij has high confidence, i.e. it lies in the middle of the response curve. We've already created a weight function for you (CameraResponse::Weight()) which accomplishes this. Then we just calculate a weighted average (which we do on the ln values, not the actual values):

ln (Ei) = sum_over_j( weight(Zij)*(g(Zij) - ln(dt_j)) ) / sum_over_j( weight(Zij) )

and the Ei can easily be found.
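As a rough sketch of the arithmetic for a single pixel and channel, consider the code below. It does not use the real CameraResponse class. The g table is passed in as a plain array, and hat_weight is a hypothetical stand-in for CameraResponse::Weight() that peaks in the middle of the range. The formula is undefined when every sample has zero weight. Falling back to the first photo's estimate is one possible policy chosen for this example, not something the assignment prescribes.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for CameraResponse::Weight(): a "hat" that is
// 0 at pixel values 0 and 255 and largest in the middle of the range.
double hat_weight(int z) {
    return static_cast<double>(std::min(z, 255 - z));
}

// Combine one pixel's samples across all photos and return Ei.
// g[z] is ln(exposure) for pixel value z; z[j] and dt[j] belong to photo j.
double combine_pixel(const std::array<double, 256>& g,
                     const std::vector<int>& z,
                     const std::vector<double>& dt) {
    assert(!z.empty() && z.size() == dt.size());
    double weighted_log_sum = 0.0;
    double weight_total = 0.0;
    for (std::size_t j = 0; j < z.size(); ++j) {
        assert(z[j] >= 0 && z[j] <= 255 && dt[j] > 0.0);
        const double w = hat_weight(z[j]);
        weighted_log_sum += w * (g[z[j]] - std::log(dt[j]));
        weight_total += w;
    }
    if (weight_total == 0.0) {
        // Every sample was 0 or 255; use the first estimate (assumed policy).
        return std::exp(g[z[0]] - std::log(dt[0]));
    }
    // The average is taken on ln(Ei), so exponentiate at the end.
    return std::exp(weighted_log_sum / weight_total);
}
```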

You should implement this function in recover_hdr(). Only the arguments to the function are necessary to accomplish this. Carefully read the comments for the functions of the CameraResponse class to make sure you're getting the values you expect (i.e. whether you're getting the value itself or ln(value)).

You can perform this process by running

hdr -create photos.list response.cr photo_out.pfm

which will take the file photos.list and the response curve response.cr and create the HDR image and save it to photo_out.pfm. Note that pfm files are uncompressed, so for high resolution images such as those from cameras, the file will be quite large (close to 100 megabytes).

Taking a Virtual Photograph

Given an HDR image and a camera response we can actually find the image that would be generated by that camera. This is a straightforward use of the response curve. For some shutter speed dt, we find the exposure for each pixel and look up the response. We've given you a function in CameraResponse to lookup the response for some exposure, so this should be simple to implement.

The camera response curve we use doesn't necessarily need to be the one the original photographs were taken with. You can actually find out what the same image, taken with different imaging systems, would look like!

Implement this process in the function virtual_photo(). When you run the program with

hdr -vp photo.pfm response.cr

it will calculate and display virtual photographs. You can increase/decrease the shutter speed using +/-. It increments by 1/3 stop. Hitting s will save the current virtual photograph to vp.jpg.
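The per-pixel work can be sketched as follows. The forward response here is a hypothetical gamma-style curve invented for the example. In your code the lookup should come from the function provided in CameraResponse. The sketch also assumes that each 1/3 stop step multiplies or divides the shutter time by 2^(1/3).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical forward response (not the D40's): a gamma-style curve
// that saturates once the exposure reaches 1.
int toy_forward_response(double exposure) {
    const double clamped = std::min(std::max(exposure, 0.0), 1.0);
    return static_cast<int>(std::lround(255.0 * std::pow(clamped, 1.0 / 2.2)));
}

// One channel of one virtual-photo pixel: exposure = Ei * dt,
// then look up the response for that exposure.
int virtual_pixel(double irradiance, double dt) {
    assert(irradiance >= 0.0 && dt > 0.0);
    return toy_forward_response(irradiance * dt);
}

// Shutter time after moving by a number of 1/3 stop steps
// (positive steps lengthen the exposure, negative steps shorten it).
double step_shutter(double dt, int third_stops) {
    assert(dt > 0.0);
    return dt * std::pow(2.0, third_stops / 3.0);
}
```

Three steps in one direction make a full stop, so step_shutter(1.0, 3) gives a 2 second exposure.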

Displaying an HDR Image: Linear Mapping

In order to display an HDR image we need to map it to a low dynamic range display. Linear mapping is the simplest approach and simply scales and clamps the HDR values so a certain range is displayed and any values outside that range are clamped to the maximum LDR value. Implement this approach in scale_hdr so that [0,max_val] in the HDR image maps to [0,255] in the LDR image.

When you run the program with

hdr -view photo.pfm

it will use this display scheme. Use +/- to increase/decrease the range of values that are scaled to the range [0,255]. Hitting s will save the current image to view.jpg.
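For a single channel value the linear mapping amounts to a divide, a clamp, and a multiply. A minimal sketch with a made-up function name follows. The actual scale_hdr works on whole images and STColor values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Map one HDR channel value so that [0, max_val] covers [0, 255].
// Values above max_val clamp to 255 and negative values clamp to 0.
int scale_channel(double hdr_value, double max_val) {
    assert(max_val > 0.0);
    const double normalized =
        std::min(std::max(hdr_value / max_val, 0.0), 1.0);
    return static_cast<int>(std::lround(255.0 * normalized));
}
```

Raising max_val brings brighter regions into range but compresses everything darker into fewer output levels, which is the limitation of this mapping.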

Displaying an HDR Image: Tone Mapping

After implementing and experimenting with the linear mapping you'll realize that this is not a very effective way of displaying HDR images. A non-linear mapping from the HDR values to the LDR values can show detail at more levels. Here we'll describe a very simple tone mapping algorithm which is pretty effective. Your job is to implement it.

This algorithm is based on the key of a scene. The key of a scene indicates whether it is subjectively light, normal, or dark. High-key scenes are light (think of a white room or snow), normal-key scenes are average, and low-key scenes are dark (think of night shots). This algorithm uses the log-average luminance as an approximation to the key of the scene. The log-average luminance is defined as

Lw_avg = exp( sum_over_i( log(delta + Lw_i) ) / N )

where i indexes pixels, Lw_i is the luminance of the pixel, and N is the number of pixels. delta is a small value which ensures we don't ever take the log of 0. We then scale all pixels Ei in the HDR image using this value and a user defined key setting, a:

Ei_scaled = (a / Lw_avg) * Ei

So far all we've done is scale the values. We now need to compress all possible values into the range [0,1]. To do this we do the following

Ci = Ei_scaled / (1 + Ei_scaled)

When Ei is 0, Ci is also 0. As Ei gets larger, Ci gets larger as well, but its upper limit is 1. Finally we can scale this to the range for STPixels and store it in the output image.

Code this algorithm in the tonemap() function.
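To show how the three formulas chain together, here is a standalone sketch that works on a plain vector of colors rather than on STHDRImage. Several things in it are assumptions: the Rgb struct and function names are invented, luminance is computed with the Rec. 709 weights (check what STColor's helper functions provide), the scale and compression are applied to each channel separately, and the default delta is arbitrary.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Rgb { double r, g, b; };

// Luminance using Rec. 709 weights (an assumption for this sketch).
double luminance(const Rgb& c) {
    return 0.2126 * c.r + 0.7152 * c.g + 0.0722 * c.b;
}

// Lw_avg = exp( sum_over_i( log(delta + Lw_i) ) / N )
double log_average_luminance(const std::vector<Rgb>& pixels, double delta) {
    assert(!pixels.empty() && delta > 0.0);
    double log_sum = 0.0;
    for (const Rgb& p : pixels) {
        log_sum += std::log(delta + luminance(p));
    }
    return std::exp(log_sum / static_cast<double>(pixels.size()));
}

// Ci = Ei_scaled / (1 + Ei_scaled): 0 stays 0, large values approach 1.
double compress(double scaled) {
    return scaled / (1.0 + scaled);
}

// Scale by (a / Lw_avg), then compress each channel into [0, 1).
std::vector<Rgb> tonemap_sketch(const std::vector<Rgb>& pixels, double key,
                                double delta = 1e-4) {
    const double scale = key / log_average_luminance(pixels, delta);
    std::vector<Rgb> out;
    out.reserve(pixels.size());
    for (const Rgb& p : pixels) {
        out.push_back({compress(scale * p.r), compress(scale * p.g),
                       compress(scale * p.b)});
    }
    return out;
}
```

The results are in [0, 1), so a final multiply by 255 gives values suitable for an 8-bit image.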

When you run the program with

hdr -tonemap photo.pfm

it will use this display scheme. Use +/- to increase/decrease the key value. Hitting s will save the current tonemapped image to tonemapped.jpg.

Hints for Getting Started

- Note that this program only works with command line arguments - you must specify the function you want to do and its parameters. You should know from the last assignment how to change the command line arguments in your IDE.

- The files you're dealing with are relatively large. If you use enough shots, they can't all fit in memory at once. Make sure you write your code such that only 1 image from disk is in memory at once.

- Running with Debugging can be painfully slow for large images such as those you'll get from the camera. We're providing some pfm files for testing the second part of the assignment but when you're confident of your implementation of a function you'll want to run it in Release mode when possible.

- You'll probably want to scale down your images for testing. Doing so will make your debug cycle go much faster.
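One way to honor the memory hint above when building the HDR image is to keep two running buffers, the weighted sum of ln(E) estimates and the sum of weights, and fold each photo into them before loading the next. The class below is a hypothetical sketch of that structure for a single channel. It is not part of the starter code, its weight is a made-up hat function standing in for CameraResponse::Weight(), and leaving zero-weight samples at 0 is a placeholder policy you would want to revisit.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

class LogIrradianceAccumulator {
public:
    explicit LogIrradianceAccumulator(std::size_t sample_count)
        : weighted_log_sum_(sample_count, 0.0),
          weight_total_(sample_count, 0.0) {}

    // Fold in one decoded photo (one channel). Afterwards the caller can
    // release the photo before loading the next one from disk.
    void add_photo(const std::vector<std::uint8_t>& z, double dt,
                   const std::array<double, 256>& g) {
        assert(z.size() == weight_total_.size() && dt > 0.0);
        const double log_dt = std::log(dt);
        for (std::size_t i = 0; i < z.size(); ++i) {
            // Hypothetical hat weight: 0 at the ends, largest mid-range.
            const double w = std::min<int>(z[i], 255 - z[i]);
            weighted_log_sum_[i] += w * (g[z[i]] - log_dt);
            weight_total_[i] += w;
        }
    }

    // Ei for every sample; samples that never received any weight stay 0.
    std::vector<double> finish() const {
        std::vector<double> irradiance(weight_total_.size(), 0.0);
        for (std::size_t i = 0; i < irradiance.size(); ++i) {
            if (weight_total_[i] > 0.0) {
                irradiance[i] =
                    std::exp(weighted_log_sum_[i] / weight_total_[i]);
            }
        }
        return irradiance;
    }

private:
    std::vector<double> weighted_log_sum_;
    std::vector<double> weight_total_;
};
```

The memory cost is two buffers the size of the output plus whichever photo is currently loaded, independent of how many photos are in the stack.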

Sample Results

You can find some sample results from the church photo stack here. The image filenames specify the mode and the number of +/- steps taken to obtain the image.

Grading

- 1 star - Took HDR photo stack

- 2 stars - Took HDR photo stack + generated HDR image

- 3 stars - Took HDR photo stack + generated HDR image + virtual photographs + linear mapping view

- 4 stars - Took HDR photo stack + generated HDR image + virtual photographs + linear mapping view + tone mapping

Submission Instructions

We would like submission to be in the form of a single .zip archive. This archive should contain your modified version of hdr.cpp and a text file containing answers to assignment questions. Please email this zip file to cs148-win0607-staff@lists.stanford.edu before the deadline. Please make the subject line of this email "CS148 Assignment 5 Handin".

Please DO NOT include your photo stack in this zip file. Please post a zip file containing your photo stack on the web or on the course wiki. REMEMBER, the stack needs to include both your images and the text file listing their corresponding shutter speeds. In your handin email, give us a URL to this zip file containing your photo stack.