Non-Euclidean Methods in Machine Learning

“Data has Shape and Shape has Meaning”

“I am just not interested in ad-hoc solutions invented by clever people. I want a method that works for lots of problems. I am not looking for hundreds of keys to solve these problems. I am looking for the skeleton key that opens many doors.” -- Andrew Vázsonyi, also known as Endre Weiszfeld

Welcome to the Fall 2020 course website for Non-Euclidean Methods in Machine Learning (CS468), Stanford University, Department of Computer Science and Geometric Computing Group.

What Will We Cover?

Riemannian Geometry: It all started on 10 June 1854, when Bernhard Riemann (1826 - 1866) gave his famous “Habilitationsvortrag” in the Colloquium of the Philosophical Faculty at Göttingen. His talk “Über die Hypothesen, welche der Geometrie zu Grunde liegen” (On the Hypotheses which lie at the Foundations of Geometry) impressed many, including his 77-year-old advisor Carl Friedrich Gauss. Riemann’s revolutionary ideas generalized the geometry of surfaces and led to a precise definition of the modern concept of an abstract Riemannian manifold (Mannigfaltigkeit). The study of manifolds combines many important areas of mathematics: it generalizes concepts such as curves and surfaces, and it draws on ideas from algebra and topology. In English, "manifold" refers to spaces with a differentiable or topological structure, while "variety" refers to spaces with an algebraic structure, as in algebraic varieties. Riemann extended Gauss's theory of surfaces to higher-dimensional manifolds in a way that allows distances and angles to be measured and curvature to be defined. Moreover, these definitions are intrinsic to the manifold and do not depend on its embedding in some higher-dimensional space. Albert Einstein later used the theory of (pseudo-)Riemannian manifolds to develop general relativity, the geometric theory of gravitation he published in 1915. In this course, we will show how this theory can be applied to machine learning broadly, and to computer vision as a specific application area. The focus is on learning the fundamentals and applying the theory to solve challenging real problems.
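As a small illustration of why measuring distance on a manifold differs from measuring it in the surrounding flat space, consider the unit sphere. The sketch below (plain Python; the function names and the choice of the sphere are our own, for illustration only) compares the straight-line chord through the ambient space with the great-circle (geodesic) distance, which is the length of the shortest path that stays on the surface.

```python
import math

def chord_distance(p, q):
    # Straight-line (ambient Euclidean) distance; the segment cuts
    # through the interior of the sphere and leaves the manifold.
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def geodesic_distance(p, q):
    # Great-circle distance between two points on the unit sphere:
    # the angle between them. Clamp the dot product to [-1, 1] to
    # guard against floating-point rounding before calling acos.
    dot = sum(a * b for a, b in zip(p, q))
    return math.acos(max(-1.0, min(1.0, dot)))

north = [0.0, 0.0, 1.0]
equator = [1.0, 0.0, 0.0]
print(chord_distance(north, equator))     # sqrt(2), about 1.4142
print(geodesic_distance(north, equator))  # pi/2, about 1.5708
```

The gap between the two numbers comes from curvature: on a flat plane the two notions of distance coincide, while on the sphere every path that respects the surface is longer than the chord.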

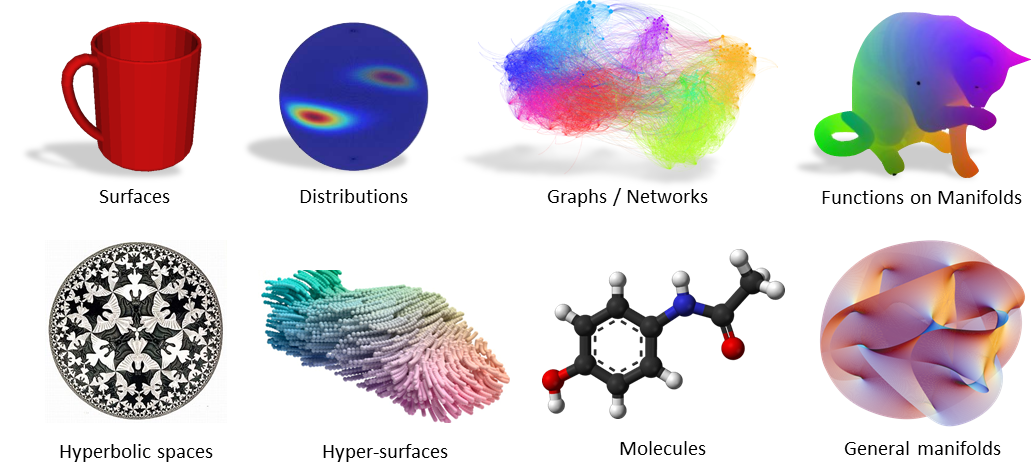

Non-Euclidean Machine Learning: We understand the world by interacting with the bodies we observe. This Kantian empirical realism, called experience, is made possible by the a priori Euclidean constraints on space. Although limited by the scales and tolerances of our senses, this flat view of the world has driven progress in many fields and laid the foundations of the first AI systems. Recent work challenges this perspective by showing that both the world around us and the answers we are looking for often admit a non-Euclidean, curved structure. It is therefore desirable for our machine learning models to adapt to this structure naturally. In this course, we cover the nuts and bolts of learning non-Euclidean embeddings that connect non-Euclidean domains and parameter spaces. We will apply optimization techniques from Riemannian geometry, bring in knowledge from graph theory, and present novel developments in neural networks suited to data living on lower-dimensional manifolds.
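One of the simplest Riemannian optimization techniques is gradient descent on a manifold. The following is a minimal sketch on a toy problem of our own choosing (not a method taken from the course material): it minimizes the quadratic form f(x) = xᵀAx over the unit sphere. Each step projects the Euclidean gradient onto the tangent space at the current point, moves along it, and then retracts the result back onto the sphere by renormalizing. The matrix `A`, step size, and iteration count are illustrative assumptions.

```python
import math

# Hypothetical toy problem: minimize f(x) = x^T A x on the unit sphere S^2.
# For symmetric A, the minimizer is an eigenvector of the smallest eigenvalue.
A = [[3.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 1.0]]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

def f(x):
    return dot(x, matvec(A, x))

def riemannian_grad(x):
    # Euclidean gradient of x^T A x is 2 A x (A symmetric).
    g = [2.0 * gi for gi in matvec(A, x)]
    # Project onto the tangent space at x by removing the component along x.
    c = dot(x, g)
    return [gi - c * xi for gi, xi in zip(g, x)]

def retract(x, step):
    # Map the tangent-space step back onto the sphere by renormalizing.
    return normalize([xi + si for xi, si in zip(x, step)])

def riemannian_gd(x0, lr=0.1, iters=200):
    x = normalize(x0)
    for _ in range(iters):
        rg = riemannian_grad(x)
        x = retract(x, [-lr * gi for gi in rg])
    return x

x_star = riemannian_gd([1.0, 1.0, 1.0])
print(x_star, f(x_star))  # x approaches [0, 0, 1] and f approaches 1
```

The iterate never leaves the sphere, so the constraint is enforced by the geometry itself rather than by a penalty term; libraries for Riemannian optimization generalize this project-step-retract pattern to other manifolds.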

What We Will Not Cover: No Ricci flows, Poincaré conjecture, fibre bundles or homology. We will also not cover learning on general manifolds that are not smooth or differentiable. Because the material is involved, we will try to keep the course as accessible as possible.

Important information related to CS468 will be posted on this website including:

The CS 468 course staff wish to thank the Computer Forum for their support.