Database-Assisted Object Retrieval for Real-Time 3D Reconstruction

Stanford University

Eurographics 2015


Abstract

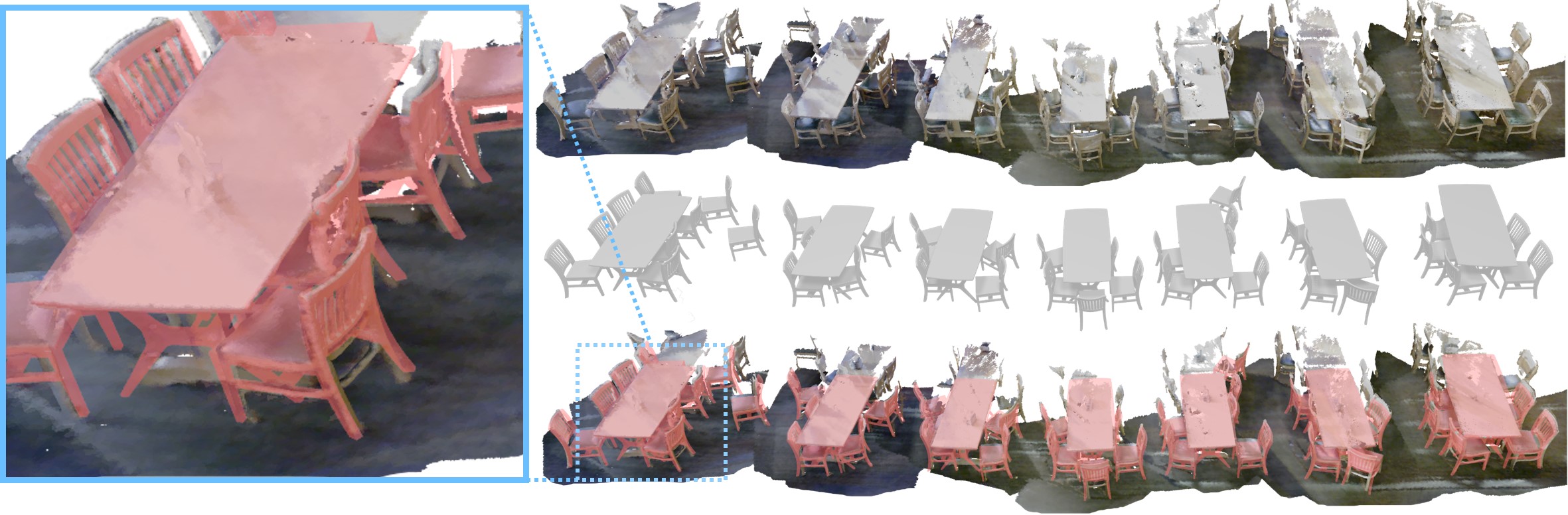

In recent years, real-time 3D scanning technology has developed significantly and is now able to capture large environments with considerable accuracy. Unfortunately, the reconstructed geometry still suffers from incompleteness, due to occlusions and lack of view coverage, resulting in unsatisfactory reconstructions. In order to overcome these fundamental physical limitations, we present a novel reconstruction approach based on retrieving objects from a 3D shape database while scanning an environment in real time. With this approach, we are able to replace scanned RGB-D data with complete, hand-modeled objects from shape databases. We align and scale retrieved models to the input data to obtain a high-quality virtual representation of the real-world environment that is faithful to the original geometry. In contrast to previous methods, we are able to retrieve objects in cluttered and noisy scenes even when the database contains only similar models, but no exact matches. In addition, we put a strong focus on object retrieval in an interactive scanning context: our algorithm runs directly on 3D scanning data structures, and is able to query databases of thousands of models in an online fashion during scanning.

Paper HQ | Paper LQ | 3D Scene Dataset | BibTeX citation | Video | Slides

We provide a dataset containing RGB-D data of a number of large, cluttered scenes, for the task of object recognition. Each scene contains color and depth images, along with the camera trajectory. All scenes were recorded from Kinect/PrimeSense sensors at 640x480 resolution. Please refer to the respective publication when using this data.

Format

For each scene, we provide a zip file containing a sequence of tracked RGB-D camera frames. We use the VoxelHashing framework for camera tracking and reconstruction. Each sequence contains:

- Color frames (frame-XXXXXX.color.png): RGB, 24-bit, PNG

- Depth frames (frame-XXXXXX.depth.png): depth (mm), 16-bit, PNG (invalid depth is set to 0)

- Camera poses (frame-XXXXXX.pose.txt): camera-to-world

- Each retrieved model and its rigid transform in MeshLab .aln format (<dataset>_reconstruction.aln) and/or

- The retrieved models as a single .ply
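The per-frame files listed above can be handled with a few small helpers. The following is a minimal sketch, not code from the VoxelHashing framework. It assumes that XXXXXX is a zero-padded six-digit frame index and that each pose file holds a row-major 4x4 matrix written as 16 whitespace-separated numbers; neither detail is stated on this page. All function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Matrix4 = List[List[float]]


def frame_paths(index: int) -> Dict[str, str]:
    """Build the file names for one frame.

    ASSUMPTION: XXXXXX is a zero-padded six-digit frame index.
    """
    stem = f"frame-{index:06d}"
    return {
        "color": f"{stem}.color.png",
        "depth": f"{stem}.depth.png",
        "pose": f"{stem}.pose.txt",
    }


def parse_pose(text: str) -> Matrix4:
    """Parse a camera-to-world pose.

    ASSUMPTION: the file stores a row-major 4x4 matrix as
    16 whitespace-separated numbers.
    """
    values = [float(token) for token in text.split()]
    if len(values) != 16:
        raise ValueError(f"expected 16 values, got {len(values)}")
    return [values[row * 4:(row + 1) * 4] for row in range(4)]


def depth_mm_to_m(raw: int) -> Optional[float]:
    """Convert a 16-bit depth sample from millimetres to metres.

    A raw value of 0 marks invalid depth and maps to None.
    """
    return None if raw == 0 else raw / 1000.0


def camera_to_world(pose: Matrix4,
                    point: Tuple[float, float, float]) -> Tuple[float, ...]:
    """Apply the camera-to-world transform to a camera-space point."""
    x, y, z = point
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in pose[:3])
```

Back-projecting a depth pixel into a camera-space point additionally requires the sensor intrinsics, which are not listed here, so that step is left out of the sketch.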

Acknowledgements

We would like to thank IKEA for granting us scanning access.

License

The data has been released under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License.

|

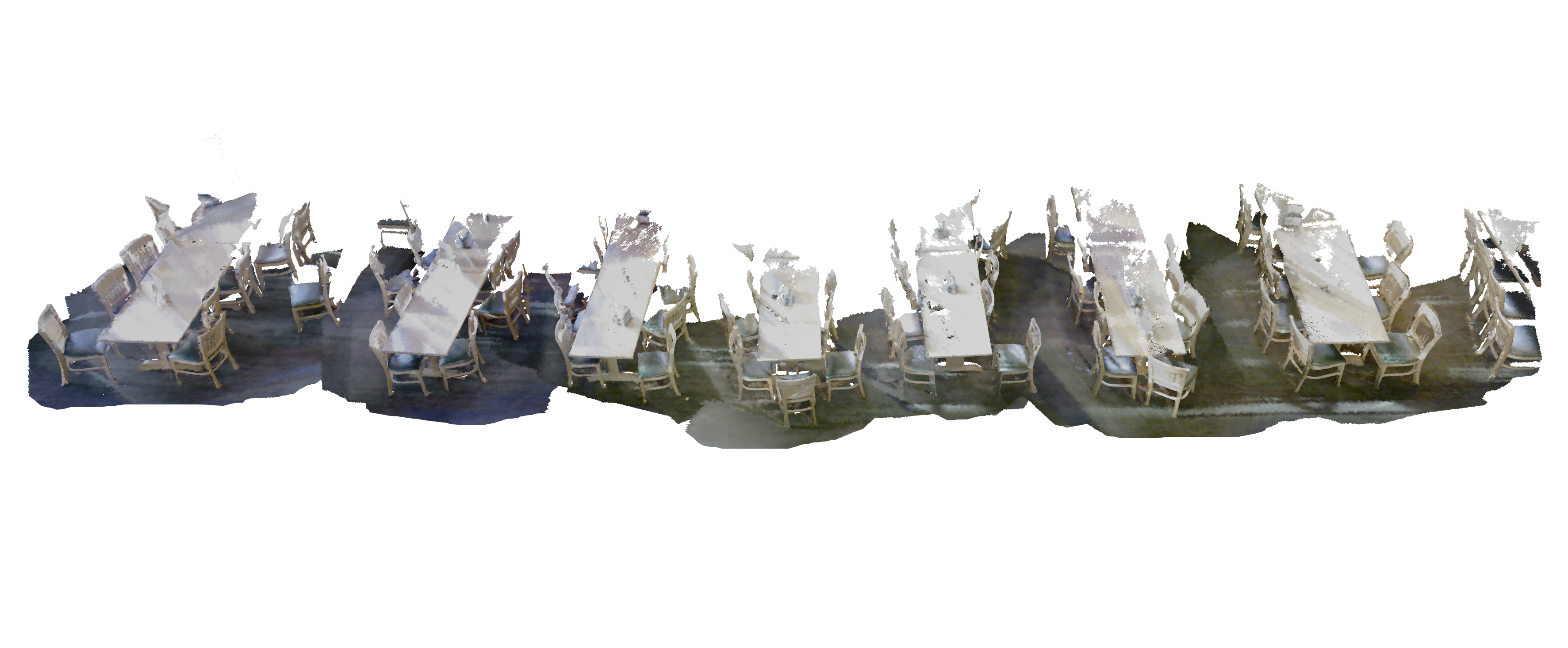

Dining HallRGB-D data: Retrieved Models: dininghall_reconstruction.zip |

|

|

|

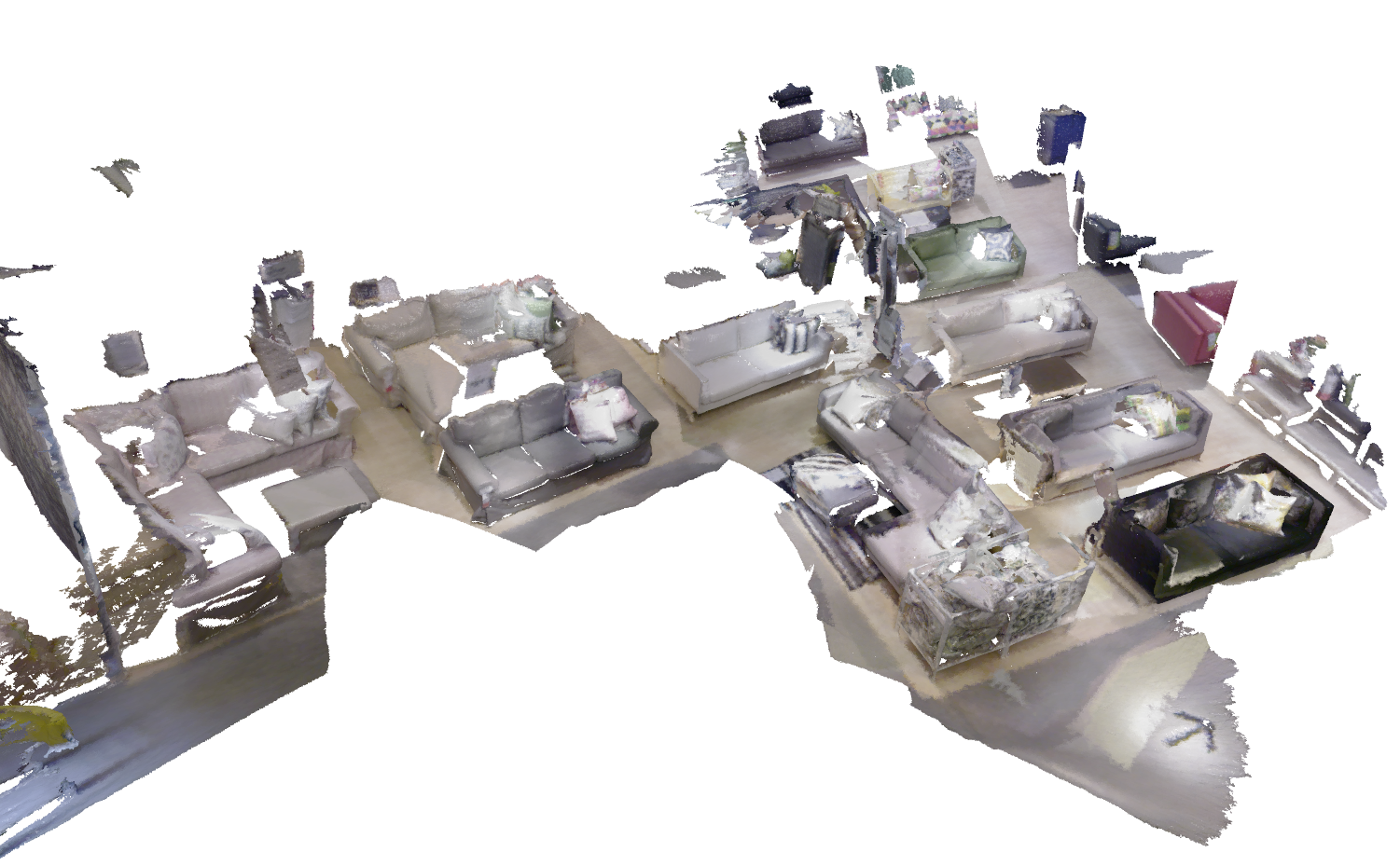

IKEA CouchesRGB-D data: Retrieved Models: ikea-couches_reconstruction.zip |

|

|

|

IKEA ChairsRetrieved Models: ikea-chairs_reconstruction.zip |

|

|

|

IKEA TableRetrieved Models: |

|

|

|

OfficeRetrieved Models: |

|

|

|

Chairs |

|

|

|

Single Chair |

|