Starcraft 2 Automated Player

This is a program that plays Starcraft 2 (SC2) by intercepting, understanding, and reacting to the D3D9 API stream, then sending keyboard and mouse commands back to SC2. It uses the D3D9 API Interceptor project. It is not at all like AIs made in the SC2 editor using the built-in scripting language, or projects like BWAPI (which is for the original Starcraft only) that work by attaching to the address space of the host application. AIs written using these other methods are often able to bypass several restrictions that all human players must cope with; for example, they can simultaneously issue different actions to different units, they do not have an active viewport and are thus able to see exactly what is occurring off-screen at all times, and they do not have to deal with trying to click on ground units that are covered by flying units. I chose this method both because I found it more novel than many other approaches taken to automation problems, and because, being a computer graphics programmer, it is easier for me to work with the graphics API calls than with the SC2 code itself. Furthermore, working with the API calls generalizes more easily to interfacing with different applications. Of course, this AI still has a significant APM advantage over human players.

Note that this program does not interact with any Starcraft 2 code any more than your graphics driver and audio driver interact with Starcraft 2. Even so, the primary purpose of this page is to provide a sample program that interfaces with the D3D9 interceptor and interprets the intercepted commands. By itself, all the provided code will do is allow you to look at frame captures and the like (which is no more information than running Starcraft 2 under PIX for Windows would give you).

Overview

This project compiles to d3d9Callback.dll, which is fed SC2's API stream by the interceptor's d3d9.dll. There are three main components of the AI, each of which relies heavily on the previous component:

- Mirror Driver — In order to understand what is being rendered, the AI maintains its own copy of all graphics objects. This means it has its own copy of all the textures, shaders, and device state that will be relevant to decision-making. For example, when SC2 calls D3D9Device::DrawIndexedPrimitive(...), the mirror driver maintains enough state to understand that the "Mutalisk" texture is currently bound, and can compute the coordinates of the mutalisk on the screen.

- Scene Understanding — The AI maintains a large amount of high-level information about each frame. Every time the mirror driver receives a new render call, the call is processed and recorded based on the type of call and the current state of the graphics pipeline. For example, the AI maintains a list of all units on screen, the current action buttons, the currently selected units, the presence of the idle worker or warp gate button, the current minimap, etc.

- Decision Making — Once the frame render is completed, the AI needs to decide on an action to take based on what it can currently observe. This high-level view is where the brains of the AI rests. In order to act intelligently a significant amount of persistent state about the current game is maintained, such as the content of all control groups, all units that the AI has built, and all enemy units that have been observed. The AI uses an entity-based decision making process, where each entity gains atomic control over the keyboard and mouse input for a limited period of time, and a task scheduler decides which entity should have access next once the previous entity surrenders control.

Mirror Driver

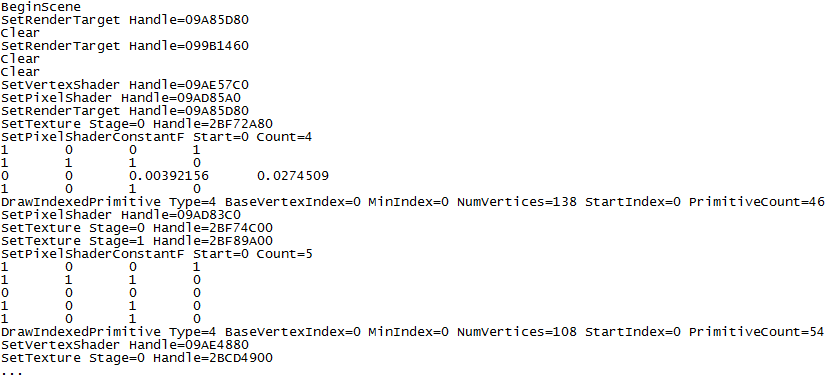

The AI receives a constant stream of API calls from the API interceptor. The need for a mirror driver can be seen by looking at a partial dump of this stream. Note that only relevant function parameters are shown.

When SC2 calls SetTexture, all we receive is a handle to the texture. In order to understand what the render calls that use this texture mean, we need to know what this texture represents. Although in theory we could read this data directly from the graphics card every time it is set, this would be exceedingly expensive. Instead, the AI records this information whenever the content of the texture changes, and maintains a mirror copy of each texture. Thus, SetTexture is just a simple map lookup to acquire the relevant information about the corresponding texture. Here is a list of graphics objects that the mirror driver maintains and what they are used for:

- Textures — Textures are the easiest and most reliable way to identify a render event. I maintain a comprehensive database that maps textures to names. Whenever a new texture is encountered, it is looked up in the database of known textures. If the texture is known, only its name (for example "mutalisk HUD icon") is stored. Sometimes the texture is discarded outright; for example, it might be a procedurally generated texture. Other times the entire texture might need to be stored; for example, fonts need to be stored so on-screen text can be read (see below.) If the texture is not already known and cannot be classified as procedurally generated or a font, it is dumped to a "Captured Textures" directory for future classification.

- Vertex and Index Buffers — The vertex and index buffers contain the raw vertex and index data. Without these the AI wouldn't know how to assemble the rendered geometry into sets of triangles.

- Vertex and Pixel Shaders — Vertex shaders define the mapping from raw vertex data to the screen coordinate of each vertex. Knowing this mapping is necessary because if we don't know the screen coordinates of a render event, we won't be able to click on it, and spatial reasoning is important for building placement and micromanagement. The pixel shader is less important; we rarely need to know the exact color of a pixel, which is good because rasterizing the screen in software would be expensive. In the cases where we do need to know the exact pixel (such as fonts), the pixel shader operation is generally very simple (texture copy). Unfortunately, the interceptor doesn't have access to the original HLSL code; all the interceptor is able to acquire is the shader's compiled bytecode, for which I wrote a simple disassembler, interpreter, and compiler.

- Device State — All D3D devices contain a large amount of internal state. These include the current viewport, the current render state variables (i.e. SetRenderState calls), which shaders, geometry buffers, and textures are currently bound, the pixel and vertex shader constants, and more. This state is central to scene understanding; for example, the currently bound textures are used to classify render events, and the pixel shader constants are used to identify the color of a rendered unit.
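The texture mirroring idea described above (record what a texture is when its content changes, so that SetTexture becomes a map lookup) can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: `TextureHandle`, `TextureInfo`, `TextureKind`, and `TextureMirror` are hypothetical names, and a plain integer stands in for whatever handle the interceptor really passes.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical handle type; the interceptor's real handle type is not shown in this article.
using TextureHandle = std::uint64_t;

// Unknown is listed first so that a value-initialized TextureInfo is "Unknown".
enum class TextureKind { Unknown, Named, Font, Procedural };

struct TextureInfo {
    TextureKind kind;
    std::string name;  // e.g. "mutalisk HUD icon"; empty when not classified
};

class TextureMirror {
public:
    // Called whenever a texture's content changes (creation or update).
    void OnTextureUpdated(TextureHandle handle, const TextureInfo& info) {
        textures_[handle] = info;
    }

    // Called on SetTexture: a cheap map lookup instead of a GPU readback.
    // Handles that were never recorded are reported as Unknown.
    const TextureInfo& OnSetTexture(TextureHandle handle) {
        auto it = textures_.find(handle);
        bound_ = (it != textures_.end()) ? it->second : TextureInfo{};
        return bound_;
    }

    // The texture currently bound, as seen by the mirror.
    const TextureInfo& Bound() const { return bound_; }

private:
    std::unordered_map<TextureHandle, TextureInfo> textures_;
    TextureInfo bound_{};
};
```

A later draw call can then consult `Bound()` to classify the render event without touching the graphics card.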

Sample Frame Capture

A common development scenario goes something like this: "The AI needs to know some graphical property X about the current frame." For example, X might be the current minerals, gas, and supply. Because this is a very common situation, a lot of infrastructure has been developed to make capturing and dissecting frames into their constituent render events as simple as possible. Specifically, whenever the capture key is pressed, for the next frame every API call will be dumped to a file, the front buffer will be saved to a file after every render event, and a table of all render calls and the AI's classification of each event is generated. As an example, we'll look at all the render events used to generate the following frame:

This table shows a complete decomposition of every render event (in render events 0 -> 15, the texture is not used by the shaders and I assume they are doing a Z-prepass. I have no idea who the kid is.) Note that many of the important textures, such as the units and grid icons, have already been classified by looking them up in the texture database. To find a specific render event, you can just look at the render target after each render to see which render call relates to the event you're interested in. For example, the render event corresponding to the current resource levels is render 107:

| Index | Texture 0, RGB | Texture 0, Alpha | Texture Name | Render Type | Primitive Count | Vertex Shader | Pixel Shader | Coordinates | Text |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 107 | | | Font | TriangleList | 28 | 05ca9bb9 | 0654ccb0 | 869, 13 | 9/28@0@50 |

Because Blizzard uses a wide range of glyphs in a variety of sizes, they use a font cache texture that contains only the necessary glyphs. Fonts are then rendered as sets of rectangles whose texture coordinates index into the texture cache (if a needed glyph is not present, the texture is locked and updated.) To verify this, we can look at the disassembly of the pixel shader bytecode for this render event:

Briefly, the dcl_ statements define the mapping from vertex shader outputs to local pixel shader registers. For example, dcl_texcoord0 v0.xy means that "v0 is the interpolated texture coordinate index 0 that the vertex shader outputted." tex is a command to sample a texture, so tex r0, v0, SAMPLER0 says "sample texture index 0 (in this case the font texture) at texture coordinate v0.xy and store the result in r0." Finally, mul does component-wise multiplication of r0 by the interpolated vertex color, storing the result in co0, which is the final color output register that gets written to the frame buffer. So ultimately, this pixel shader just does a trivial texture copy. Most vertex and pixel shaders used by SC2 are much more complicated than this.

So to read a font render event, we start by looking up the texture coordinates for each rectangle, and then look up the corresponding pixels in the font cache bitmap. To actually convert this into ASCII, the AI uses a simple database lookup scheme similar to what it uses for textures. If the character matches a glyph bitmap in the database, its identity is read from the database, otherwise it is saved to a file for later classification. Because text render events will often span disconnected regions of the screen, the "@" symbol is used to denote that the screen space coordinates of two glyphs are not adjacent. So for this render event, the text is read as "9/28@0@50". Simple string parsing is used to interpret this as "you currently have 50 minerals, 0 gas, and 9/28 supply."
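The final string-parsing step can be sketched as below. This assumes the field order given in the example above (supply, then gas, then minerals, separated by "@"); the `Resources` struct and the function names are hypothetical and only illustrate the idea.

```cpp
#include <sstream>
#include <string>
#include <vector>

struct Resources {
    int minerals;
    int gas;
    int supplyUsed;
    int supplyMax;
};

// Splits a string on a single delimiter character.
static std::vector<std::string> Split(const std::string& s, char delim) {
    std::vector<std::string> parts;
    std::istringstream stream(s);
    std::string part;
    while (std::getline(stream, part, delim)) parts.push_back(part);
    return parts;
}

// Parses a short non-negative decimal integer; rejects anything else.
static bool ParseInt(const std::string& s, int& out) {
    if (s.empty() || s.size() > 9) return false;
    int value = 0;
    for (char c : s) {
        if (c < '0' || c > '9') return false;
        value = value * 10 + (c - '0');
    }
    out = value;
    return true;
}

// Interprets text such as "9/28@0@50" as supply 9/28, 0 gas, 50 minerals.
// Returns false (leaving 'out' untouched) if the text does not have that shape.
bool ParseResourceText(const std::string& text, Resources& out) {
    const std::vector<std::string> fields = Split(text, '@');
    if (fields.size() != 3) return false;
    const std::vector<std::string> supply = Split(fields[0], '/');
    if (supply.size() != 2) return false;

    Resources r{};
    if (!ParseInt(supply[0], r.supplyUsed)) return false;
    if (!ParseInt(supply[1], r.supplyMax)) return false;
    if (!ParseInt(fields[1], r.gas)) return false;
    if (!ParseInt(fields[2], r.minerals)) return false;
    out = r;
    return true;
}
```

Rejecting malformed text outright matters here because a misread glyph should cause the frame's resource reading to be skipped, not silently produce wrong numbers.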

Scene Understanding

The set of all information gathered from the render events is accumulated and stored by the AI for use in the decision-making stage. Specifically, the following information is acquired:

- Units — All rendered units and their screen-space extents, player color, etc. are recorded. As can be seen in render events 19 and 20 in the table, a single unit's texture may be used in multiple render events. So to avoid counting a single unit multiple times, a unit render event is identified by a unit's texture being bound and a specific number of triangles being rendered.

- Text — All text is captured using the method above. Text is classified based on the screen coordinates of the event. Important text events include the current resource levels, the selected unit's name, HP, Energy, and Shields, the number of idle workers and idle warp gates, enemy chat text, etc.

- Portraits — When multiple units are selected, they show up as a set of portraits in the unit info region. These are all recorded, as well as various properties such as the health of each unit, and for production buildings the number of units currently in production.

- Building Queue — When a production building is selected, the contents of the build queue are stored.

- Health Bars — All health, energy, and shield bars are saved and then a matching between units and health bars is constructed.

- Action Buttons — The grid in the lower right, which stores all available actions, is saved. It is also noted whether each button is active, disabled, cooling down, out of energy, or selected.

- Minimap — The minimap is periodically captured, along with the location of the viewport rectangle.

- HUD State — Various other aspects of the HUD are recorded, such as the presence of the idle worker or warp gate buttons, and the number of levels in the selected control group.
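The unit-identification rule from the Units item above (a unit's texture is bound and a specific number of triangles is rendered) can be sketched like this. The type names and the triangle counts used with it are made up for illustration; the real counts come from the unit database.

```cpp
#include <string>
#include <unordered_map>

// A simplified view of one render event as classified by the mirror driver.
struct RenderEvent {
    std::string textureName;  // name from the texture database, e.g. "Mutalisk"
    int primitiveCount;       // number of triangles drawn by this call
};

class UnitClassifier {
public:
    // Registers the triangle count of a unit's dominant render call.
    void AddUnit(const std::string& textureName, int dominantTriangleCount) {
        dominantTriangles_[textureName] = dominantTriangleCount;
    }

    // True only for the one render call per unit that should be counted,
    // so that secondary calls using the same texture are not counted again.
    bool IsUnitEvent(const RenderEvent& e) const {
        auto it = dominantTriangles_.find(e.textureName);
        return it != dominantTriangles_.end() && it->second == e.primitiveCount;
    }

private:
    std::unordered_map<std::string, int> dominantTriangles_;
};
```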

Decision Making

The AI decides what actions to take by combining information about the current frame with a large amount of knowledge it maintains about the state of the current game. Often, the AI will want to take multiple actions, and these actions need to be prioritized and serialized in a manner equivalent to OS threading models. We define a program to be a task that requires uninterrupted control over SC2 (in an OS, these would be considered atomic operations). The AI maintains a set of threads, each of which can submit programs for execution. When no program is running, the AI selects a program that one of the threads has submitted for execution. Each program decides for itself when to relinquish control of the keyboard and mouse to another program. Just like in an OS, programs need to execute in a reasonable amount of time to allow as much multitasking as possible. Examples of program tasks include: order one of the idle workers, order a queen to spawn larvae, or build a stargate. Programs can complete even if they were not successful; for example, a "build a gateway" program may not be able to find a valid location within a pylon field, or a "spawn larvae" program might not be able to find any queens. This return status is reported back to the thread that submitted the program, all threads update and submit new work (if any), and the process repeats until the game ends. When submitting a program, threads specify a priority. This priority is used to decide which thread gets to execute next; if there is a tie, the thread that has waited the longest time since its last program was executed gets priority.
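The selection rule at the end of this paragraph (highest priority first, ties broken by the longest wait) can be sketched as follows. The names are hypothetical, and treating a larger number as a higher priority is an assumption made for this example.

```cpp
#include <string>
#include <vector>

// One program waiting to run, as submitted by a thread.
struct PendingProgram {
    std::string threadName;
    int priority;             // assumption: larger value means more urgent
    double lastExecutedTime;  // game time at which this thread's previous program ran
};

// Returns the index of the program that should run next, or -1 if nothing is pending.
// Higher priority wins; on a tie, the thread that ran least recently wins.
int SelectNextProgram(const std::vector<PendingProgram>& pending) {
    int best = -1;
    for (int i = 0; i < static_cast<int>(pending.size()); ++i) {
        if (best < 0) {
            best = i;
            continue;
        }
        const PendingProgram& candidate = pending[i];
        const PendingProgram& current = pending[best];
        const bool higherPriority = candidate.priority > current.priority;
        const bool tieButWaitedLonger =
            candidate.priority == current.priority &&
            candidate.lastExecutedTime < current.lastExecutedTime;
        if (higherPriority || tieButWaitedLonger) best = i;
    }
    return best;
}
```

Because a program holds the keyboard and mouse until it returns, this selection only happens between programs, never in the middle of one.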

Taken together, the threads are the main intelligence of the AI. Below is a list of threads and what they do:

- Control Group Check — The AI uses all ten control groups extensively. Periodically (approximately every five seconds) this thread submits a program that checks the contents of all ten control groups. This helps the AI realize when buildings have been destroyed, which production buildings are not producing units, etc. This program only checks the 1st level of each control group.

- Army Check — The AI frequently queries all levels of the army control group. This is used to make retreat and assault decisions. This is separate from the control group check program so that the frequency of these two programs can be decoupled.

- Army — This thread submits programs that order the entire army to retreat or attack. It is also in charge of scouting with one of the army units.

- Worker — Whenever an idle worker is detected, this thread submits a program to assign that worker a new task.

- Gas Worker — Occasionally it is possible that workers get accidentally pulled off gas or destroyed. When this is detected, this thread will wake up and update the gas worker assignments.

- Micro — This thread wakes up when the AI detects that its units are engaging in a battle. It goes through all units and assigns them actions, if needed. The ability of the AI to rapidly decide on and issue a large number of orders in battle situations is one of its key advantages over human players.

- Production — Whenever the AI detects that it has enough resources to build the next unit, this thread wakes up and submits a program to build that unit. The build order is controlled by this thread.

- Search — This is the AI's idle thread. This thread explores a random visible region, and adds units in that region to their corresponding control groups. It can also detect incoming trouble, such as the presence of cloaked units that will not trigger on the minimap.

- Overlord — This race-specific thread handles overlord scouting and retreating.

- Chrono Boost, Queen, Orbital Command — These race-specific threads submit programs that cast the Chrono Boost, Spawn Larvae, and MULE abilities, respectively.

State Maintenance and Bookkeeping

When making decisions, some information can be inferred immediately from the current frame. For example, the current resources can immediately tell you if you have enough minerals, gas, and supply to build a certain unit. On the other hand, some information must either be rediscovered or remembered based on information from previous frames, such as whether you have a larva available to be turned into a new unit. Many tasks can be approached by relying on differing amounts of state information. Consider two approaches to the task of putting military units into control groups. Approach A: whenever a unit is produced, insert it into an internal queue, and one second after the unit should complete production, go to the building that built the unit and add it to the control group. Approach B: every five seconds, go to each production building and add all nearby military units to control groups. At first glance, relying heavily on state-based solutions like approach A might seem simple and effective for a computer. Unfortunately, a large number of errors can (and will) occur: lag might slow down the game so that the unit is not actually ready after the predicted time, the AI might select another nearby unit of the same type, etc. These problems, even if they occur infrequently, are severe: units that are not added to the control group might never be added for the rest of the game. Approach B, on the other hand, will, given enough time, guarantee that all units eventually get added to their correct control groups. This problem occurs in many other areas of the AI, and relying too heavily on state can be devastating, especially when the enemy acts in unpredictable ways. A general idea is that almost no state should be infinitely persistent, and given enough time, the AI should always update its internal state based on new evidence rather than relying on its memory of the game state.
Achieving this goal also has the added benefit that the AI interacts well with a human; if a human disables the AI to perform some action, then enables the AI, the AI will eventually adapt to the changes the human made even if it didn't actually understand the actions the human took.

Here is a list of most of the persistent game state stored by the AI:

- Control Groups — When the control group check program scans all the control groups, it is stored so production and assault decisions can be made.

- Bases — A list of who controls all bases on the map and what buildings have been observed at each.

- Research — A list of all research that has been completed or is currently being researched.

- Production Buildings — A list of all production buildings, what they are producing, and when they will next be available to produce more units.

- Construction — A list of structures currently being built and when they will finish.

- Worker Allocation — A list of how many workers are on minerals at each base, and how many workers have been assigned to each vespene geyser.

- Minimap History — The AI stores the last time each location on the minimap has been scouted. This is used to determine which bases need scouting next.

While the AI relies to a limited extent on the accuracy of this state information, it will eventually correct all state errors. For example, if it believes a Cybernetics Core should have finished at t = 200s, but has not yet observed a Cybernetics Core by t = ~210s, it will assume that the Cybernetics Core was either destroyed, cancelled, blocked by allied or enemy units, etc., and build another one. While it may seem that when these problems occur they could be detected and corrected, there will always be problems that are overlooked, and failing to build in extreme contingency plans will occasionally result in a stagnant and ineffectual AI.
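The Cybernetics Core example amounts to giving every remembered expectation a deadline. A minimal sketch, assuming a fixed grace period (the roughly 10 seconds implied by the example) and hypothetical type names:

```cpp
// What the AI remembers about a structure it ordered.
struct ExpectedStructure {
    double expectedFinishTime;  // game time in seconds at which it should complete
    bool observed;              // has the finished structure actually been seen?
};

enum class Expectation { Pending, Confirmed, Expired };

// Decides whether to keep waiting, trust the state, or give up and rebuild.
// 'gracePeriod' is how long past the expected finish time the AI waits before
// assuming the structure was destroyed, cancelled, or blocked.
Expectation Evaluate(const ExpectedStructure& s, double now, double gracePeriod) {
    if (s.observed) return Expectation::Confirmed;
    if (now > s.expectedFinishTime + gracePeriod) return Expectation::Expired;
    return Expectation::Pending;
}
```

With `expectedFinishTime = 200` and a 10 second grace period, an unobserved structure is still `Pending` at t = 205s and becomes `Expired` once t passes 210s, at which point the production thread would queue a replacement.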

Databases

A variety of databases are maintained that the AI relies on to function.

- Texture Database — A list of all textures and their classifications. When a classified texture is encountered, only its ID needs to be stored; if the original texture is needed for some reason, it can simply be looked up from the database. Although some scenery and terrain textures are classified, there are still many thousands of unclassified textures; however, these do not affect the AI's decisions. When unclassified textures are encountered they are dumped to a directory for later classification.

- Glyph Database — A list of all glyphs and their ASCII transcription. When unclassified glyphs are encountered they are dumped to a directory for later classification.

- Unit Database — A variety of stats for all units are kept. Fields include resource and supply cost, race, class (combat, worker, building, etc.), tech requirements, attack types and range, build time, hotkey, and the number of triangles in the unit's dominant render call. The unit database is provided in the code listing.

- Maps — There are several programs that do extensive map analysis for Starcraft I. For SC2 I do my own map annotation using a program written just for this purpose. Each base on the map is annotated with the geyser, ramp, and approximate build locations, as well as a good location to assault the ramp from, and whether the base is a debris-blocked or island base.

- Personas — The AI maintains a set of personas it adopts when fighting humans. These are designed to intimidate the person with the AI's ability to type very complicated and computationally intensive sentences in the middle of battles. Currently this feature is not well developed since it does not affect the win-loss ratio much and I've decided not to unleash the AI on hapless Battle.net players, but I may revisit it eventually.

Console Overlay

The interceptor provides a simple interface for displaying text to the screen. This is very useful for debugging the AI: verifying that it is capturing the frame information correctly and that its internal knowledge base is correct, debugging thread priority problems, and seeing which programs are failing and why.

Here the left console is showing the frame rate, the current grid actions available, statistics about its army, what units it is trying to produce, what units are currently in production and how long it will take them to complete. The 2nd console shows recently performed actions. The 3rd console shows the output of recently executed programs and provides easily accessible "cout" or "printf" functionality to programs. Finally, the right-most console shows the state of each thread (gray threads are asleep, green threads are currently executing, yellow threads have no work, and red threads have work ready but are blocked by another thread.) Clearly, these consoles can be changed based on what parts of the AI are being modified, and in general, they are extremely effective at investigating issues and understanding why the AI is acting in a certain way.

Vertex Shader Simulation

The AI needs to know where units are on the screen in order to reason about army distribution and flanking, determine what safe retreat or attack directions are, and to click on units. The position stored in a vertex buffer, however, is in object space and must be transformed by the currently bound vertex shader to determine the screen-space coordinates. This transformation can be very expensive for long and complicated vertex shaders and requires parsing and emulating the shader bytecode. One approach to avoid this is to use the IDirect3DDevice9::ProcessVertices method; however, this requires software vertex processing and is very slow. I typically observed the frame rate drop by a factor of at least four, which severely lowers the AI's APM throughput.

To solve this problem I use a deferred shader compiler with a software emulation fallback. The AI maintains a list of shaders that it has a C++ implementation of. When a vertex shader is encountered, if there is already a C++ implementation, it is used to transform the vertices to screen space. Otherwise, the AI builds a C++ implementation of the shader using a very mechanical process, and sets it up so this will be compiled the next time the AI is compiled. In the meantime, the bytecode is emulated (which is extremely slow, but still lets the AI handle newly encountered shaders elegantly.) You can see what the C++ implementation looks like here.
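The dispatch logic described here (use the compiled C++ version if one exists, otherwise emulate and remember the shader for the next build) can be sketched as below. This is only an illustration: keying shaders by a 32-bit hash, the `Vec4` type, and all class and method names are assumptions, and the emulator is passed in as a callback in place of a real bytecode interpreter.

```cpp
#include <cstdint>
#include <functional>
#include <set>
#include <unordered_map>
#include <utility>

struct Vec4 { float x, y, z, w; };

// A compiled C++ implementation of one specific vertex shader.
using CompiledShader = std::function<Vec4(const Vec4&)>;
// Slow fallback that interprets the bytecode of the shader with the given hash.
using ShaderEmulator = std::function<Vec4(std::uint32_t, const Vec4&)>;

class ShaderDispatcher {
public:
    explicit ShaderDispatcher(ShaderEmulator emulator) : emulate_(std::move(emulator)) {}

    // Registers a C++ implementation generated during an earlier run.
    void RegisterCompiled(std::uint32_t hash, CompiledShader fn) {
        compiled_[hash] = std::move(fn);
    }

    // Transforms one vertex with the fast path if available; otherwise falls
    // back to emulation and queues the shader for C++ generation.
    Vec4 Transform(std::uint32_t hash, const Vec4& v) {
        auto it = compiled_.find(hash);
        if (it != compiled_.end()) return it->second(v);
        pendingCompile_.insert(hash);
        return emulate_(hash, v);
    }

    // Shaders that were emulated and still need a C++ implementation.
    const std::set<std::uint32_t>& PendingCompile() const { return pendingCompile_; }

private:
    ShaderEmulator emulate_;
    std::unordered_map<std::uint32_t, CompiledShader> compiled_;
    std::set<std::uint32_t> pendingCompile_;
};
```

The point of the design is that an unfamiliar shader never breaks the AI; it only costs frame rate until the next rebuild.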

Miscellaneous

- Input Throttling — SC2 can accept an arbitrary number of keyboard commands per frame. However, if a mouse move and mouse click event occur in the same frame it will be interpreted as a drag event, so normally a click takes at least two frames. This is not a significant problem, since most times we won't know where we want to click until the first click resolves and the AI can see the new frame.

- Graphics Settings — Because the number of actions the AI can take is limited by the frame rate, the AI only plays on lowest graphics settings and will not recognize textures if a higher graphics quality is used (although it could easily be trained for this.) For simplicity, it uses ally color mode which makes playing on a team with human allies easier. Finally, to make debugging easier and for slight performance gains, it always plays in a window. Normally I use 1024x768; modifying the size modifies the font resolution used and requires relabeling a large number of glyphs.

- Parameter Files — Because the interceptor and AI are both located in DLLs they cannot take command line parameters, and even if they could I prefer configuration files. Both the AI and interceptor require separate configuration files.

- Builds — The production thread executes the specified build. For now, I have hard-coded a variety of strategies for the races and select one at random. I am working on improving the builds and making them more reactive.
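The input throttling constraint from the list above (a mouse move and a mouse click must not reach SC2 in the same frame, while keyboard commands are unrestricted) can be sketched as a small per-frame queue. All names here are hypothetical, and the sketch does not show how events are actually delivered to SC2.

```cpp
#include <deque>
#include <vector>

enum class InputType { KeyPress, MouseMove, MouseClick };

struct InputEvent {
    InputType type;
    int x;    // screen position for mouse events, unused for key presses
    int y;
    int key;  // key code for key presses, unused for mouse events
};

class InputThrottle {
public:
    void Queue(const InputEvent& e) { queue_.push_back(e); }

    // Returns the events to send during the current frame, in order.
    // Stops as soon as sending the next event would put a mouse move and a
    // mouse click in the same frame; the rest waits for a later frame.
    std::vector<InputEvent> NextFrame() {
        std::vector<InputEvent> out;
        bool moved = false;
        bool clicked = false;
        while (!queue_.empty()) {
            const InputEvent e = queue_.front();
            if (e.type == InputType::MouseClick && moved) break;
            if (e.type == InputType::MouseMove && clicked) break;
            if (e.type == InputType::MouseMove) moved = true;
            if (e.type == InputType::MouseClick) clicked = true;
            out.push_back(e);
            queue_.pop_front();
        }
        return out;
    }

private:
    std::deque<InputEvent> queue_;
};
```

Queuing a key press, a move, and a click therefore sends the key press and the move in one frame and the click in the next, which matches the two-frame click described above.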

Results and Performance

The most interesting part of the AI is watching it play and abuse its ability to perform an absurd number of actions per minute (APM). The AI has a "competitive" mode that it uses to achieve very high frame rates and consequently a very large number of actions per second. In this mode, the AI disables all logging, discards all but the most essential GPU rendering events, and does not output to the console. This achieves an "idle" APM of about 500, and its battle APM is between 1000 and 2000 (as measured by SC2's replay counter). Not all of these actions are useful, and battle micro is a very difficult thing to express as an algorithm; occasionally its battle commands might be useless or even detrimental compared to the default combat behavior.

At the moment I have tweaked my AI so that it will always beat all the non-insane computer opponents on all ladder maps and all possible race matchups. Its primary purpose is to defeat human play-styles, so these are not excellent tests, but they do demonstrate that it can win in a standard matchup against a non-trivial opponent. Viewed from the ivory tower of theoretical machine learning, the availability of "independent and identically distributed" opponents provided by Battle.net is a rich opportunity to quantify and improve the AI's skill set. Given enough time to play games on Battle.net, the AI can perform simple variable optimization to determine properties such as what army size is appropriate for attacking. This could then be extended to more complex learning, such as examining replay files (both its own collection and downloaded replays from professional matches) and datamining these to improve its performance. Unfortunately, I have not yet explored any of these options because it is unlikely that random Quick Match players would consent to playing against the AI, and thus far I have only tested it against the computer (via the map editor) and my friends (which is the only time I have played it on Battle.net). I consequently also can't provide a ranking for the AI in the SC2 league system, but it would likely rank wherever the "very hard" AI difficulty ranks (possibly slightly lower, as I have not extensively tested it against extremely aggressive rushes).

Video

Capturing the AI in video format is no simple task. The AI severely messes with the D3D9 command stream, and game capture techniques like the one used in FRAPS do not work. While the AI has fast access to the front buffer, it is a significant performance hit for it to compress this to a file each frame. For lack of a better option, at the moment I use my own Video Capture program, which can capture SC2 at a measly 15 frames per second on my best computer. This slow rate is due to the fact that the AI never looks at one part of the screen for more than a few frames and the compressor must constantly insert new keyframes to avoid massive compression artifacts. My capture program is also unable to capture the mouse correctly for Starcraft 2, making the action even harder to follow. In its competitive mode, the AI achieves approximately 3 milliseconds per frame (~333 frames per second), so at the 15 fps capture rate the AI would normally be taking about 22 actions for every one frame in the video. Since video capture, logging, console output, and GPU rendering all slow down the game, the frame rate during video captures is approximately 60 fps, so the video shows only about one in every four frames. Although I think my current AI videos are an extremely poor depiction of the AI's abilities, I've decided to post one online anyway until I can better solve the video capture problem. I apologize that nothing terribly interesting happens in the video, but I hope it at least serves to demonstrate the AI's most basic mechanisms such as constructing buildings, training units, controlling its army, and scouting. Unfortunately, the low frame rate makes it almost impossible to follow high-frequency actions like its impressive blink micro.

Code

The code for the mirror driver part of the AI is given here. The reactive part of the AI requires its texture, font, map, etc. databases, which are not going to be posted as the main reason this code is online is to show how to build a version of d3d9Callback.dll that interacts with the d3d9.dll produced by the D3D9 API Interceptor project. If you attach the resulting d3d9.dll and d3d9Callback.dll to any application that uses D3D9, the ability to generate the frame captures, intercept textures, etc. will still work fine (the AI only takes actions when it recognizes a SC2 scene.) The code for the map annotator used to classify information about all the SC2 ladder maps is also provided.

This code is all based off my BaseCode. Specifically you will need the contents of Includes.zip on your include path, Libraries.zip on your library path, and DLLs.zip on your system path.

SC2MapAnnotator Code Listing

Total lines of code: 608

D3D9CallbackSC2.zip (includes project file)

AIParameters.txt

Units.txt

D3D9CallbackSC2 Code Listing

Total lines of code: 18063