Publications 2019

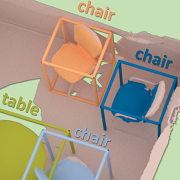

3D-SIS: 3D Semantic Instance Segmentation of RGB-D Scans

CVPR 2019 (Oral)

We introduce 3D-SIS, a novel neural network architecture for 3D semantic instance segmentation in commodity RGB-D scans.

[paper] [video] [bibtex] [project page]

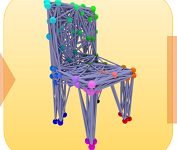

Scan2CAD: Learning CAD Model Alignment in RGB-D Scans

CVPR 2019 (Oral)

We present Scan2CAD, a novel data-driven method that learns to align clean 3D CAD models from a shape database to the noisy and incomplete geometry of an RGB-D scan.

[paper] [video] [bibtex] [project page]

Scan2Mesh: From Unstructured Range Scans to 3D Meshes

CVPR 2019

We introduce Scan2Mesh, a novel data-driven approach built on a generative neural network architecture that creates 3D meshes as indexed face sets, conditioned on an input partial scan.

[paper] [bibtex] [project page]

Publications 2018

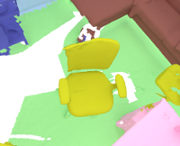

3DMV: Joint 3D-Multi-View Prediction for 3D Semantic Scene Segmentation

ECCV 2018

We present 3DMV, a novel method for 3D semantic scene segmentation of RGB-D scans in indoor environments using a joint 3D-multi-view prediction network. In contrast to existing methods that either use geometry or RGB data as input for this task, we combine both data modalities in a joint, end-to-end network architecture.

[paper] [code] [bibtex] [project page]

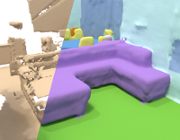

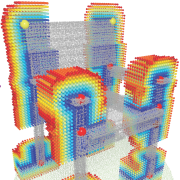

ScanComplete: Large-Scale Scene Completion and Semantic Segmentation for 3D Scans

CVPR 2018

We introduce ScanComplete, a novel data-driven approach for taking an incomplete 3D scan of a scene as input and predicting a complete 3D model along with per-voxel semantic labels. The key contribution of our method is its ability to handle large scenes with varying spatial extent, managing the cubic growth in data size as scene size increases.

[paper] [video] [code] [bibtex] [project page]

Publications 2017

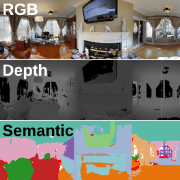

Matterport3D: Learning from RGB-D Data in Indoor Environments

3DV 2017

In this paper, we introduce Matterport3D, a large-scale RGB-D dataset containing 10,800 panoramic views from 194,400 RGB-D images of 90 building-scale scenes.

[bibtex] [project page]

3DLite: Towards Commodity 3D Scanning for Content Creation

ACM Transactions on Graphics 2017 (TOG)

We present 3DLite, a novel approach to reconstruct 3D environments using consumer RGB-D sensors, making a step towards directly utilizing captured 3D content in graphics applications, such as video games, VR, or AR. Rather than reconstructing an accurate one-to-one representation of the real world, our method computes a lightweight, low-polygonal geometric abstraction of the scanned geometry.

[paper] [video] [bibtex] [supplemental] [project page]

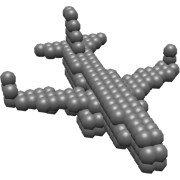

Shape Completion using 3D-Encoder-Predictor CNNs and Shape Synthesis

CVPR 2017 (Spotlight)

We introduce a data-driven approach to complete partial 3D shapes through a combination of volumetric deep neural networks and 3D shape synthesis. From a partially-scanned input shape, our method first infers a low-resolution -- but complete -- output.

[paper] [bibtex] [project page]

ScanNet: Richly-annotated 3D Reconstructions of Indoor Scenes

CVPR 2017 (Spotlight)

We introduce ScanNet, an RGB-D video dataset containing 2.5M views in 1513 scenes annotated with 3D camera poses, surface reconstructions, and semantic segmentations.

[paper] [bibtex] [project page]

ACM Transactions on Graphics 2017 (TOG)

We introduce a novel, real-time, end-to-end 3D reconstruction framework, with a robust pose optimization strategy based on sparse feature matches and dense geometric and photometric alignment. One main contribution is the ability to update the reconstructed model on-the-fly as new (global) pose optimization results become available.

[paper] [video] [bibtex] [project page]

Publications 2016

Volumetric and Multi-View CNNs for Object Classification on 3D Data

CVPR 2016 (Spotlight)

In this paper, we improve both Volumetric CNNs and Multi-view CNNs by introducing new distinct network architectures. Overall, we are able to outperform current state-of-the-art methods for both Volumetric CNNs and Multi-view CNNs.

[paper] [bibtex] [project page]

Publications 2015

Shading-based Refinement on Volumetric Signed Distance Functions

ACM Transactions on Graphics 2015 (TOG)

We present a novel method to obtain fine-scale detail in 3D reconstructions generated with low-budget RGB-D cameras or other commodity scanning devices.

[paper] [video] [bibtex] [project page]

Database-Assisted Object Retrieval for Real-Time 3D Reconstruction

Eurographics 2015

We present a novel reconstruction approach based on retrieving objects from a 3D shape database while scanning an environment in real-time. With this approach, we are able to replace scanned RGB-D data with complete, hand-modeled objects from shape databases.

[paper] [video] [bibtex] [project page]

Publications 2014

Combining Inertial Navigation and ICP for Real-time 3D Surface Reconstruction

Eurographics 2014

We present a novel method to improve the robustness of real-time 3D surface reconstruction by incorporating inertial sensor data when determining inter-frame alignment. With commodity inertial sensors, we can significantly reduce the number of iterative closest point (ICP) iterations required per frame.

[paper] [video] [bibtex] [project page]