Publications

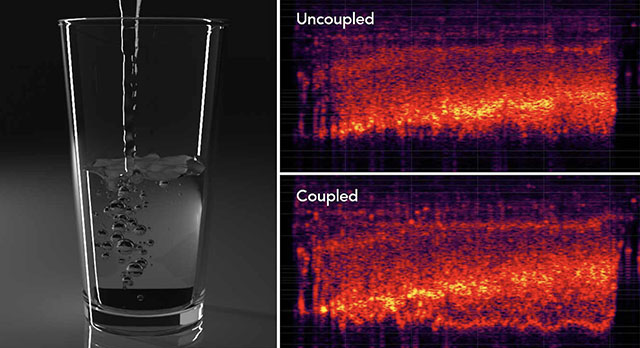

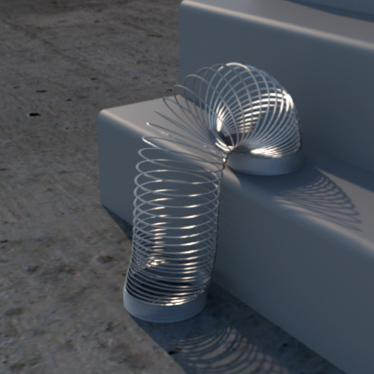

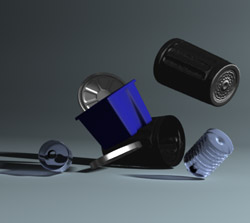

WaveBlender: Practical Sound-Source Animation in Blended Domains

Kangrui Xue | Jui-Hsien Wang | Timothy R. Langlois | Doug L. James

ACM SIGGRAPH Asia 2024

Synthesizing sound sources for modern physics-based animation is challenging due to rapidly moving, deforming, and vibrating interfaces that produce acoustic waves within the air domain. Not only must the methods synthesize sounds that are faithful and free of digital artifacts, but, in order to be practical, the methods should be easy to implement and support fast parallel hardware. Unfortunately, no current solutions satisfy these many conflicting constraints.

In this paper, we present WaveBlender, a simple and fast GPU-accelerated finite-difference time-domain (FDTD) acoustic wavesolver for simulating animation sound sources on uniform grids. To resolve continuously moving and deforming solid- or fluid-air interfaces on coarse grids, we derive a novel scheme that can temporally blend between two subsequent finite-difference discretizations. Our blending scheme requires minimal modification of the original FDTD update equations: a single new blending parameter β (defined at cell centers) and approximate velocity-level boundary conditions. Sound synthesis results are demonstrated for a variety of existing physics-based sound sources (water, modal, thin shells, kinematic deformers), along with point-like sources for tiny rigid bodies. Our solver is reliable across different resolutions, GPU-friendly by design, and can be 1000x faster than prior CPU-based wavesolvers for these animation sound problems.
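
For readers new to FDTD, the sketch below shows the plain staggered-grid (leapfrog) update that such wavesolvers start from, reduced to 1D with rigid end walls. It is an illustration under stated assumptions, not WaveBlender itself: it omits the blending parameter β, the velocity-level boundary conditions, and GPU parallelism. The function name and the parameter values (air with ρ = 1.2 kg/m³, c = 343 m/s, Courant number 0.5) are chosen here for illustration only.

```python
import math
from typing import List, Tuple


def simulate_1d_fdtd(n_cells: int = 400, c: float = 343.0, rho: float = 1.2,
                     dx: float = 0.01, courant: float = 0.5,
                     n_steps: int = 200) -> Tuple[List[float], float]:
    """Leapfrog FDTD for 1D linear acoustics on a staggered grid (illustrative).

    Pressure p lives at cell centers; particle velocity v lives on cell faces.
    Both ends are rigid walls (v = 0). Returns the final pressure and dt.
    """
    dt = courant * dx / c  # 1D stability (CFL) requires c * dt / dx <= 1
    p = [0.0] * n_cells
    v = [0.0] * (n_cells + 1)  # v[0] and v[n_cells] are never updated: rigid walls
    center, width = n_cells // 2, 5.0
    for i in range(n_cells):  # initial Gaussian pressure pulse, fluid at rest
        p[i] = math.exp(-((i - center) / width) ** 2)

    for _ in range(n_steps):
        # dv/dt = -(1/rho) dp/dx, evaluated on interior faces
        for i in range(1, n_cells):
            v[i] -= dt / (rho * dx) * (p[i] - p[i - 1])
        # dp/dt = -rho c^2 dv/dx, evaluated at cell centers
        for i in range(n_cells):
            p[i] -= rho * c * c * dt / dx * (v[i + 1] - v[i])
    return p, dt
```

With a Courant number of 0.5 the pulse splits into two halves that each move about half a cell per step, so after 200 steps the right-going peak sits roughly 100 cells right of center. Embedded moving interfaces are exactly what this bare update cannot represent on a coarse grid, which is the gap the blending scheme described above targets.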

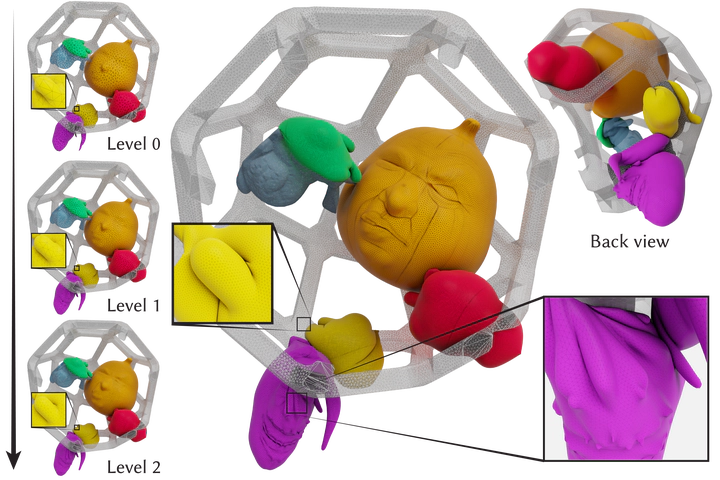

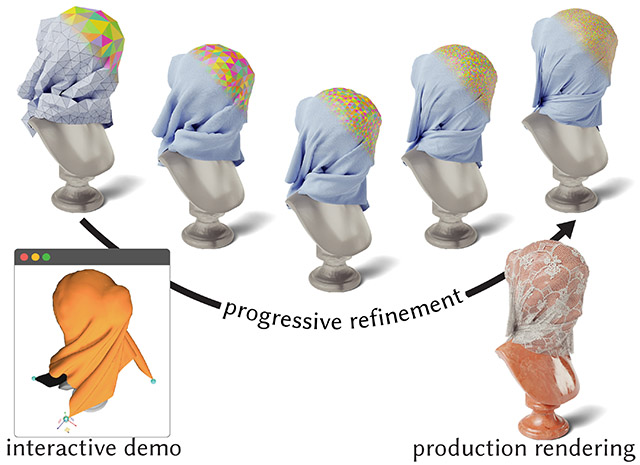

Progressive Dynamics for Cloth and Shell Animation

Jiayi Eris Zhang | Doug L. James | Danny M. Kaufman

ACM Transactions on Graphics (SIGGRAPH 2024)

We propose Progressive Dynamics, a coarse-to-fine, level-of-detail simulation method for the physics-based animation of complex frictionally contacting thin shell and cloth dynamics. Progressive Dynamics provides tight-matching consistency and progressive improvement across levels, with comparable quality and realism to high-fidelity, IPC-based shell simulations [Li et al. 2021] at the finest resolutions. Together these features enable an efficient animation-design pipeline with predictive coarse-resolution previews providing rapid design iterations for a final, to-be-generated, high-resolution animation. In contrast, designing such scenes with comparable dynamics previously required prohibitively slow design iterations via repeated direct simulations on high-resolution meshes. We evaluate and demonstrate Progressive Dynamics's features over a wide range of challenging stress-tests, benchmarks, and animation design tasks. Here Progressive Dynamics efficiently computes consistent previews at costs comparable to coarsest-level direct simulations. Its matching progressive refinements across levels then generate rich, high-resolution animations with high-speed dynamics, impacts, and the complex detailing of the dynamic wrinkling, folding, and sliding of frictionally contacting thin shells and fabrics.

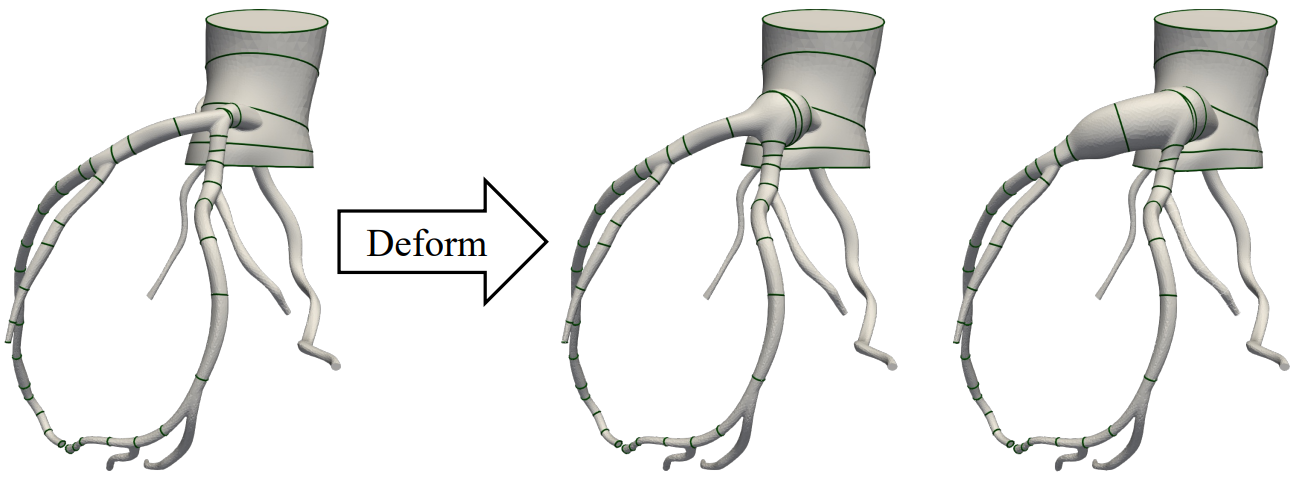

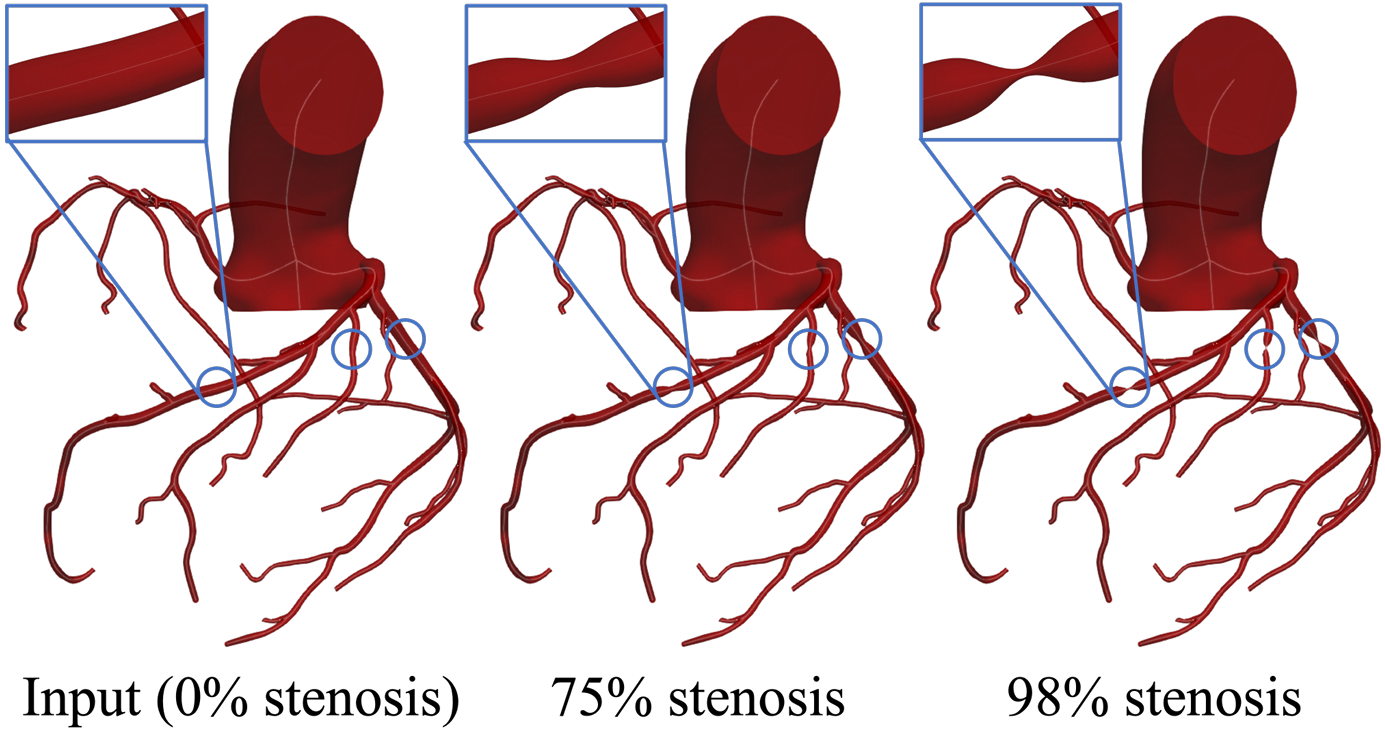

Deforming Patient-Specific Models of Vascular Anatomies to Represent Stent Implantation via Extended Position Based Dynamics

Jonathan Pham | Fanwei Kong | Doug L. James | Jeffrey A. Feinstein | Alison L. Marsden

Cardiovasc Eng Technol. 2024 Dec;15(6):760-774.

Purpose: Angioplasty with stent placement is a widely used treatment strategy for patients with stenotic blood vessels. However, it is often challenging to predict the outcomes of this procedure for individual patients. Image-based computational fluid dynamics (CFD) is a powerful technique for making these predictions. To perform CFD analysis of a stented vessel, a virtual model of the vessel must first be created. This model is typically made by manipulating two-dimensional contours of the vessel in its pre-stent state to reflect its post-stent shape. However, improper contour-editing can cause invalid geometric artifacts in the resulting mesh that then distort the subsequent CFD predictions. To address this limitation, we have developed a novel shape-editing method that deforms surface meshes of stenosed vessels to create stented models.

Methods: Our method uses physics-based simulations via Extended Position Based Dynamics to guide these deformations. We embed an inflating stent inside a vessel and apply collision-generated forces to deform the vessel and expand its cross-section.
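
As a hedged illustration of what one Extended Position Based Dynamics (XPBD) solver step does, the sketch below projects a single compliant distance constraint C = |x₁ − x₂| − L between two particles with the standard XPBD multiplier update Δλ = (−C − α̃λ)/(w₁ + w₂ + α̃), where α̃ = α/Δt² and wᵢ are inverse masses. It does not model the stent, the collisions, or the vessel mesh from the paper; the function name and arguments are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def xpbd_distance_step(x1: Sequence[float], x2: Sequence[float],
                       w1: float, w2: float, rest_length: float,
                       compliance: float, dt: float,
                       lam: float) -> Tuple[List[float], List[float], float]:
    """One XPBD projection of the constraint C = |x1 - x2| - rest_length.

    w1, w2 are inverse masses (0 pins a particle); compliance is alpha
    (inverse stiffness, 0 = rigid); lam is the accumulated Lagrange multiplier.
    """
    d = [a - b for a, b in zip(x1, x2)]
    dist = math.sqrt(sum(ci * ci for ci in d))
    n = [ci / dist for ci in d]  # gradient of C w.r.t. x1 (and -n w.r.t. x2)
    C = dist - rest_length
    alpha_tilde = compliance / (dt * dt)  # time-step-scaled compliance
    dlam = (-C - alpha_tilde * lam) / (w1 + w2 + alpha_tilde)
    new_x1 = [a + w1 * dlam * ni for a, ni in zip(x1, n)]
    new_x2 = [b - w2 * dlam * ni for b, ni in zip(x2, n)]
    return new_x1, new_x2, lam + dlam
```

In a full solver, λ is reset to zero at the start of each time step and accumulated over several iterations that sweep all constraints. With zero compliance and a single constraint, one call already restores the rest length; a positive compliance only partially corrects it, which is what makes the deformation behave elastically rather than rigidly.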

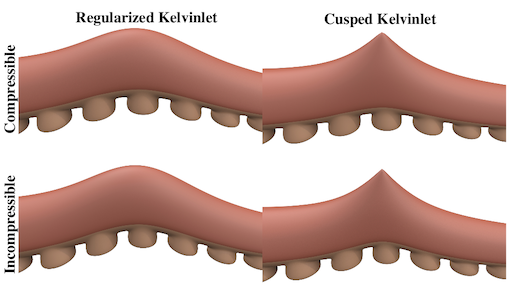

Virtual shape-editing of patient-specific vascular models using Regularized Kelvinlets

Jonathan Pham | Fanwei Kong | Doug L. James | Alison L. Marsden

IEEE Transactions on Biomedical Engineering, 2024

Objective: Cardiovascular diseases, and the interventions performed to treat them, can lead to changes in the shape of patient vasculatures and their hemodynamics. Computational modeling and simulations of patient-specific vascular networks are increasingly used to quantify these hemodynamic changes, but they require modifying the shapes of the models. Existing methods to modify these shapes include editing 2D lumen contours prescribed along vessel centerlines and deforming meshes with geometry-based approaches. However, these methods can require extensive by-hand prescription of the desired shapes and often do not work robustly across a range of vascular anatomies. To overcome these limitations, we develop techniques to modify vascular models using physics-based principles that can automatically generate smooth deformations and readily apply them across different vascular anatomies.

Methods: We adapt Regularized Kelvinlets, analytical solutions to linear elastostatics, to perform elastic shape-editing of vascular models. The Kelvinlets are packaged into three methods that allow us to artificially create aneurysms, stenoses, and tortuosity.

Results: Our methods are able to generate such geometric changes across a wide range of vascular anatomies. We demonstrate their capabilities by creating sets of aneurysms, stenoses, and tortuosities with varying shapes and sizes on multiple patient-specific models.

Conclusion: Our Kelvinlet-based deformers allow us to edit the shape of vascular models, regardless of their anatomical locations, and parametrically vary the size of the geometric changes.

Significance: These methods will enable researchers to more easily perform virtual-surgery-like deformations, computationally explore the impact of vascular shape on patient hemodynamics, and generate synthetic geometries for data-driven research.
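
The building block behind such deformers is the regularized Kelvinlet of de Goes and James [2017]. As we understand the published closed form, the displacement at offset r from a regularized point load f is u(r) = [((a − b)/r_ε) I + (b/r_ε³) r rᵀ + (aε²/(2r_ε³)) I] f, with r_ε = √(r² + ε²), a = 1/(4πμ) and b = a/(4(1 − ν)). The minimal "grab" brush below scales f so that the brush center moves by exactly the requested displacement. The shear modulus μ, the Poisson ratio ν = 0.45, and the function names are assumptions for illustration; how the paper packages Kelvinlets into its aneurysm, stenosis, and tortuosity tools is not shown.

```python
import math
from typing import List, Sequence, Tuple


def _lame_terms(mu: float, nu: float) -> Tuple[float, float]:
    a = 1.0 / (4.0 * math.pi * mu)
    b = a / (4.0 * (1.0 - nu))
    return a, b


def regularized_kelvinlet(r: Sequence[float], f: Sequence[float], eps: float,
                          mu: float = 1.0, nu: float = 0.45) -> List[float]:
    """Displacement at offset r from a regularized point load f (radius eps)."""
    a, b = _lame_terms(mu, nu)
    r_eps = math.sqrt(sum(ri * ri for ri in r) + eps * eps)
    r_dot_f = sum(ri * fi for ri, fi in zip(r, f))
    coeff_identity = (a - b) / r_eps + a * eps * eps / (2.0 * r_eps ** 3)
    coeff_outer = b / r_eps ** 3  # multiplies (r r^T) f = r (r . f)
    return [coeff_identity * fi + coeff_outer * r_dot_f * ri for ri, fi in zip(r, f)]


def grab_brush(x: Sequence[float], center: Sequence[float],
               displacement: Sequence[float], eps: float,
               mu: float = 1.0, nu: float = 0.45) -> List[float]:
    """Kelvinlet 'grab' brush: moves the brush center by exactly `displacement`."""
    a, b = _lame_terms(mu, nu)
    c = 2.0 / (3.0 * a - 2.0 * b)  # at r = 0 the kernel equals (3a - 2b) / (2 eps)
    f = [c * eps * di for di in displacement]
    r = [xi - ci for xi, ci in zip(x, center)]
    return regularized_kelvinlet(r, f, eps, mu, nu)
```

Because the load is normalized by the kernel value at r = 0, the brush result does not depend on μ, and ε alone sets how far the smooth falloff reaches.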

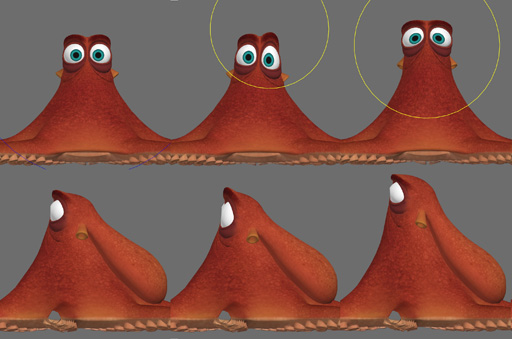

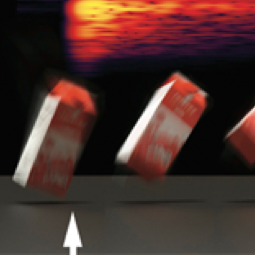

ViCMA: Visual Control of Multibody Animations

Doug L. James | David I.W. Levin

ACM SIGGRAPH Asia 2023

Motion control of large-scale, multibody physics animations with contact is difficult. Existing approaches, such as those based on optimization, are computationally daunting, and, as the number of interacting objects increases, can fail to find satisfactory solutions. We present a new, complementary method for the visual control of multibody animations that exploits object motion and visibility, and has overall cost comparable to a single simulation. Our method is highly practical, and is demonstrated on numerous large-scale, contact-rich examples involving both rigid and deformable bodies.

Progressive Shell Quasistatics for Unstructured Meshes

Jiayi Eris Zhang | Jérémie Dumas | Yun (Raymond) Fei | Alec Jacobson | Doug L. James | Danny M. Kaufman

ACM Transactions on Graphics (SIGGRAPH Asia 2023)

Thin shell structures exhibit complex behaviors critical for modeling and design across wide-ranging applications. Capturing their mechanical response requires finely detailed, high-resolution meshes. Corresponding simulations for predicting equilibria with these meshes are expensive, whereas coarse-mesh simulations can be fast but generate unacceptable artifacts and inaccuracies. The recently proposed progressive simulation framework [Zhang et al. 2022] offers a promising avenue to address these limitations with consistent and progressively improving simulation over a hierarchy of increasingly higher-resolution models. Unfortunately, it is currently severely limited in application to meshes and shapes generated via Loop subdivision.

We propose Progressive Shell Quasistatics to extend progressive simulation to the high-fidelity modeling and design of all input shell (and plate) geometries with unstructured (as well as structured) triangle meshes. To do so, we construct a fine-to-coarse hierarchy with a novel nonlinear prolongation operator custom-suited for curved-surface simulation that is rest-shape preserving, supports complex curved boundaries, and enables the reconstruction of detailed geometries from coarse-level meshes. Then, to enable convergent, high-quality solutions with robust contact handling, we propose a new, safe, and efficient shape-preserving upsampling method that ensures non-intersection and strain limits during refinement. With these core contributions, Progressive Shell Quasistatics enables, for the first time, wide generality for progressive simulation, including support for arbitrary curved-shell geometries, progressive collision objects, curved boundaries, and unstructured triangle meshes – all while ensuring that preview and final solutions remain free of intersections. We demonstrate these features across a wide range of stress tests where progressive simulation captures the wrinkling, folding, twisting, and buckling behaviors of frictionally contacting thin shells with orders-of-magnitude speed-up in examples over direct fine-resolution simulation.

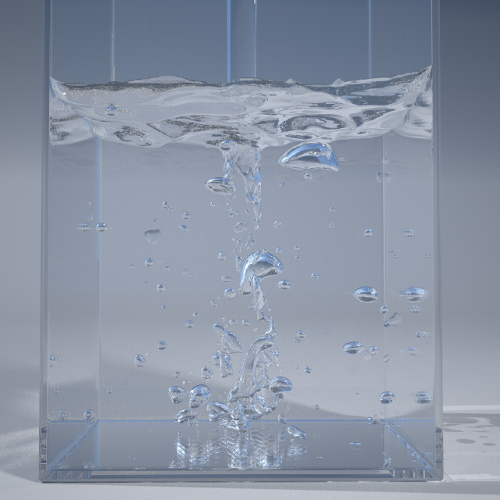

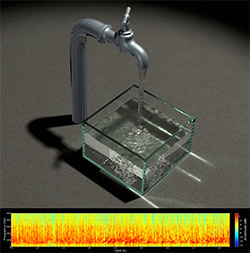

Improved Water Sound Synthesis Using Coupled Bubbles

Kangrui Xue | Ryan M. Aronson | Jui-Hsien Wang | Timothy R. Langlois | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2023)

We introduce a practical framework for synthesizing bubble-based water sounds that captures the rich inter-bubble coupling effects responsible for low-frequency acoustic emissions from bubble clouds. We propose coupled bubble oscillator models with regularized singularities, and techniques to reduce the computational cost of time stepping with dense, time-varying mass matrices. Airborne acoustic emissions are estimated using finite-difference time-domain (FDTD) methods. We propose a simple, analytical surface acceleration model, and a sample-and-hold GPU wavesolver that is simple and faster than prior CPU wavesolvers. Sound synthesis results are demonstrated using bubbly flows from incompressible, two-phase simulations, as well as procedurally generated examples using single-phase FLIP fluid animations. Our results demonstrate sound simulations with hundreds of thousands of bubbles, and perceptually significant frequency transformations with fuller low-frequency content.
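
The low-frequency shift attributed to inter-bubble coupling can be seen in a textbook-style model of two coupled bubble volume oscillators: each bubble has the Minnaert stiffness 3γp₀/(4πR³) and self-inertance ρ/(4πR), and a mutual-inertance term ρ/(4πd) couples them through a dense mass matrix. This is a minimal sketch, not the paper's model: it uses no regularization (the raw 1/d coupling is only valid while d² > r₁r₂), fixed radii, and assumed constants for air bubbles in water; names are hypothetical.

```python
import math
from typing import Tuple

GAMMA, P0, RHO = 1.4, 101325.0, 998.0  # assumed: air bubbles in water at 1 atm


def minnaert_frequency(radius: float) -> float:
    """Natural frequency (Hz) of a single spherical air bubble."""
    return math.sqrt(3.0 * GAMMA * P0 / RHO) / (2.0 * math.pi * radius)


def coupled_pair_frequencies(r1: float, r2: float, d: float) -> Tuple[float, float]:
    """Mode frequencies (Hz) of two bubbles whose volume oscillations are
    coupled through a 1/d mutual-inertance term in the mass matrix."""
    m1 = RHO / (4.0 * math.pi * r1)  # self inertance
    m2 = RHO / (4.0 * math.pi * r2)
    m12 = RHO / (4.0 * math.pi * d)  # coupling: unbounded as d -> 0
    k1 = 3.0 * GAMMA * P0 / (4.0 * math.pi * r1 ** 3)  # gas stiffness
    k2 = 3.0 * GAMMA * P0 / (4.0 * math.pi * r2 ** 3)
    # det(K - lam M) = 0 is a quadratic A lam^2 + B lam + C = 0, with lam = omega^2
    A = m1 * m2 - m12 * m12
    if A <= 0.0:
        raise ValueError("unregularized model invalid: need d^2 > r1 * r2")
    B = -(k1 * m2 + k2 * m1)
    C = k1 * k2
    disc = math.sqrt(B * B - 4.0 * A * C)
    lam_hi = (-B + disc) / (2.0 * A)
    lam_lo = C / (A * lam_hi)  # Vieta's formula avoids cancellation
    return (math.sqrt(lam_lo) / (2.0 * math.pi), math.sqrt(lam_hi) / (2.0 * math.pi))
```

For two identical bubbles the in-phase mode drops to f₀/√(1 + R/d) and the anti-phase mode rises to f₀/√(1 − R/d); the in-phase mode radiates efficiently, which is consistent with clouds sounding lower than their individual bubbles.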

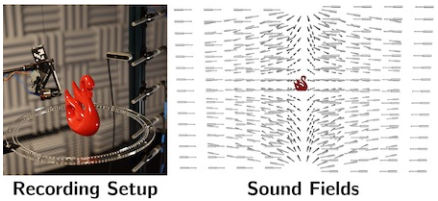

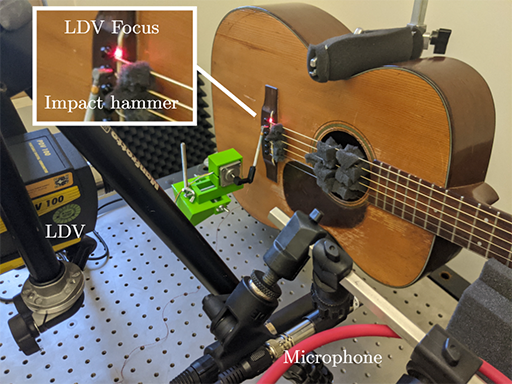

RealImpact: A Dataset of Impact Sound Fields for Real Objects

Samuel Clarke | Ruohan Gao | Mason Wang | Mark Rau | Julia Xu | Jui-Hsien Wang | Doug James | Jiajun Wu

Highlighted at IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023

Objects make unique sounds under different perturbations, environment conditions, and poses relative to the listener. While prior works have modeled impact sounds and sound propagation in simulation, we lack a standard dataset of impact sound fields of real objects for audiovisual learning and calibration of the sim-to-real gap. We present RealImpact, a large-scale dataset of real object impact sounds recorded under controlled conditions. RealImpact contains 150,000 recordings of impact sounds of 50 everyday objects with detailed annotations, including their impact locations, microphone locations, contact force profiles, material labels, and RGBD images. We make preliminary attempts to use our dataset as a reference to current simulation methods for estimating object impact sounds that match the real world. Moreover, we demonstrate the usefulness of our dataset as a testbed for acoustic and audio-visual learning via the evaluation of two benchmark tasks: listener location classification and visual acoustic matching.

Progressive Simulation for Cloth Quasistatics

Jiayi Eris Zhang | Jérémie Dumas | Yun (Raymond) Fei | Alec Jacobson | Doug L. James | Danny M. Kaufman

ACM Transactions on Graphics (SIGGRAPH Asia 2022)

The trade-off between speed and fidelity in cloth simulation is a fundamental computational problem in computer graphics and computational design. Coarse cloth models provide the interactive performance required by designers, but they cannot be simulated at higher resolutions ("up-resed") without introducing simulation artifacts and/or unpredicted outcomes, such as different folds, wrinkles and drapes. But how can a coarse simulation predict the result of an unconstrained, high-resolution simulation that has not yet been run?

We propose Progressive Cloth Simulation (PCS), a new forward simulation method for efficient preview of cloth quasistatics on exceedingly coarse triangle meshes with consistent and progressive improvement over a hierarchy of increasingly higher-resolution models. PCS provides an efficient coarse previewing simulation method that predicts the coarse-scale folds and wrinkles that will be generated by a corresponding converged, high-fidelity C-IPC simulation of the cloth drape's equilibrium. For each preview, PCS can generate an increasing-resolution sequence of consistent models that progress towards this converged solution. This successive improvement can then be interrupted at any point, for example, whenever design parameters are updated. PCS then ensures feasibility at all resolutions, so that predicted solutions remain intersection-free and capture the complex folding and buckling behaviors of frictionally contacting cloth.

svMorph: Interactive Geometry-Editing Tools for Virtual Patient-Specific Vascular Anatomies

Jonathan Pham | Sofia Wyetzner | Martin R. Pfaller | David W. Parker | Doug L. James | Alison L. Marsden

J Biomech Eng. Mar 2023, 145(3): 031001 (8 pages)

We propose svMorph, a framework for interactive virtual sculpting of patient-specific vascular anatomic models. Our framework includes three tools for the creation of tortuosity, aneurysms, and stenoses in tubular vascular geometries. These shape edits are performed via geometric operations on the surface mesh and vessel centerline curves of the input model. The tortuosity tool also uses the physics-based Oriented Particles method, coupled with linear blend skinning, to achieve smooth, elastic-like deformations. Our tools can be applied separately or in combination to produce simulation-suitable morphed models. They are also compatible with popular vascular modeling software, such as SimVascular. To illustrate our tools, we morph several image-based, patient-specific models to create a range of shape changes and simulate the resulting hemodynamics via three-dimensional, computational fluid dynamics. We also demonstrate the ability to quickly estimate the hemodynamic effects of the shape changes via the automated generation of associated zero-dimensional lumped-parameter models.
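
Linear blend skinning (LBS), which the tortuosity tool couples with Oriented Particles, deforms each surface vertex by blending the transforms of nearby control elements with convex weights. The minimal sketch below assumes the per-control affine transforms and weights are already given; in svMorph these would presumably come from the simulated oriented particles, which are not modeled here. Names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Affine = Tuple[List[List[float]], Vec3]  # (3x3 linear part, translation)


def apply_affine(T: Affine, v: Sequence[float]) -> Vec3:
    M, t = T
    return tuple(sum(M[r][c] * v[c] for c in range(3)) + t[r] for r in range(3))


def linear_blend_skinning(v: Sequence[float], transforms: Sequence[Affine],
                          weights: Sequence[float]) -> Vec3:
    """Deform rest-pose vertex v as sum_i w_i * T_i(v).

    Weights are expected to be non-negative and sum to 1 so that a shared
    rigid motion of all controls moves the vertex rigidly as well.
    """
    out = [0.0, 0.0, 0.0]
    for T, w in zip(transforms, weights):
        p = apply_affine(T, v)
        for r in range(3):
            out[r] += w * p[r]
    return (out[0], out[1], out[2])
```

Blending transformed positions (rather than, say, rotations) keeps LBS cheap and smooth, at the cost of some volume loss under large twists.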

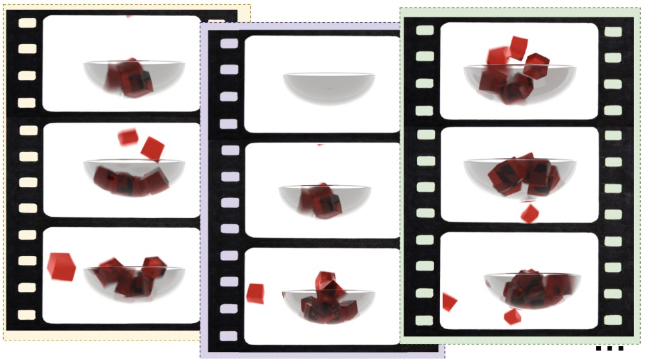

Unified Many-Worlds Browsing of Arbitrary Physics-Based Animations

Purvi Goel | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2022)

Manually tuning physics-based animation parameters to explore a simulation outcome space or achieve desired motion outcomes can be notoriously tedious. Unfortunately, this problem has motivated many sophisticated and specialized optimization-based methods for fine-grained (keyframe) control, each of which is typically limited to specific animation phenomena, usually complicated, and, unfortunately, not widely used. In this paper, we propose Unified Many-Worlds Browsing (UMWB), a practical method for sample-level control and exploration of arbitrary physics-based animations. Our approach supports browsing of large simulation ensembles of arbitrary animation phenomena by using a unified volumetric WorldPack representation based on spatiotemporally compressed voxel data associated with geometric occupancy and other low-fidelity animation state. Beyond memory reduction, the WorldPack representation also enables unified query support for interactive browsing: it provides fast evaluation of approximate spatiotemporal queries, such as occupancy tests that find ensemble samples (“worlds”) where material is either IN or NOT IN a user-specified spacetime region. The WorldPack representation also supports real-time hardware-accelerated voxel rendering by exploiting the spatially hierarchical and temporal RLE raster data structure to accelerate GPU ray tracing of compressed animations. Our UMWB implementation supports interactive browsing (and offline refinement) of ensembles containing thousands of simulation samples, and fast spatiotemporal queries and ranking. We show UMWB results using a wide variety of different physics-based animation phenomena, not just Jell-O.
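
To make the IN / NOT IN occupancy query concrete, here is a deliberately simplified stand-in for a spatiotemporally compressed voxel store: each voxel's occupancy over frames is run-length encoded, and a query returns the worlds whose material does (or does not) touch a set of voxels during a frame interval. This is not the WorldPack format, which the abstract describes as spatially hierarchical; the flat dictionary layout and all names here are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Run = Tuple[int, int, bool]     # (start_frame, length, occupied)
Voxel = Tuple[int, int, int]
World = Dict[Voxel, List[Run]]  # per-voxel occupancy over time


def rle_encode(frames: List[bool]) -> List[Run]:
    """Run-length encode one voxel's per-frame occupancy."""
    runs: List[Run] = []
    start = 0
    for t in range(1, len(frames) + 1):
        if t == len(frames) or frames[t] != frames[start]:
            runs.append((start, t - start, frames[start]))
            start = t
    return runs


def occupied_during(runs: List[Run], t0: int, t1: int) -> bool:
    """True if an occupied run overlaps the frame interval [t0, t1)."""
    return any(occ and start < t1 and start + length > t0
               for start, length, occ in runs)


def query_worlds(worlds: Dict[str, World], region: Iterable[Voxel],
                 t0: int, t1: int, want_in: bool = True) -> List[str]:
    """Names of worlds where material is IN (or NOT IN) the spacetime region."""
    region = list(region)
    hits = []
    for name, grid in worlds.items():
        inside = any(occupied_during(grid.get(v, []), t0, t1) for v in region)
        if inside == want_in:
            hits.append(name)
    return hits
```

Run-length encoding pays off because most voxels change occupancy rarely, so an interval test touches only a few runs per voxel instead of every frame.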

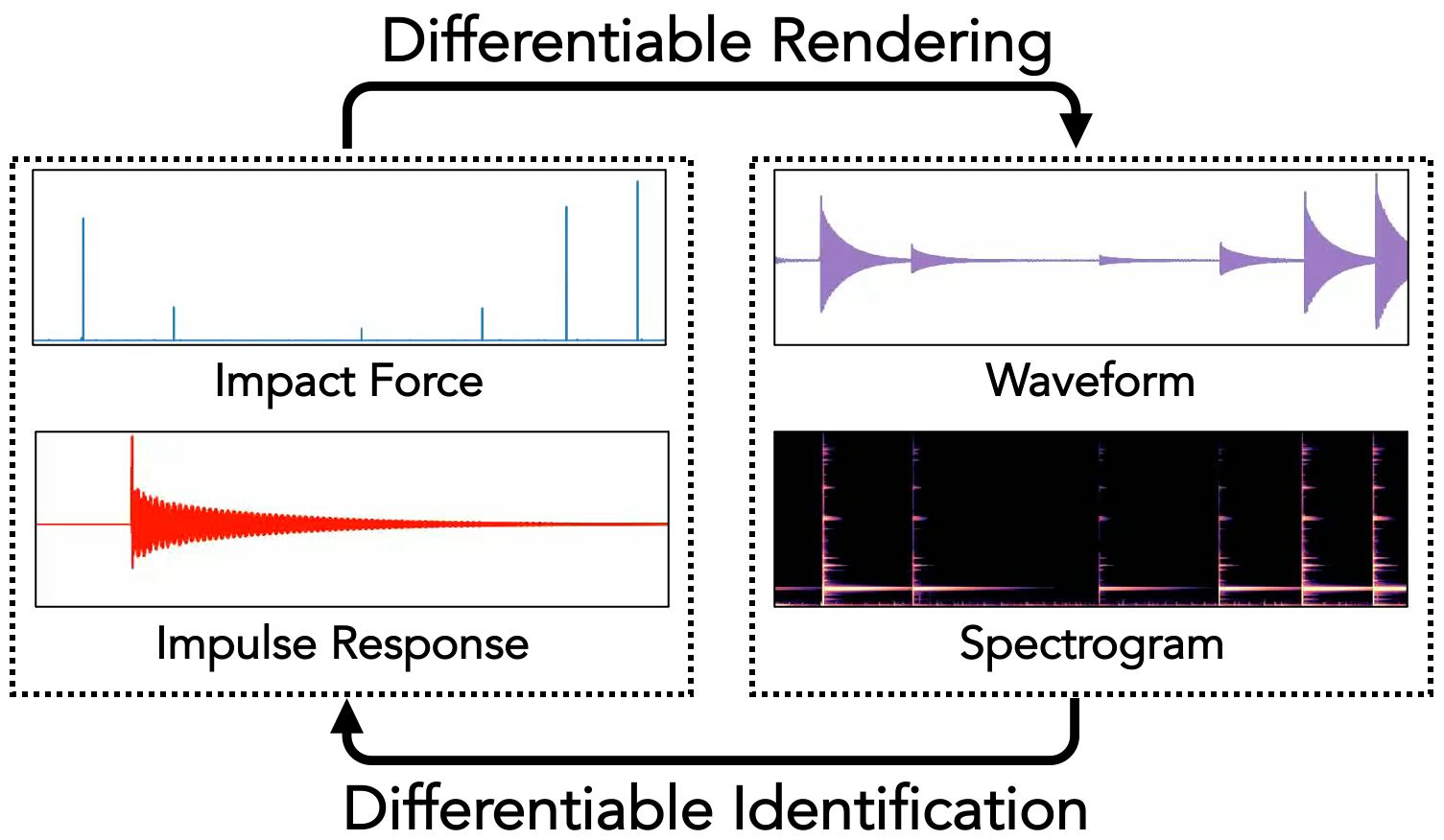

DiffImpact: Differentiable Rendering and Identification of Impact Sounds

Samuel Clarke | Negin Heravi | Mark Rau | Ruohan Gao | Jiajun Wu | Doug James | Jeannette Bohg

Conference on Robot Learning, 2021

Rigid objects make distinctive sounds during manipulation. These sounds are a function of object features, such as shape and material, and of contact forces during manipulation. Being able to infer from sound an object's acoustic properties, how it is being manipulated, and what events it is participating in could augment and complement what robots can perceive from vision, especially in cases of occlusion, low visual resolution, poor lighting, or blurred focus. Annotations on sound data are rare. Therefore, existing inference systems mostly include a sound renderer in the loop, and use analysis-by-synthesis to optimize for object acoustic properties. Optimizing parameters with respect to a non-differentiable renderer is slow and hard to scale to complex scenes. We present DiffImpact, a fully differentiable model for sounds rigid objects make during impacts, based on physical principles of impact forces, rigid object vibration, and other acoustic effects. Its differentiability enables gradient-based, efficient joint inference of acoustic properties of the objects and characteristics and timings of each individual impact. DiffImpact can also be plugged in as the decoder of an autoencoder, and trained end-to-end on real audio data, so that the encoder can learn to solve the inverse problem in a self-supervised way. Experiments demonstrate that our model's physics-based inductive biases make it more resource efficient and expressive than state-of-the-art pure learning-based alternatives, on both forward rendering of impact sounds and inverse tasks such as acoustic property inference and blind source separation of impact sounds.

Electric-to-acoustic pickup processing for string instruments: An experimental study of the guitar with a hexaphonic pickup

Mark Rau | Jonathan S. Abel | Doug James | Julius O. Smith, III

The Journal of the Acoustical Society of America (JASA), 2021

A signal processing method that imparts the response of an acoustic string instrument to an electric instrument, including frequency-dependent alterations of string decay, is proposed. This type of processing is relevant when trying to make a less resonant instrument, such as an electric guitar, sound similar to a more resonant instrument, such as an acoustic guitar. Unlike previous methods, which typically perform only equalization, our method includes detailed physics-based string damping changes by using a time-varying filter that adds frequency-dependent exponential damping. Efficient digital filters are fit to bridge admittance measurements of an acoustic instrument and used to create equalization filters as well as damping correction filters. The damping correction filters are designed to work in real time: they are triggered by onset and pitch detection of the signal measured through an under-saddle pickup to determine the intensity of the damping. A test case is presented in which an electric guitar is processed to model a measured acoustic guitar.

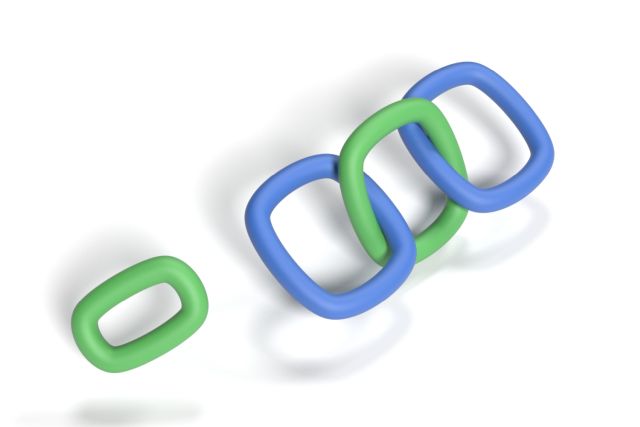

Fast Linking Numbers for Topology Verification of Loopy Structures

Ante Qu | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2021)

It is increasingly common to model, simulate, and process complex materials based on loopy structures, such as in yarn-level cloth garments, which possess topological constraints between inter-looping curves. While the input model may satisfy specific topological linkages between pairs of closed loops, subsequent processing may violate those topological conditions. In this paper, we explore a family of methods for efficiently computing and verifying linking numbers between closed curves, and apply these to applications in geometry processing, animation, and simulation, so as to verify that topological invariants are preserved during and after processing of the input models. Our method has three stages: (1) we identify potentially interacting loop-loop pairs, then (2) carefully discretize each loop's spline curves into line segments so as to enable (3) efficient linking number evaluation using accelerated kernels based on either counting projected segment-segment crossings or evaluating the Gauss linking integral using direct or fast summation methods (Barnes-Hut or fast multipole methods). We evaluate CPU and GPU implementations of these methods on a suite of test problems, including yarn-level cloth and chainmail, that involve significant processing: physics-based relaxation and animation, user-modeled deformations, curve compression and reparameterization. We show that topology errors can be efficiently identified to enable more robust processing of loopy structures.
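
The crossing-counting variant of stage (3) can be sketched directly: project both closed polylines onto the xy-plane, find projected segment crossings, and add ±1 per crossing according to which curve passes over and the orientation of the two segment directions; half the signed total is the linking number. This unaccelerated O(nm) sketch assumes generic position, fixes one sign convention (the overall sign may differ from a Gauss-integral convention), uses hypothetical names, and omits stages (1) and (2) as well as the Barnes-Hut and fast multipole variants.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def _cross2(ax: float, ay: float, bx: float, by: float) -> float:
    return ax * by - ay * bx


def linking_number(loop_a: Sequence[Point], loop_b: Sequence[Point]) -> int:
    """Linking number of two closed polylines by counting xy-projected crossings.

    Assumes generic position: no parallel projected segments and no crossings
    exactly at vertices or at equal heights.
    """
    total = 0
    na, nb = len(loop_a), len(loop_b)
    for i in range(na):
        p, p_next = loop_a[i], loop_a[(i + 1) % na]
        rx, ry, rz = (p_next[k] - p[k] for k in range(3))
        for j in range(nb):
            q, q_next = loop_b[j], loop_b[(j + 1) % nb]
            sx, sy, sz = (q_next[k] - q[k] for k in range(3))
            denom = _cross2(rx, ry, sx, sy)
            if denom == 0.0:
                continue  # parallel in projection: excluded by generic position
            qpx, qpy = q[0] - p[0], q[1] - p[1]
            u = _cross2(qpx, qpy, sx, sy) / denom  # parameter along segment of A
            v = _cross2(qpx, qpy, rx, ry) / denom  # parameter along segment of B
            if 0.0 <= u < 1.0 and 0.0 <= v < 1.0:
                sign = 1 if denom > 0.0 else -1  # orientation of (dir A, dir B)
                a_over = p[2] + u * rz > q[2] + v * sz
                # Over-strand first: A over B -> sign(dA x dB); B over A -> sign(dB x dA)
                total += sign if a_over else -sign
    return total // 2  # each crossing counted once; Lk is half the signed sum
```

For example, a square loop in the z = 0 plane and a tilted loop that pierces it once give a linking number of magnitude 1, while lifting the second loop entirely above the first gives 0 even though their projections still cross twice.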

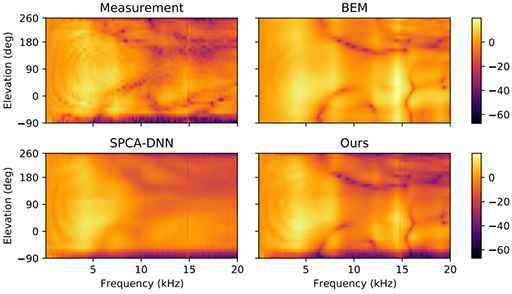

Personalized HRTF modeling using DNN-augmented BEM

Mengfan Zhang | Jui-Hsien Wang | Doug L. James

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2021

Accurate modeling of personalized head-related transfer functions (HRTFs) is difficult but critical for applications requiring spatial audio. However, this remains challenging as experimental measurements require specialized equipment, numerical simulations require accurate head geometries and robust solvers, and data-driven methods are hungry for data. In this paper, we propose a new deep learning method that combines measurements and numerical simulations to take the best of three worlds. By learning the residual difference and establishing a high quality spatial basis, our method consistently achieves 2 dB to 2.5 dB lower spectral distortion (SD) compared to the state-of-the-art methods.

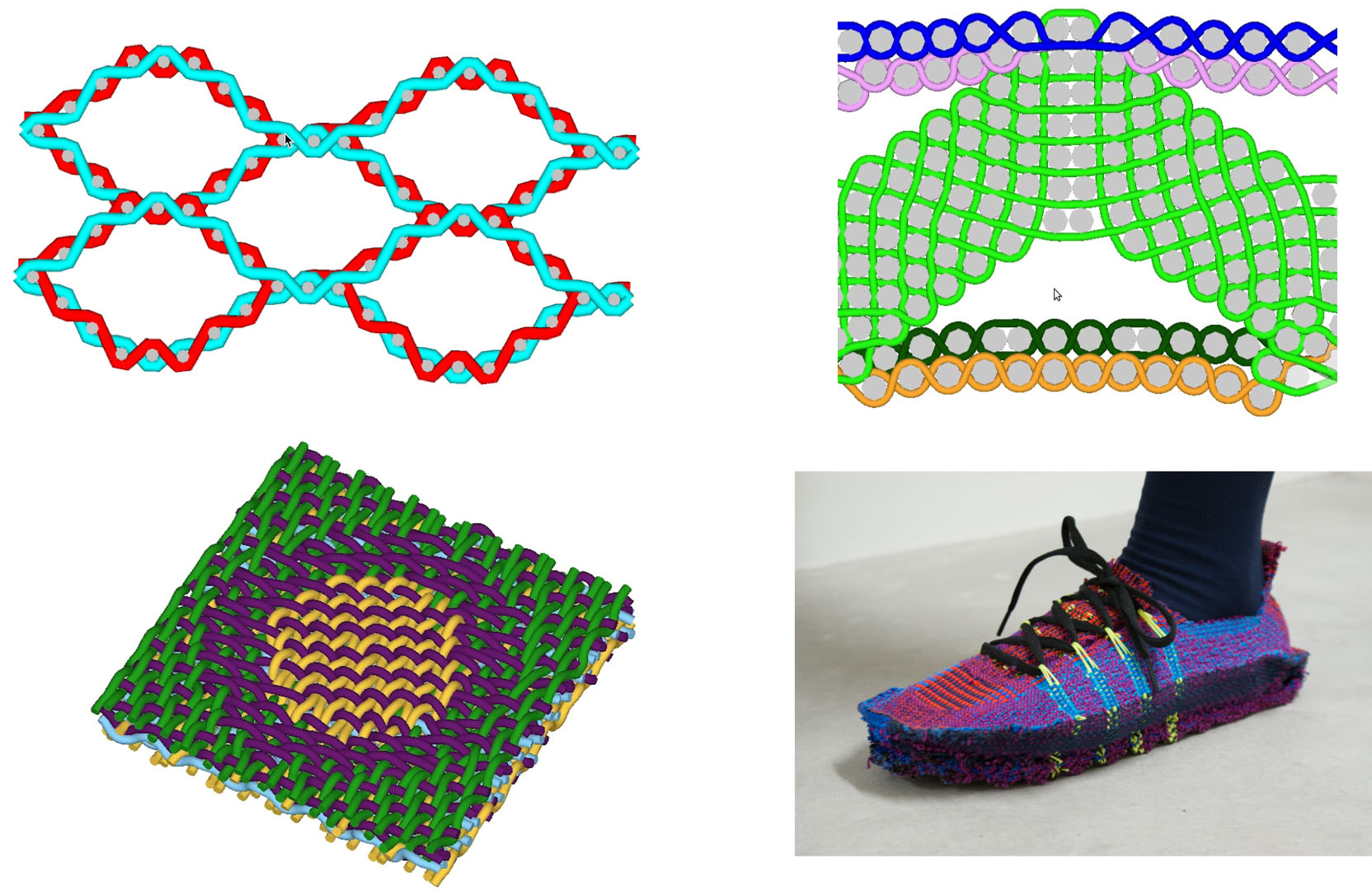

Weavecraft: An interactive design and simulation tool for 3D weaving

Rundong Wu | Joy Xiaoji Zhang | Jonathan Leaf | Xinru Hua | Ante Qu | Claire Harvey | Emily Holtzman | Joy Ko | Brooks Hagan | Doug L. James | François Guimbretière | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH Asia 2020)

3D weaving is an emerging technology for manufacturing multilayer woven textiles. In this work, we present Weavecraft: an interactive, simulation-based design tool for 3D weaving. Unlike existing textile software that uses 2D representations for design patterns, we propose a novel weave block representation that helps the user to understand 3D woven structures and to create complex multi-layered patterns. With Weavecraft, users can create blocks either from scratch or by loading traditional weaves, compose the blocks into large structures, and edit the pattern at various scales. Furthermore, users can verify the design with a physically based simulator, which predicts and visualizes the geometric structure of the woven material and reveals potential defects at an interactive rate. We demonstrate a range of results created with our tool, from simple two-layer cloth and well-known 3D structures to a more sophisticated design of a 3D woven shoe, and we evaluate the effectiveness of our system via a formative user study.

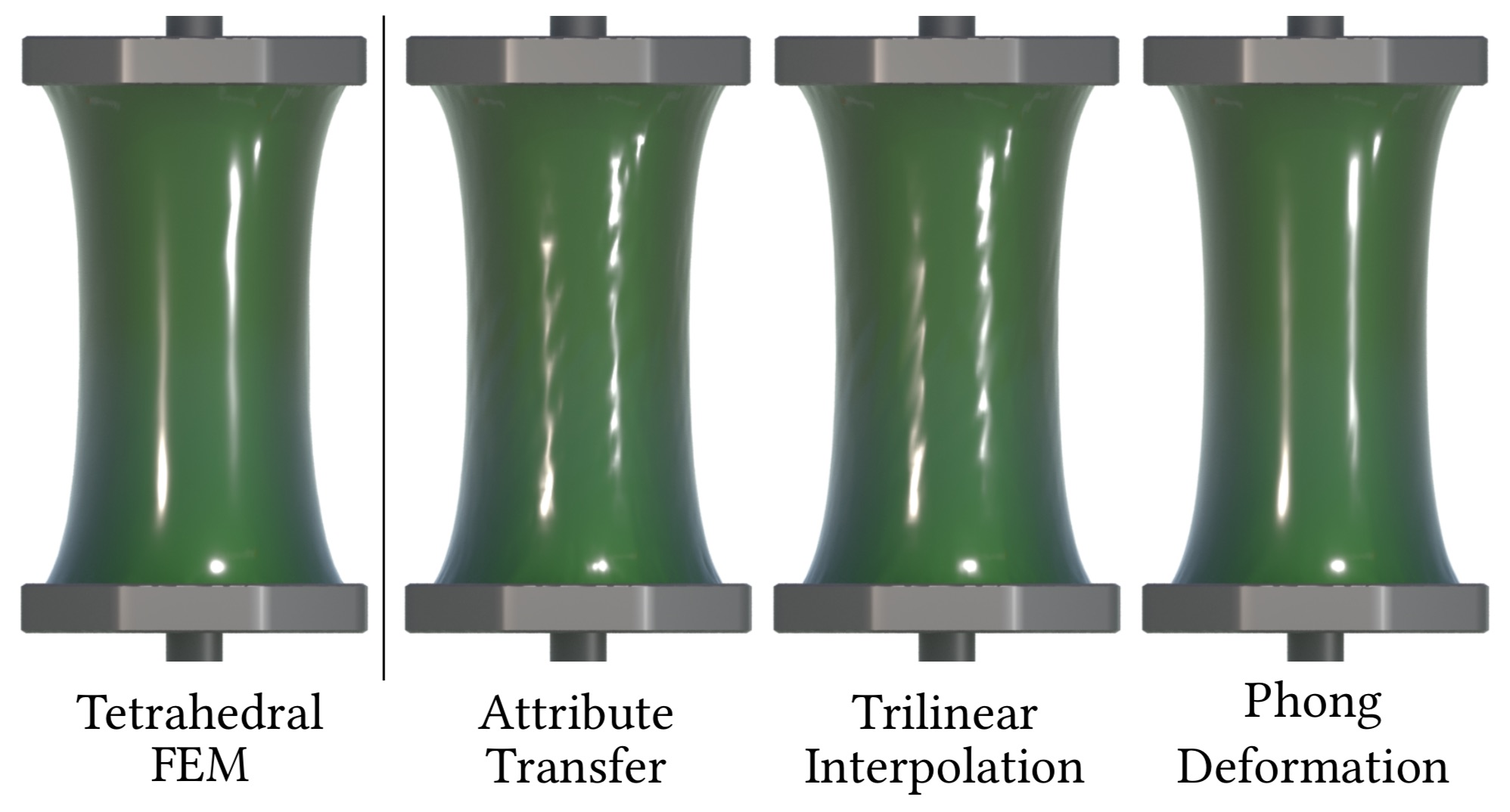

Phong Deformation: A better C⁰ interpolant for embedded deformation

Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2020)

Physics-based simulations of deforming tetrahedral meshes are widely used to animate detailed embedded geometry. Unfortunately, most practitioners still use linear interpolation (or other low-order schemes) on tetrahedra, which can produce undesirable visual artifacts, e.g., faceting and shading artifacts, that necessitate increasing the simulation's spatial resolution and, unfortunately, cost.

In this paper, we propose Phong Deformation, a simple, robust and practical vertex-based quadratic interpolation scheme that, while still only C⁰-continuous like linear interpolation, greatly reduces visual artifacts for embedded geometry. The method first averages element-based linear deformation models to vertices, then barycentrically interpolates the vertex models while also averaging with the traditional linear interpolation model. The method is a fast, robust, and easily implemented replacement for linear interpolation that produces visually better results for embedded deformation with irregular tetrahedral meshes.
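
The three steps described in the abstract (per-element affine maps, per-vertex averages, a barycentric blend averaged with linear interpolation) can be sketched as follows. Two choices are assumptions, not taken from the source: vertex maps use a uniform average over incident tetrahedra, and the final point is an equal-weight (1/2, 1/2) average of the vertex-model blend and linear interpolation; the paper may weight either step differently. Helper names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Mat = List[List[float]]
AffineMap = Tuple[Mat, List[float]]  # (F, t) with x = F X + t


def sub(a: Sequence[float], b: Sequence[float]) -> List[float]:
    return [a[k] - b[k] for k in range(3)]


def from_columns(c0: List[float], c1: List[float], c2: List[float]) -> Mat:
    return [[c0[r], c1[r], c2[r]] for r in range(3)]


def matmul(A: Mat, B: Mat) -> Mat:
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


def matvec(A: Mat, v: Sequence[float]) -> List[float]:
    return [sum(A[r][k] * v[k] for k in range(3)) for r in range(3)]


def inverse(A: Mat) -> Mat:
    (a, b, c), (d, e, f), (g, h, i) = A
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def edge_matrix(pts: Sequence[Sequence[float]], tet: Sequence[int]) -> Mat:
    i0, i1, i2, i3 = tet
    return from_columns(sub(pts[i1], pts[i0]), sub(pts[i2], pts[i0]), sub(pts[i3], pts[i0]))


def element_affine(rest, deformed, tet) -> AffineMap:
    """Affine map that the linearly interpolated tetrahedron applies to its points."""
    F = matmul(edge_matrix(deformed, tet), inverse(edge_matrix(rest, tet)))
    return F, sub(deformed[tet[0]], matvec(F, rest[tet[0]]))


def vertex_affine(rest, deformed, tets) -> Dict[int, AffineMap]:
    """Uniformly average the affine maps of the tets incident to each vertex (assumed)."""
    acc: Dict[int, Tuple[Mat, List[float], int]] = {}
    for tet in tets:
        F, t = element_affine(rest, deformed, tet)
        for v in tet:
            Fs, ts, n = acc.get(v, ([[0.0] * 3 for _ in range(3)], [0.0] * 3, 0))
            acc[v] = ([[Fs[r][c] + F[r][c] for c in range(3)] for r in range(3)],
                      [ts[k] + t[k] for k in range(3)], n + 1)
    return {v: ([[x / n for x in row] for row in Fs], [x / n for x in ts])
            for v, (Fs, ts, n) in acc.items()}


def phong_point(X, tet, rest, deformed, vmaps) -> List[float]:
    b123 = matvec(inverse(edge_matrix(rest, tet)), sub(X, rest[tet[0]]))
    bary = [1.0 - sum(b123)] + b123  # barycentric coordinates of X in tet
    linear = [sum(bary[j] * deformed[tet[j]][k] for j in range(4)) for k in range(3)]
    blended = [0.0, 0.0, 0.0]
    for j in range(4):  # barycentric blend of the per-vertex affine models
        F, t = vmaps[tet[j]]
        y = matvec(F, X)
        for k in range(3):
            blended[k] += bary[j] * (y[k] + t[k])
    return [0.5 * (blended[k] + linear[k]) for k in range(3)]  # assumed 1/2 weights
```

Two properties are easy to check: a globally affine deformation is reproduced exactly, and because every element map interpolates its own vertices, the result still passes through the deformed vertices and agrees across shared faces, so it stays C⁰ just like linear interpolation.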

On the Impact of Ground Sound

Ante Qu | Doug L. James

Digital Audio Effects (DAFx-19), 2019

Rigid-body impact sound synthesis methods often omit the ground sound. In this paper we analyze an idealized ground-sound model based on an elastodynamic halfspace, and use it to identify scenarios wherein ground sound is perceptually relevant versus when it is masked by the impacting object’s modal sound or transient acceleration noise. Our analytical model gives a smooth, closed-form expression for ground surface acceleration, which we can then use in the Rayleigh integral or in an “acoustic shader” for a finite-difference time-domain wave simulation. We find that when modal sound is inaudible, ground sound is audible in scenarios where a dense object impacts a soft ground and scenarios where the impact point has a low elevation angle to the listening point.

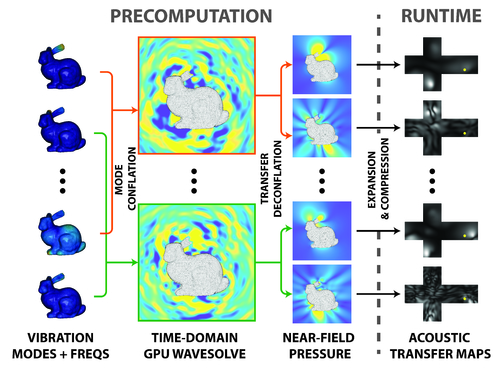

KleinPAT: Optimal Mode Conflation for Time-Domain Precomputation of Acoustic Transfer

Jui-Hsien Wang | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2019)

We propose a new modal sound synthesis method that rapidly estimates all acoustic transfer fields of a linear modal vibration model, and greatly reduces preprocessing costs. Instead of performing a separate frequency-domain Helmholtz radiation analysis for each mode, our method partitions vibration modes into chords using optimal mode conflation, then performs a single time-domain wave simulation for each chord. We then perform transfer deconflation on each chord’s time-domain radiation field using a specialized QR solver, and thereby extract the frequency-domain transfer functions of each mode. The precomputed transfer functions are represented for fast far-field evaluation, e.g., using multipole expansions. In this paper, we propose to use a single scalar-valued Far-field Acoustic Transfer (FFAT) cube map. We describe a GPU-accelerated vector wavesolver that achieves high-throughput acoustic transfer computation at accuracy sufficient for sound synthesis. Our implementation, KleinPAT, can achieve hundred- to thousand-fold speedups compared to existing Helmholtz-based transfer solvers, thereby enabling large-scale generation of modal sound models for audio-visual applications.
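
The deconflation idea can be illustrated with a small least-squares problem: if a chord's time-domain signal at one point is a sum of sinusoids at known modal frequencies, each mode's complex amplitude at that point (its transfer value there) can be recovered by fitting a cosine/sine pair per mode. This sketch solves the normal equations by Gaussian elimination rather than the specialized QR solver the abstract mentions, ignores start-up transients, and uses hypothetical names.

```python
import math
from typing import List, Sequence


def solve_linear(M: List[List[float]], rhs: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    n = len(rhs)
    A = [list(M[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= factor * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (A[r][n] - sum(A[r][c] * x[c] for c in range(r + 1, n))) / A[r][r]
    return x


def deconflate(samples: Sequence[float], times: Sequence[float],
               omegas: Sequence[float]) -> List[complex]:
    """Least-squares fit of p(t) = sum_k a_k cos(w_k t) + b_k sin(w_k t).

    Returns one complex amplitude c_k = a_k - i b_k per mode, so that
    mode k contributes Re(c_k * exp(i w_k t)) to the signal.
    """
    rows = [[f(w * t) for w in omegas for f in (math.cos, math.sin)] for t in times]
    m = 2 * len(omegas)
    AtA = [[sum(row[i] * row[j] for row in rows) for j in range(m)] for i in range(m)]
    Atp = [sum(row[i] * p for row, p in zip(rows, samples)) for i in range(m)]
    coeffs = solve_linear(AtA, Atp)
    return [complex(coeffs[2 * k], -coeffs[2 * k + 1]) for k in range(len(omegas))]
```

The fit is well conditioned only when the conflated frequencies are well separated relative to the time window, which is why grouping modes into chords matters; forming normal equations also squares the condition number, one reason a QR-based solve is preferable in practice.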

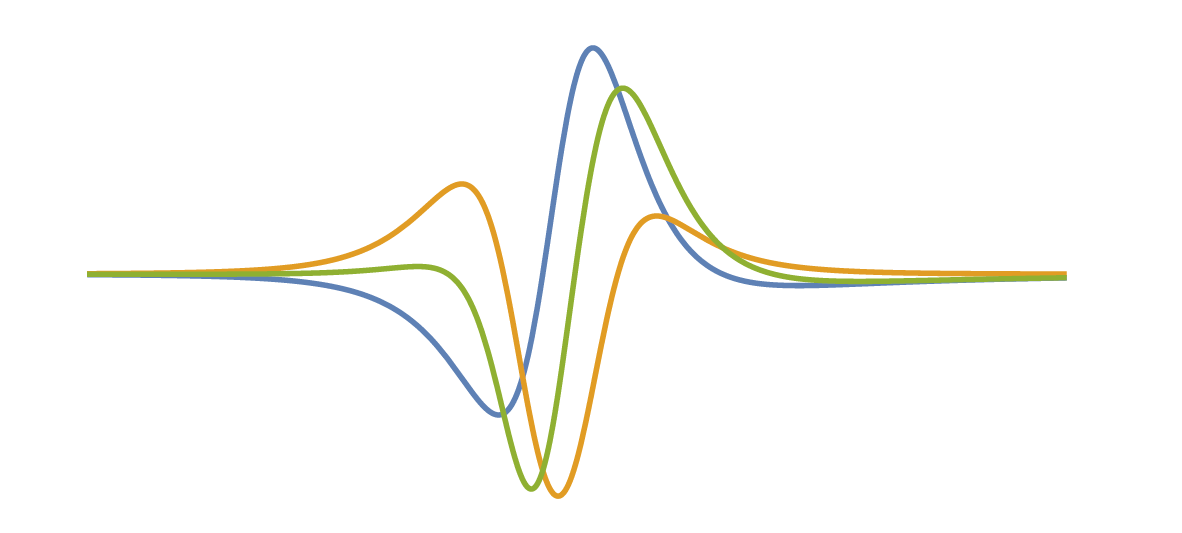

Sharp Kelvinlets: Elastic Deformations with Cusps and Localized Falloffs

Fernando de Goes | Doug L. James

DigiPro 2019

In this work, we present an extension of the regularized Kelvinlet technique suited to non-smooth, cusp-like edits. Our approach is based on a novel multi-scale convolution scheme that layers Kelvinlet deformations into a finite but spiky solution, thus offering physically based volume sculpting with sharp falloff profiles. We also show that the Laplacian operator provides a simple and effective way to achieve elastic displacements with fast far-field decay, thereby avoiding the need for multi-scale extrapolation. Finally, we combine the multi-scale convolution and Laplacian machinery to produce Sharp Kelvinlets, a new family of analytic fundamental solutions of linear elasticity with control over both the locality and the spikiness of the brush profile. Closed-form expressions and a reference implementation are also provided.
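
The claim that the Laplacian yields fast far-field decay can be checked on the scalar profile 1/r_ε = (r² + ε²)^(−1/2) that appears in regularized Kelvinlets: in 3D its Laplacian is −3ε²/r_ε⁵, which falls off like r⁻⁵ instead of r⁻¹. The sketch compares a finite-difference Laplacian against that closed form. It illustrates only this scalar building block, not the Sharp Kelvinlet brush itself; function names and sample values are illustrative.

```python
import math


def profile(x: float, y: float, z: float, eps: float) -> float:
    """Scalar regularized profile 1 / r_eps, with r_eps = sqrt(r^2 + eps^2)."""
    return 1.0 / math.sqrt(x * x + y * y + z * z + eps * eps)


def laplacian_exact(r: float, eps: float) -> float:
    """Closed-form 3D Laplacian of 1 / r_eps: -3 eps^2 / r_eps^5."""
    return -3.0 * eps * eps / (r * r + eps * eps) ** 2.5


def laplacian_fd(x: float, y: float, z: float, eps: float, h: float = 1e-3) -> float:
    """Second-order, 7-point finite-difference Laplacian of the profile."""
    c = profile(x, y, z, eps)
    total = (profile(x + h, y, z, eps) + profile(x - h, y, z, eps)
             + profile(x, y + h, z, eps) + profile(x, y - h, z, eps)
             + profile(x, y, z + h, eps) + profile(x, y, z - h, eps))
    return (total - 6.0 * c) / (h * h)
```

At r = 10ε the profile has only dropped to about 10% of its peak, while its Laplacian has dropped to roughly 10⁻⁵ of its peak, which is the kind of locality a sculpting brush wants.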

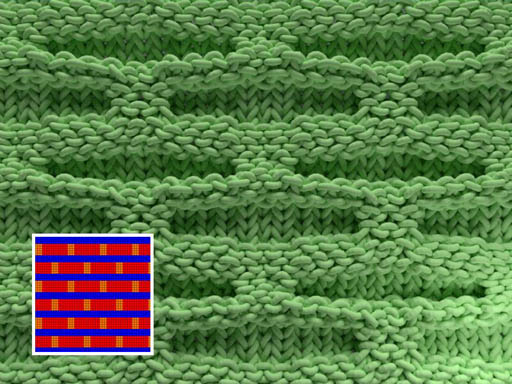

Interactive Design of Periodic Yarn-Level Cloth Patterns

Jonathan Leaf | Rundong Wu | Eston Schweickart | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH Asia 2018)

We describe an interactive design tool for authoring, simulating, and adjusting yarn-level patterns for knitted and woven cloth. To achieve interactive performance for notoriously slow yarn-level simulations, we propose two acceleration schemes: (a) yarn-level periodic boundary conditions that enable the restricted simulation of only small periodic patches, thereby exploiting the spatial repetition of many cloth patterns in cardinal directions, and (b) a highly parallel GPU solver for efficient yarn-level simulation of the small patch. Our system supports interactive pattern editing and simulation, and runtime modification of parameters. To adjust the amount of material used (yarn take-up) we support on-the-fly modification of (a) local yarn rest-length adjustments for pattern-specific edits, e.g., to tighten slip stitches, and (b) global yarn length by way of a novel yarn-radius similarity transformation. We demonstrate the tool's ability to support interactive modeling, by novice users, of a wide variety of yarn-level knit and woven patterns. Finally, to validate our approach, we compare dozens of generated patterns against reference images of actual woven or knitted cloth samples, and we release this corpus of digital patterns and simulated models as a public dataset to support future comparisons.
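
Periodic boundary conditions of this kind rest on two small operations: wrapping positions into the patch, and measuring displacements between yarn points via the nearest periodic image so that forces act across the patch seams. The minimal sketch below assumes an axis-aligned patch that is periodic in x and y but not in z (the through-thickness direction); names are hypothetical, and the paper's yarn-level treatment (such as joining yarn ends to their periodic copies) is not shown.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def wrap(p: Sequence[float], cell: Tuple[float, float]) -> Vec3:
    """Map a point into the periodic patch [0, Lx) x [0, Ly); z is not periodic."""
    return (p[0] % cell[0], p[1] % cell[1], p[2])


def min_image_delta(a: Sequence[float], b: Sequence[float],
                    cell: Tuple[float, float]) -> Vec3:
    """Displacement from a to the nearest periodic image of b."""
    d = [b[k] - a[k] for k in range(3)]
    for k in range(2):  # only x and y are periodic
        d[k] -= cell[k] * round(d[k] / cell[k])
    return (d[0], d[1], d[2])
```

Two points near opposite edges of the patch are therefore treated as close neighbors, which is what lets a small patch stand in for an infinitely repeating fabric.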

Toward Wave-based Sound Synthesis for Computer Animation

Jui-Hsien Wang | Ante Qu | Timothy R. Langlois | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2018)

We explore an integrated approach to sound generation that supports a wide variety of physics-based simulation models and computer-animated phenomena. Targeting high-quality offline sound synthesis, we seek to resolve animation-driven sound radiation with near-field scattering and diffraction effects. The core of our approach is a sharp-interface finite-difference time-domain (FDTD) wavesolver, with a series of supporting algorithms to handle rapidly deforming and vibrating embedded interfaces arising in physics-based animation sound. Once the solver rasterizes these interfaces, it must evaluate acceleration boundary conditions (BCs) that involve model and phenomena-specific computations. We introduce acoustic shaders as a mechanism to abstract away these complexities, and describe a variety of implementations for computer animation: near-rigid objects with ringing and acceleration noise, deformable (finite element) models such as thin shells, bubble-based water, and virtual characters. Since time-domain wave synthesis is expensive, we only simulate pressure waves in a small region about each sound source, then estimate a far-field pressure signal. To further improve scalability beyond multi-threading, we propose a fully time-parallel sound synthesis method that is demonstrated on commodity cloud computing resources. In addition to presenting results for multiple animation phenomena (water, rigid, shells, kinematic deformers, etc.) we also propose 3D automatic dialogue replacement (3DADR) for virtual characters so that pre-recorded dialogue can include character movement, and near-field shadowing and scattering sound effects.

Dynamic Kelvinlets: Secondary Motions based on Fundamental Solutions of Elastodynamics

Fernando de Goes | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2018)

We introduce Dynamic Kelvinlets, a new analytical technique for real-time physically based animation of virtual elastic materials. Our formulation is based on the dynamic response to time-varying force distributions applied to an infinite elastic medium. The resulting displacements provide the plausibility of volumetric elasticity, the dynamics of compressive and shear waves, and the interactivity of closed-form expressions. Our approach builds upon the work of de Goes and James [2017] by presenting an extension of the regularized Kelvinlet solutions from elastostatics to the elastodynamic regime. To finely control our elastic deformations, we also describe the construction of compound solutions that resolve pointwise and keyframe constraints. We demonstrate the versatility and efficiency of our method with a series of examples in a production-grade implementation.

Animating Elastic Rods with Sound

Eston Schweickart | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH 2017)

Sound generation methods, such as linear modal synthesis, can sonify a wide range of physics-based animation of solid objects, resolving vibrations and sound radiation from various structures. However, elastic rods are an important computer animation primitive for which prior sound synthesis methods, such as modal synthesis, are ill-suited for several reasons: large displacements, nonlinear vibrations, dispersion effects, and the geometrically singular nature of rods.

In this paper, we present physically based methods for simultaneous generation of animation and sound for deformable rods. We draw on Kirchhoff theory to simplify the representation of rod dynamics and introduce a generalized dipole model to calculate the spatially varying acoustic radiation. In doing so, we drastically decrease the amount of precomputation required (in some cases eliminating it completely), while being able to resolve sound radiation for arbitrary body deformations encountered in computer animation. We present several examples, including challenging scenes involving thousands of highly coupled frictional contacts.

Bounce Maps: An Improved Restitution Model for Real-Time Rigid-Body Impact

Jui-Hsien Wang | Rajsekhar Setaluri | Dinesh K. Pai | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2017)

We present a novel method to enrich standard rigid-body impact models with a spatially varying coefficient of restitution map, or Bounce Map. Even state-of-the-art methods in computer graphics assume that for a single rigid body, post- and pre-impact dynamics are related by a single, global constant, namely the coefficient of restitution. We first demonstrate that this assumption is highly inaccurate, even for simple objects. We then present a technique to efficiently and automatically generate a function that maps locations on the object’s surface, along with impact normals, to a scalar coefficient of restitution value. Furthermore, we propose a method for two-body restitution analysis and, based on numerical experiments, estimate a practical model for combining one-body Bounce Map values to approximate the two-body coefficient of restitution. We show that our method not only improves accuracy but also enables visually richer rigid-body simulations.
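To make the idea concrete, here is a minimal 2D sketch, under our own assumptions, of how a per-contact restitution value could feed a standard frictionless impulse response. The sampled `bounce_map`, its nearest-sample lookup, and the distance metric are hypothetical stand-ins; the paper's map construction and its two-body combination model are not reproduced here.

```python
def cross2(a, b):
    """z-component of the 2D cross product a x b."""
    return a[0] * b[1] - a[1] * b[0]


def lookup_restitution(bounce_map, point, normal):
    """Nearest-sample lookup in a hypothetical sampled Bounce Map.

    bounce_map: list of (surface_point, impact_normal, e) samples in body space.
    The position-plus-normal distance metric is an illustrative assumption.
    """
    def dist2(sample):
        p, n, _ = sample
        return ((p[0] - point[0]) ** 2 + (p[1] - point[1]) ** 2
                + (n[0] - normal[0]) ** 2 + (n[1] - normal[1]) ** 2)
    return min(bounce_map, key=dist2)[2]


def resolve_impact(m, inertia, v, omega, r, n, e):
    """Frictionless impulse response of a 2D rigid body hitting static ground.

    r: contact point relative to the center of mass; n: unit normal pointing
    from the ground into the body. Returns the post-impact (v, omega).
    """
    # contact-point velocity v + omega x r, with omega a scalar about z
    vc = (v[0] - omega * r[1], v[1] + omega * r[0])
    vn = vc[0] * n[0] + vc[1] * n[1]
    if vn >= 0.0:                                # already separating
        return v, omega
    rn = cross2(r, n)
    inv_eff_mass = 1.0 / m + rn * rn / inertia  # inverse effective mass along n
    j = -(1.0 + e) * vn / inv_eff_mass          # impulse magnitude
    v_new = (v[0] + j * n[0] / m, v[1] + j * n[1] / m)
    omega_new = omega + rn * j / inertia
    return v_new, omega_new
```

Whatever value the lookup returns, the impulse is chosen so that the post-impact normal velocity of the contact point is exactly `-e` times the pre-impact one, so a spatially varying `e` changes the bounce only through this single scalar.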

Regularized Kelvinlets: Sculpting Brushes based on Fundamental Solutions of Elasticity

Fernando de Goes | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2017)

We introduce a new technique for real-time physically based volume sculpting of virtual elastic materials. Our formulation is based on the elastic response to localized force distributions associated with common modeling primitives such as grab, scale, twist, and pinch. The resulting brush-like displacements correspond to the regularization of fundamental solutions of linear elasticity in infinite 2D and 3D media. These deformations thus provide the realism and plausibility of volumetric elasticity, and the interactivity of closed-form analytical solutions. To finely control our elastic deformations, we also construct compound brushes with arbitrarily fast spatial decay. Furthermore, pointwise constraints can be imposed on the displacement field and its derivatives via a single linear solve. We demonstrate the versatility and efficiency of our method with multiple examples of volume sculpting and image editing.
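As an illustration, the following sketch evaluates a "grab" brush using the regularized Kelvinlet closed form as we understand it from de Goes and James [2017], with constants a = 1/(4 pi mu) and b = a/(4(1 - nu)) and regularized distance r_e = sqrt(|r|^2 + eps^2); treat these constants as an assumption to verify against the paper. The function name and default parameter values are ours.

```python
import math


def kelvinlet_grab(r, target_disp, eps, mu=1.0, nu=0.45):
    """Displacement at offset r (3-vector) from the center of a grab brush.

    Assumed regularized Kelvinlet:
        u(r) = [ (a-b)/r_e I + b/r_e^3 r r^T + (a/2) eps^2/r_e^3 I ] f
    with f scaled so that u(0) equals target_disp.
    """
    a = 1.0 / (4.0 * math.pi * mu)
    b = a / (4.0 * (1.0 - nu))
    # At r = 0 the kernel is (3a - 2b) / (2 eps) * I, so this scale gives u(0) = target.
    scale = 2.0 * eps / (3.0 * a - 2.0 * b)
    f = [scale * t for t in target_disp]
    re = math.sqrt(sum(x * x for x in r) + eps * eps)
    re3 = re ** 3
    r_dot_f = sum(ri * fi for ri, fi in zip(r, f))
    iso = (a - b) / re + 0.5 * a * eps * eps / re3   # isotropic part of the kernel
    return [iso * fi + (b / re3) * r_dot_f * ri for fi, ri in zip(f, r)]
```

Because the brush is normalized so that the center moves by exactly the requested displacement, the shear modulus cancels out and only Poisson's ratio `nu` and the radius `eps` shape the falloff.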

Toward Animating Water with Complex Acoustic Bubbles

Timothy R. Langlois | Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2016)

This paper explores methods for synthesizing physics-based bubble sounds directly from two-phase incompressible simulations of bubbly water flows. By tracking fluid-air interface geometry, we identify bubble geometry and topological changes due to splitting, merging and popping. A novel capacitance-based method is proposed that can estimate volume-mode bubble frequency changes due to bubble size, shape, and proximity to solid and air interfaces. Our acoustic transfer model is able to capture cavity resonance effects due to near-field geometry, and we also propose a fast precomputed bubble-plane model for cheap transfer evaluation. In addition, we consider a bubble forcing model that better accounts for bubble entrainment, splitting, and merging events, as well as a Helmholtz resonator model for bubble popping sounds. To overcome frequency bandwidth limitations associated with coarse resolution fluid grids, we simulate micro-bubbles in the audio domain using a power-law model of bubble populations. Finally, we present several detailed examples of audiovisual water simulations and physical experiments to validate our frequency model.

Real-time sound synthesis for paper material based on geometric analysis

C. Schreck | D. Rohmer | D. James | S. Hahmann | M. Cani

Eurographics/ACM SIGGRAPH Symposium on Computer Animation (2016)

In this article, we present the first method to generate plausible sounds while animating crumpling virtual paper in real time. Our method handles shape-dependent friction and crumpling sounds which typically occur when manipulating or creasing paper by hand. Based on a run-time geometric analysis of the deforming surface, we identify resonating regions characterizing the sound being produced. Coupled to a fast analysis of the surrounding elements, the sound can be efficiently spatialized to take into account nearby wall or table reflectors. Finally, the sound is synthesized in real time using a pre-recorded database of frequency- and time-domain sound sources. Our synthesized sounds are evaluated by comparing them to recordings for a specific set of paper deformations.

Inverse-Foley Animation: Synchronizing rigid-body motions to sound

Timothy R. Langlois | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2014)

In this paper, we introduce Inverse-Foley Animation, a technique for optimizing rigid-body animations so that contact events are synchronized with input sound events. A precomputed database of randomly sampled rigid-body contact events is used to build a contact-event graph, which can be searched to determine a plausible sequence of contact events synchronized with the input sound's events. To more easily find motions with matching contact times, we allow transitions between simulated contact events using a motion blending formulation based on modified contact impulses. We fine-tune synchronization by slightly retiming ballistic motions. Given a sound, our system can synthesize synchronized motions using graphs built with hundreds of thousands of precomputed motions, and millions of contact events. Our system is easy to use, and has been used to plan motions for hundreds of sounds, and dozens of rigid-body models.

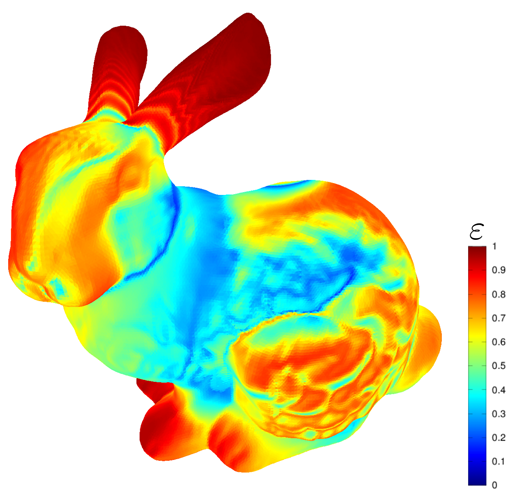

Eigenmode Compression for Modal Sound Models

Timothy R. Langlois | Steven S. An | Kelvin K. Jin | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2014)

We propose and evaluate a method for significantly compressing modal sound models, thereby making them far more practical for audiovisual applications. The dense eigenmode matrix, needed to compute the sound model's response to contact forces, can consume tens to thousands of megabytes depending on mesh resolution and mode count. Our eigenmode compression pipeline is based on nonlinear optimization of Moving Least Squares (MLS) approximations. Enhanced compression is achieved by exploiting symmetry both within and between eigenmodes, and by adaptively assigning per-mode error levels based on human perception of the far-field pressure amplitudes. Our method provides smooth eigenmode approximations, and efficient random access. We demonstrate that, in many cases, hundredfold compression ratios can be achieved without audible degradation of the rendered sound.
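The dense eigenmode matrix is what maps a contact force to modal excitations. A minimal sketch of that uncompressed baseline, assuming mass-normalized modes and Rayleigh damping (the damping model, function name, and parameter values are our assumptions), synthesizes the response to a single impulse as a sum of damped sinusoids; a compressed model would replace the exact `modes[i][dof]` lookup with an approximation of each mode.

```python
import math


def modal_impulse_response(modes, omegas, dof, impulse, alpha, beta,
                           sample_rate=44100, duration=0.5):
    """Impulse response of a linear modal model with Rayleigh damping.

    modes[i][dof]: entry of mass-normalized mode i at degree of freedom `dof`.
    omegas[i]: undamped angular frequency of mode i (rad/s).
    Each mode obeys q'' + (alpha + beta*w^2) q' + w^2 q = u_i^T f, so an impulse
    J at `dof` gives q_i(t) = (u_i[dof] J / wd) exp(-xi w t) sin(wd t).
    The output simply sums modal amplitudes (no acoustic transfer weighting).
    """
    n = int(round(sample_rate * duration))
    out = [0.0] * n
    for mode, w in zip(modes, omegas):
        xi = 0.5 * (alpha / w + beta * w)      # Rayleigh damping ratio
        if xi >= 1.0:                          # skip over-damped modes
            continue
        wd = w * math.sqrt(1.0 - xi * xi)      # damped angular frequency
        gain = mode[dof] * impulse / wd
        for k in range(n):
            t = k / sample_rate
            out[k] += gain * math.exp(-xi * w * t) * math.sin(wd * t)
    return out
```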

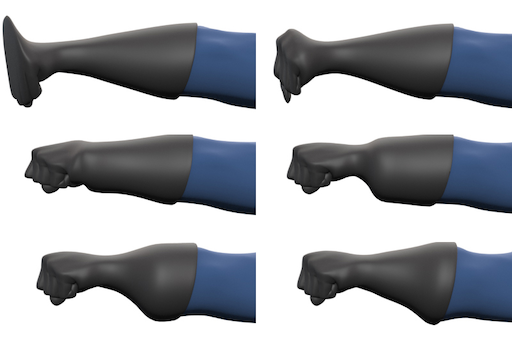

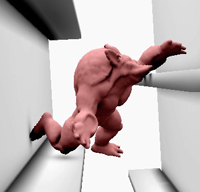

Physics-Based Character Skinning Using Multidomain Subspace Deformations

Theodore Kim | Doug L. James

IEEE Transactions on Visualization and Computer Graphics, 2012; 18(8): 1228-1240

In this extended version of our Symposium on Computer Animation paper, we describe a domain-decomposition method to simulate articulated deformable characters entirely within a subspace framework. We have added a parallelization and eigendecomposition performance analysis, and several additional examples to the original symposium version. The method supports quasistatic and dynamic deformations, nonlinear kinematics and materials, and can achieve interactive time-stepping rates. To avoid artificial rigidity, or “locking,” associated with coupling low-rank domain models together with hard constraints, we employ penalty-based coupling forces. The multidomain subspace integrator can simulate deformations efficiently, and exploits efficient subspace-only evaluation of constraint forces between rotated domains using a novel Fast Sandwich Transform (FST). Examples are presented for articulated characters with quasistatic and dynamic deformations, and interactive performance with hundreds of fully coupled modes. Using our method, we have observed speedups of between 3 and 4 orders of magnitude over full-rank, unreduced simulations.

Faster Acceleration Noise for Multibody Animations using Precomputed Soundbanks

Jeffrey N. Chadwick | Changxi Zheng | Doug L. James

ACM/Eurographics Symposium on Computer Animation, July 2012

We introduce an efficient method for synthesizing rigid-body acceleration noise for complex multibody scenes. Existing acceleration noise synthesis methods for animation require object-specific precomputation, which is prohibitively expensive for scenes involving rigid-body fracture or other sources of small, procedurally generated debris. We avoid precomputation by introducing a proxy-based method for acceleration noise synthesis in which precomputed acceleration noise data is only generated for a small set of ellipsoidal proxies and stored in a proxy soundbank. Our proxy model is shown to be effective at approximating acceleration noise from scenes with lots of small debris (e.g., pieces produced by rigid-body fracture). This approach is not suitable for synthesizing acceleration noise from larger objects with complicated non-convex geometry; however, it has been shown in previous work that acceleration noise from objects such as these tends to be largely masked by modal vibration sound. We manage the cost of our proxy soundbank with a new wavelet-based compression scheme for acceleration noise and use our model to significantly improve sound synthesis results for several multibody animations.

Energy-based Self-Collision Culling for Arbitrary Mesh Deformations

Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2012)

In this paper, we accelerate self-collision detection (SCD) for a deforming triangle mesh by exploiting the idea that a mesh cannot self-collide unless it deforms enough. Unlike prior work on subspace self-collision culling, which is restricted to low-rank deformation subspaces, our energy-based approach supports arbitrary mesh deformations while still being fast. Given a bounding volume hierarchy (BVH) for a triangle mesh, we precompute Energy-based Self-Collision Culling (ESCC) certificates on bounding-volume-related sub-meshes, which indicate the amount of deformation energy required for each sub-mesh to self-collide. After updating energy values at runtime, many bounding-volume self-collision queries can be culled using the ESCC certificates. We propose an affine-frame Laplacian-based energy definition which sports a highly optimized certificate preprocess, and fast runtime energy evaluation. The latter is performed hierarchically to amortize Laplacian energy and affine-frame estimation computations. ESCC supports both discrete and continuous SCD with detailed and nonsmooth geometry. We observe significant culling on many examples, with SCD speed-ups up to 26x.

Precomputed Acceleration Noise for Improved Rigid-Body Sound

Jeffrey N. Chadwick | Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2012)

We introduce an efficient method for synthesizing acceleration noise, the sound produced when an object experiences abrupt rigid-body acceleration due to collisions or other contact events. We approach this in two main steps. First, we estimate continuous contact force profiles from rigid-body impulses using a simple model based on Hertz contact theory. Next, we compute solutions to the acoustic wave equation due to short acceleration pulses in each rigid-body degree of freedom. We introduce an efficient representation for these solutions, Precomputed Acceleration Noise, which allows us to accurately estimate sound due to arbitrary rigid-body accelerations. We find that the addition of acceleration noise significantly complements the standard modal sound algorithm, especially for small objects.
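One common way to turn a rigid-body impulse into a continuous force profile is to assume a half-sine pulse whose duration comes from the classical Hertz impact estimate for sphere-like contacts. The sketch below does exactly that; the half-sine shape, the 2.87 prefactor, the function names, and the sample rate are assumptions and may differ from the model used in the paper.

```python
import math


def hertz_contact_time(m_eff, r_eff, e_eff, v_impact):
    """Classical Hertz estimate of impact duration (seconds):
    tau = 2.87 * (m^2 / (R * E*^2 * v))^(1/5), with effective mass m,
    effective radius R, effective contact modulus E*, and impact speed v."""
    return 2.87 * (m_eff ** 2 / (r_eff * e_eff ** 2 * v_impact)) ** 0.2


def half_sine_force(impulse, tau, sample_rate=192000):
    """Sample a half-sine force pulse on [0, tau] whose integral equals `impulse`.

    The peak pi*J/(2*tau) follows from integral_0^tau sin(pi t / tau) dt = 2 tau / pi.
    Samples are taken at interval midpoints. Returns (samples, dt).
    """
    amp = math.pi * impulse / (2.0 * tau)
    n = max(1, int(round(tau * sample_rate)))
    dt = tau / n
    samples = [amp * math.sin(math.pi * (k + 0.5) * dt / tau) for k in range(n)]
    return samples, dt
```

For a 10 g steel-like ball of 5 mm radius striking at 1 m/s, the estimate gives a contact time on the order of tens of microseconds, which is why such pulses are sampled well above typical audio rates here.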

Motion-driven Concatenative Synthesis of Cloth Sounds

Steven S. An | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH 2012)

We present a practical data-driven method for automatically synthesizing plausible soundtracks for physics-based cloth animations running at graphics rates. Given a cloth animation, we analyze the deformations and use motion events to drive crumpling and friction sound models estimated from cloth measurements. We synthesize a low-quality sound signal, which is then used as a target signal for a concatenative sound synthesis (CSS) process. CSS selects a sequence of microsound units, very short segments, from a database of recorded cloth sounds, which best match the synthesized target sound in a low-dimensional feature-space after applying a hand-tuned warping function. The selected microsound units are concatenated together to produce the final cloth sound with minimal filtering. Our approach avoids expensive physics-based synthesis of cloth sound, instead relying on cloth recordings and our motion-driven CSS approach for realism. We demonstrate its effectiveness on a variety of cloth animations involving various materials and character motions, including first-person virtual clothing with binaural sound.
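The unit-selection step of CSS can be sketched as a nearest-neighbour search in feature space. In this minimal version, the feature vectors, the greedy per-frame choice, and the `warp` callable (a stand-in for the hand-tuned warping function) are all hypothetical, and the concatenation cost that practical CSS systems usually add is omitted.

```python
def select_units(target_frames, database, warp=None):
    """Greedy per-frame unit selection for concatenative synthesis.

    target_frames: feature vectors computed from the synthesized target signal.
    database: list of (unit_id, feature_vector) for recorded microsound units.
    warp: optional hypothetical callable applied to each target feature vector.
    Returns one chosen unit_id per target frame.
    """
    chosen = []
    for frame in target_frames:
        f = warp(frame) if warp is not None else frame
        best_id, best_d = None, float("inf")
        for unit_id, feat in database:
            d = sum((x - y) ** 2 for x, y in zip(f, feat))  # squared Euclidean
            if d < best_d:
                best_id, best_d = unit_id, d
        chosen.append(best_id)
    return chosen
```

The selected ids would then index recorded microsound units to be overlap-added in order; how the units are windowed and filtered is left out of this sketch.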

Stitch Meshes for Modeling Knitted Clothing with Yarn-level Detail

Cem Yuksel | Jonathan M. Kaldor | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH 2012)

Recent yarn-based simulation techniques permit realistic and efficient dynamic simulation of knitted clothing, but producing the required yarn-level models remains a challenge. The lack of practical modeling techniques significantly limits the diversity and complexity of knitted garments that can be simulated. We propose a new modeling technique that builds yarn-level models of complex knitted garments for virtual characters. We start with a polygonal model that represents the large-scale surface of the knitted cloth. Using this mesh as an input, our interactive modeling tool produces a finer mesh representing the layout of stitches in the garment, which we call the stitch mesh. By manipulating this mesh and assigning stitch types to its faces, the user can replicate a variety of complicated knitting patterns. The curve model representing the yarn is generated from the stitch mesh, then the final shape is computed by a yarn-level physical simulation that locally relaxes the yarn into realistic shape while preserving global shape of the garment and avoiding “yarn pull-through,” thereby producing valid yarn geometry suitable for dynamic simulation. Using our system, we can efficiently create yarn-level models of knitted clothing with a rich variety of patterns that would be completely impractical to model using traditional techniques. We show a variety of example knitting patterns and full-scale garments produced using our system.

Fabricating Articulated Characters from Skinned Meshes

Moritz Bächer | Bernd Bickel | Doug L. James | Hanspeter Pfister

ACM Transactions on Graphics (SIGGRAPH 2012)

Articulated deformable characters are widespread in computer animation. Unfortunately, we lack methods for their automatic fabrication using modern additive manufacturing (AM) technologies. We propose a method that takes a skinned mesh as input, then estimates a fabricatable single-material model that approximates the 3D kinematics of the corresponding virtual articulated character in a piecewise linear manner. We first extract a set of potential joint locations. From this set, together with optional, user-specified range constraints, we then estimate mechanical friction joints that satisfy inter-joint non-penetration and other fabrication constraints. To avoid brittle joint designs, we place joint centers on an approximate medial axis representation of the input geometry, and maximize each joint’s minimal cross-sectional area. We provide several demonstrations, manufactured as single, assembled pieces using 3D printers.

Animating Fire with Sound

Jeffrey N. Chadwick | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2011)

We propose a practical method for synthesizing plausible fire sounds that are synchronized with physically based fire animations. To enable synthesis of combustion sounds without incurring the cost of time-stepping fluid simulations at audio rates, we decompose our synthesis procedure into two components. First, a low-frequency flame sound is synthesized using a physically based combustion sound model driven with data from a visual flame simulation run at a relatively low temporal sampling rate. Second, we propose two bandwidth extension methods for synthesizing additional high-frequency flame sound content: (1) spectral bandwidth extension which synthesizes higher-frequency noise matching combustion sound spectra from theory and experiment; and (2) data-driven texture synthesis to synthesize high-frequency content based on input flame sound recordings. Various examples and comparisons are presented demonstrating plausible flame sounds, from small candle flames to large flame jets.

Toward High-Quality Modal Contact Sound

Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2011)

Contact sound models based on linear modal analysis are commonly used with rigid body dynamics. Unfortunately, treating vibrating objects as "rigid" during collision and contact processing fundamentally limits the range of sounds that can be computed, and contact solvers for rigid body animation can be ill-suited for modal contact sound synthesis, producing various sound artifacts. In this paper, we resolve modal vibrations in both collision and frictional contact processing stages, thereby enabling non-rigid sound phenomena such as micro-collisions, vibrational energy exchange, and chattering. We propose a frictional multibody contact formulation and modified Staggered Projections solver which is well-suited to sound rendering and avoids noise artifacts associated with spatial and temporal contact-force fluctuations which plague prior methods. To enable practical animation and sound synthesis of numerous bodies with many coupled modes, we propose a novel asynchronous integrator with mode-level adaptivity built into the frictional contact solver. Vibrational contact damping is modeled to approximate contact-dependent sound dissipation. Results are provided that demonstrate high-quality contact resolution with sound.

Physics-based Character Skinning using Multi-Domain Subspace Deformations

Theodore Kim | Doug L. James

ACM SIGGRAPH / Eurographics Symposium on Computer Animation, August 2011 (best paper award)

We propose a domain-decomposition method to simulate articulated deformable characters entirely within a subspace framework. The method supports quasistatic and dynamic deformations, nonlinear kinematics and materials, and can achieve interactive time-stepping rates. To avoid artificial rigidity, or "locking," associated with coupling low-rank domain models together with hard constraints, we employ penalty-based coupling forces. The multi-domain subspace integrator can simulate deformations efficiently, and exploits efficient subspace-only evaluation of constraint forces between rotated domains using the so-called Fast Sandwich Transform (FST). Examples are presented for articulated characters with quasistatic and dynamic deformations, and interactive performance with hundreds of fully coupled modes. Using our method, we have observed speedups of between three and four orders of magnitude over full-rank, unreduced simulations.

Rigid-Body Fracture Sound with Precomputed Soundbanks

Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2010)

We propose a physically based algorithm for synthesizing sounds synchronized with brittle fracture animations. Motivated by laboratory experiments, we approximate brittle fracture sounds using time-varying rigid-body sound models. We extend methods for fracturing rigid materials by proposing a fast quasistatic stress solver to resolve near-audio-rate fracture events, energy-based fracture pattern modeling and estimation of “crack”-related fracture impulses. Multipole radiation models provide scalable sound radiation for complex debris and level of detail control. To reduce sound-model generation costs for complex fracture debris, we propose Precomputed Rigid-Body Soundbanks comprised of precomputed ellipsoidal sound proxies. Examples and experiments are presented that demonstrate plausible and affordable brittle fracture sounds.

Subspace Self-Collision Culling

Jernej Barbic | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2010)

We show how to greatly accelerate self-collision detection (SCD) for reduced deformable models. Given a triangle mesh and a set of deformation modes, our method precomputes Subspace Self-Collision Culling (SSCC) certificates which, if satisfied, prove the absence of self-collisions for large parts of the model. At runtime, bounding volume hierarchies augmented with our certificates can aggressively cull overlap tests and reduce hierarchy updates. Our method supports both discrete and continuous SCD, can handle complex geometry, and makes no assumptions about geometric smoothness or normal bounds. It is particularly effective for simulations with modest subspace deformations, where it can often verify the absence of self-collisions in constant time. Our certificates enable low amortized costs, in time and across many objects in multi-body dynamics simulations. Finally, SSCC is effective enough to support self-collision tests at audio rates, which we demonstrate by producing the first sound simulations of clattering objects.

Efficient Yarn-based Cloth with Adaptive Contact Linearization

Jonathan Kaldor | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH 2010)

Yarn-based cloth simulation can improve visual quality but at high computational costs due to the reliance on numerous persistent yarn-yarn contacts to generate material behavior. Finding so many contacts in densely interlinked geometry is a pathological case for traditional collision detection, and the sheer number of contact interactions makes contact processing the simulation bottleneck. In this paper, we propose a method for approximating penalty-based contact forces in yarn-yarn collisions by computing the exact contact response at one time step, then using a rotated linear force model to approximate forces in nearby deformed configurations. Because contacts internal to the cloth exhibit good temporal coherence, sufficient accuracy can be obtained with infrequent updates to the approximation, which are done adaptively in space and time. Furthermore, by tracking contact models we reduce the time to detect new contacts. The end result is a 7- to 9-fold speedup in contact processing and a 4- to 5-fold overall speedup, enabling simulation of character-scale garments.

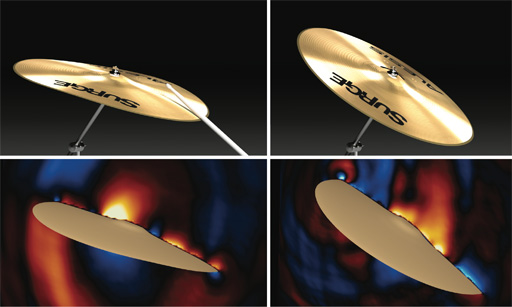

Harmonic Shells: A Practical Nonlinear Sound Model for Near-Rigid Thin Shells

Jeffrey Chadwick | Steven An | Doug L. James

ACM Transactions on Graphics (SIGGRAPH ASIA 2009)

We propose a procedural method for synthesizing realistic sounds due to nonlinear thin-shell vibrations. We use linear modal analysis to generate a small-deformation displacement basis, then couple the modes together using nonlinear thin-shell forces. To enable audio-rate time-stepping of mode amplitudes with mesh-independent cost, we propose a reduced-order dynamics model based on a thin-shell cubature scheme. Limitations such as mode locking and pitch glide are addressed. To support fast evaluation of mid-frequency mode-based sound radiation for detailed meshes, we propose far-field acoustic transfer maps (FFAT maps) which can be precomputed using state-of-the-art fast Helmholtz multipole methods. Familiar examples are presented including rumbling trash cans and plastic bottles, crashing cymbals, and noisy sheet metal objects, each with increased richness over linear modal sound models.

Skipping Steps in Deformable Simulation with Online Model Reduction

Theodore Kim | Doug L. James

ACM Transactions on Graphics (SIGGRAPH ASIA 2009)

Finite element simulations of nonlinear deformable models are computationally costly, routinely taking hours or days to compute the motion of detailed meshes. Dimensional model reduction can make simulations orders of magnitude faster, but is unsuitable for general deformable body simulations because it requires expensive precomputations, and it can suppress motion that lies outside the span of a pre-specified low-rank basis. We present an online model reduction method that does not have these limitations. In lieu of precomputation, we analyze the motion of the full model as the simulation progresses, incrementally building a reduced-order nonlinear model, and detecting when our reduced model is capable of performing the next timestep. For these subspace steps, full-model computation is “skipped” and replaced with a very fast (on the order of milliseconds) reduced order step. We present algorithms for both dynamic and quasistatic simulations, and a “throttle” parameter that allows a user to trade off between faster, approximate previews and slower, more conservative results. For detailed meshes undergoing low-rank motion, we have observed speedups of over an order of magnitude with our method.
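A generic version of the "is the reduced model good enough?" test can be sketched with an orthonormal basis grown by Gram-Schmidt from observed displacements. This is an illustration under our own assumptions, not the paper's criterion: the relative-residual test, the tolerance, and the function names are ours.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def residual(basis, x):
    """Component of x orthogonal to span(basis); basis vectors are orthonormal."""
    r = list(x)
    for u in basis:
        c = dot(u, r)          # modified Gram-Schmidt: project the running residual
        r = [ri - c * ui for ri, ui in zip(r, u)]
    return r


def can_take_subspace_step(basis, x, tol=1e-3):
    """True if displacement x is captured by the current basis within tol."""
    nx = math.sqrt(dot(x, x))
    if nx == 0.0:
        return True
    r = residual(basis, x)
    return math.sqrt(dot(r, r)) <= tol * nx


def grow_basis(basis, x, tol=1e-3):
    """Append the normalized new direction of x to the basis if it adds one."""
    r = residual(basis, x)
    nr = math.sqrt(dot(r, r))
    nx = math.sqrt(dot(x, x))
    if nx > 0.0 and nr > tol * nx:
        basis.append([ri / nr for ri in r])
    return basis
```

In a full simulator, a failed test would trigger a full-model step whose displacement is then fed to `grow_basis`, while a passing test would allow a cheap reduced step.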

Harmonic Fluids

Changxi Zheng | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2009)

Fluid sounds, such as splashing and pouring, are ubiquitous and familiar but we lack physically based algorithms to synthesize them in computer animation or interactive virtual environments. We propose a practical method for automatic procedural synthesis of synchronized harmonic bubble-based sounds from 3D fluid animations. To avoid audio-rate time-stepping of compressible fluids, we acoustically augment existing incompressible fluid solvers with particle-based models for bubble creation, vibration, advection, and radiation. Sound radiation from harmonic fluid vibrations is modeled using a time-varying linear superposition of bubble oscillators. We weight each oscillator by its bubble-to-ear acoustic transfer function, which is modeled as a discrete Green's function of the Helmholtz equation. To solve potentially millions of 3D Helmholtz problems, we propose a fast dual-domain multipole boundary-integral solver, with cost linear in the complexity of the fluid domain's boundary. Enhancements are proposed for robust evaluation, noise elimination, acceleration, and parallelization. Examples of harmonic fluid sounds are provided for water drops, pouring, babbling, and splashing phenomena, often with thousands of acoustic bubbles, and hundreds of thousands of transfer function solves.
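The time-varying superposition of bubble oscillators can be sketched with the classical Minnaert resonance for a spherical air bubble in water. In this sketch, each bubble's transfer weight is a hypothetical constant amplitude rather than a solved Helmholtz transfer function, and a single damping ratio is assumed for every bubble, whereas real bubble damping depends on radius and frequency.

```python
import math


def minnaert_frequency(radius, gamma=1.4, p0=101325.0, rho=998.0):
    """Resonant frequency (Hz) of a spherical air bubble in water (Minnaert)."""
    return math.sqrt(3.0 * gamma * p0 / rho) / (2.0 * math.pi * radius)


def bubble_sound(bubbles, sample_rate=44100, duration=0.05, damping=0.05):
    """Superpose damped sinusoidal bubble oscillators.

    bubbles: list of (start_time, radius, amplitude); amplitude stands in for
    the bubble-to-ear transfer weight (hypothetical constant here).
    damping: hypothetical dimensionless damping ratio shared by all bubbles.
    """
    n = int(round(sample_rate * duration))
    out = [0.0] * n
    for t0, radius, amp in bubbles:
        w = 2.0 * math.pi * minnaert_frequency(radius)
        k0 = int(round(t0 * sample_rate))
        for k in range(max(k0, 0), n):
            t = (k - k0) / sample_rate
            out[k] += amp * math.exp(-damping * w * t) * math.sin(w * t)
    return out
```

For a 1 mm bubble in water at atmospheric pressure, `minnaert_frequency` gives roughly 3.3 kHz, and halving the radius doubles the pitch.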

Optimizing Cubature for Efficient Integration of Subspace Deformations

Steven An | Theodore Kim | Doug L. James

ACM Transactions on Graphics (SIGGRAPH ASIA 2008)

We propose an efficient scheme for evaluating nonlinear subspace forces (and Jacobians) associated with subspace deformations. The core problem we address is efficient integration of the subspace force density over the 3D spatial domain. Similar to Gaussian quadrature schemes that efficiently integrate functions that lie in particular polynomial subspaces, we propose cubature schemes (multi-dimensional quadrature) optimized for efficient integration of force densities associated with particular subspace deformations, particular materials, and particular geometric domains. We support generic subspace deformation kinematics, and nonlinear hyperelastic materials. For an r-dimensional deformation subspace with O(r) cubature points, our method is able to evaluate subspace forces at O(r^2) cost. We also describe composite cubature rules for runtime error estimation. Results are provided for various subspace deformation models, several hyperelastic materials (St. Venant-Kirchhoff, Mooney-Rivlin, Arruda-Boyce), and multimodal (graphics, haptics, sound) applications. We show dramatically better efficiency than traditional Monte Carlo integration.

Staggered Projections for Frictional Contact in Multibody Systems

Danny M. Kaufman | Shinjiro Sueda | Doug L. James | Dinesh K. Pai

ACM Transactions on Graphics (SIGGRAPH ASIA 2008)

We present a new discrete, velocity-level formulation of frictional contact dynamics that reduces to a pair of coupled projections, and introduce a simple fixed-point property of the projections. This allows us to construct a novel algorithm for accurate frictional contact resolution based on a simple staggered sequence of projections. The algorithm accelerates performance using warm starts to leverage the potentially high temporal coherence between contact states and provides users with direct control over frictional accuracy. Applying this algorithm to rigid and deformable systems, we obtain robust and accurate simulations of frictional contact behavior not previously possible, at rates suitable for interactive haptic simulations, as well as large-scale animations. By construction, the proposed algorithm guarantees exact, velocity-level contact constraint enforcement and obtains long-term stable and robust integration. Examples are given to illustrate the performance, plausibility and accuracy of the obtained solutions.

Simulating Knitted Cloth at the Yarn Level

Jonathan Kaldor | Doug L. James | Steve Marschner

ACM Transactions on Graphics (SIGGRAPH 2008)

Knitted fabric is widely used in clothing because of its unique and stretchy behavior, which is fundamentally different from the behavior of woven cloth. The properties of knits come from the nonlinear, three-dimensional kinematics of long, inter-looping yarns, and despite significant advances in cloth animation we still do not know how to simulate knitted fabric faithfully. Existing cloth simulators mainly adopt elastic-sheet mechanical models inspired by woven materials, focusing less on the model itself than on important simulation challenges such as efficiency, stability, and robustness. We define a new computational model for knits in terms of the motion of yarns, rather than the motion of a sheet. Each yarn is modeled as an inextensible, yet otherwise flexible, B-spline tube. To simulate complex knitted garments, we propose an implicit-explicit integrator, with yarn inextensibility constraints imposed using efficient projections. Friction among yarns is approximated using rigid-body velocity filters, and key yarn-yarn interactions are mediated by stiff penalty forces. Our results show that this simple model predicts the key mechanical properties of different knits, as demonstrated by qualitative comparisons to observed deformations of actual samples in the laboratory, and that the simulator can scale up to substantial animations with complex dynamic motion.

Backward Steps in Rigid Body Simulation

Christopher D. Twigg | Doug L. James

ACM Transactions on Graphics (SIGGRAPH 2008)

Physically based simulation of rigid body dynamics is commonly done by time-stepping systems forward in time. In this paper, we propose methods to allow time-stepping rigid body systems backward in time. Unfortunately, reverse-time integration of rigid bodies involving frictional contact is mathematically ill-posed, and can lack unique solutions. We instead propose time-reversed rigid body integrators that can sample possible solutions when unique ones do not exist. We also discuss challenges related to dissipation-related energy gain, sensitivity to initial conditions, stacking, constraints and articulation, rolling, sliding, skidding, bouncing, high angular velocities, rapid velocity growth from micro-collisions, and other problems encountered when going against the usual flow of time.